Eliciting Students’ Voices through Screencast-Assisted “Feedback on Feedback”

María Fernández-Toro, Concha Furnborough, The Open University, United Kingdom

Abstract

Despite the obvious importance of tutors’ assignment feedback, research has suggested that students do not always engage with it. This paper focuses on a new approach to examining student responses to feedback received. Ten distance students of Spanish, from beginner to advanced level, articulated their responses to feedback obtained from their tutor on a particular written assignment using student-generated screencast (Jing) recordings. The recordings were then analysed for cognitive, metacognitive and affective elements. The study demonstrated that motivated students engage with tutor feedback and make active efforts to integrate it into their learning, although sometimes their responses are ineffective, with incorrect tutor assumptions about an individual student’s abilities or expectations leading to unsuccessful feedback dialogue. The findings indicate that this approach could constitute a valuable contribution to enhancing student-tutor dialogue in distance language learning assessment, which would merit further research.

Abstract in Spanish

Pese a su importancia, el feedback de los profesores no siempre es tomado en cuenta por los estudiantes. Este artículo se centra en una nueva técnica para investigar cómo responden éstos al feedback que reciben. 10 estudiantes matriculados en cursos de español a distancia de niveles principiante a avanzado verbalizaron sus respuestas al feedback de sus profesores sobre una tarea escrita y las grabaron en forma de screencast (Jing). Dichas grabaciones se analizaron con relación a elementos cognitivos, metacognitivos y afectivos. El estudio demuestra que los estudiantes motivados se involucran con el feedback de sus profesores y se esfuerzan activamente por integrarlo en su aprendizaje. No obstante sus respuestas no siempre surten efecto, y las asunciones incorrectas de los profesores sobre las habilidades de sus estudiantes pueden llevar a un fracaso del diálogo de aprendizaje. Concluimos que esta técnica puede contribuir a mejorar el diálogo entre profesores y estudiantes de educación a distancia sobre la evaluación del aprendizaje, y constituye una valiosa herramienta para futuras investigaciones.

Keywords: feedback, assessment, student engagement, distance education, screencast, languages

Background

Effective feedback not only enriches the learning experience, but is essential to successful learning (Hurd, 2000, 2006; Ramsden, 2003; White, 2003), yet the results of the UK National Student Survey (Times Higher Education, 2014) show that feedback remains an ongoing challenge for HE institutions in terms of student satisfaction.

Even assuming that the quality of assignment feedback is excellent in its content and timing, it can only be effective provided that learners engage with it (Nicol, 2010; Black & Wiliam, 1998). However, research has shown that learners do not always engage with the feedback provided. In an earlier study, Furnborough and Truman (2009) identified three patterns of student engagement with external feedback amongst distance learners studying languages at beginner level: Group A saw feedback as a learning tool which empowered them to take on more responsibility for their own learning, Group B primarily related it to a sense of achievement (e.g. good grades), and Group C did not value assignment feedback and seemed either unable or unwilling to take their tutor’s comments on board because of doubts or anxieties about their own performance.

So given that feedback is such a potentially valuable tool for effective learning, why would those students fail to engage with assignment feedback or feel dissatisfied with it? A common problem is that there is often a mismatch between the students’ needs and expectations on the one hand, and the tutors’ assumptions and practices on the other (Orsmond & Merry, 2011; Furnborough & Fernández-Toro, forthcoming).

Another line of research relates to the use of IT to improve the quality and effectiveness of assignment feedback. Many educational institutions have adopted electronic assignment management systems that improve the timeliness of feedback and the consistency of record keeping. Feedback can also be delivered through a variety of electronic media, which are especially useful in distance education. For example, in the UK, the Open University routinely uses e-feedback in the form of standard templates for electronic reports (internally known as PT3 forms), annotations on students’ scripts using Word markup, and audio-recorded feedback. Certain tutors also give additional feedback by inserting links to screencast recordings in their written feedback.

The e-Feedback evaluation project

The aim of this project was to evaluate the use of spoken and written e-feedback in a context in which these modes of delivery had been adopted by a Higher Education institution across an entire subject area. One such context is the Open University, where the use of both audio-recorded and written feedback has been standard practice at the Department of Languages for a number of years. The evaluation looked at staff and student perceptions of assignment feedback, the quality of feedback itself, and student engagement with the feedback.

More specifically, the project aimed to evaluate:

- the students’ and tutors’ attitudes to assignment feedback in each of the media commonly used at the OU;

- the quality of feedback in three of the media used in terms of the criteria being assessed and the depth of feedback on strengths and weaknesses;

- the effectiveness of feedback in terms of student engagement and response.

These three strands were evaluated, respectively, by means of staff (N = 96) and student (N = 736) surveys; qualitative analysis of tutor feedback on 200 language assignments; and talk-aloud protocols consisting of screencast recordings in which students (N = 10) talked through the feedback written by their tutors on one of their assignments, or in other words, gave feedback on the feedback. This paper will focus on the last of these strands, hereafter referred to as the ‘feedback on feedback’ (F/F) study.

Feedback on feedback

The F/F study was designed as a follow-up to the staff and student surveys and the analysis of tutor feedback. The aim of the study was to elicit and evaluate the students’ cognitive, metacognitive and affective responses to their tutor’s feedback. In analysing the recordings, special attention was given to the attitudes and perceptions reported in the surveys, as well as the features of tutor feedback that had been identified in the feedback analysis study. For reasons of space, the results of those two studies cannot be reported here, but relevant findings will be reported in the discussion section as appropriate.

Subjects

Participants in the study were adult university students studying distance learning modules in Spanish at the Open University. Out of the 736 language students who took the student survey, the 210 who were taking Spanish modules were invited to participate in the follow-up study. Eighty-eight of these agreed to be contacted and were sent an overall description of the study. Twenty of them subsequently requested the necessary instructions to produce the recordings. The final sample consisted of 10 students, who were the only ones to return a set of recordings. Such a high dropout rate was expected given the voluntary nature of the task, the challenge of trying out a new technological tool, and the fact that these were adult distance learners who had just completed their respective modules. As a result, the sample is representative not of the student population as a whole, but of a highly motivated, high-achieving minority. Indeed, their marks on the assignment used all ranged between 75% and 94%, and this was taken into consideration when interpreting the data. All the levels taught at the OU were represented in the sample, which consisted of two students taking the beginner module, two from the lower intermediate module, four from the upper intermediate, and two from the advanced module. The sample comprised five males and five females. Three of the female students were not native speakers of English; the remaining students were. All were fluent enough to study a final year degree module in the UK and had no difficulty expressing themselves in English.

Method

Students were given a written set of instructions and a screencast showing a simulated talkthrough recorded by one of the researchers. All the necessary material was available online. The recording tool used was Jing, which allows a maximum recording time of 5 minutes. Students were asked to produce two recordings each: one about their marked written script (TMA) and another one about the accompanying feedback summary form (PT3). Students were sent anonymised copies of these document files so that no personal details could be seen on their recordings. In their task brief, they were encouraged to talk us through the assignment feedback, covering any aspects that they considered relevant, such as their first reaction to the feedback, which comments they did or did not understand, which ones they found useful or not useful, what feelings different comments elicited, what use students made of the feedback, and what they had learned from it. Once the recordings were completed, students submitted them by email. Thus, from the initial briefing to the final submission, the entire process took place electronically.

Each student’s recordings were analysed in terms of their use of the two media (TMA script and PT3 form); their cognitive, affective and metacognitive responses to comments on strengths and comments on weaknesses; and their responses to different depths of feedback relating to strengths and weaknesses of their work. The notion of depth, proposed by Brown and Glover (2006), refers to feedback that either indicates a weakness/strength (depth 1), corrects the error/describes the strength (depth 2), or gives an explanation (depth 3). Fernández-Toro, Truman and Walker (2013) suggest an additional level for cases where errors or strengths are categorised, for example when tutors use codes to indicate the category to which an error belongs (e.g. gender agreement). Thus, the four depths considered in this analysis are:

- Indicated;

- Categorised/Described;

- Corrected/Exemplified;

- Explained.

A further category was added where some kind of future action to avoid an error or build on a strength is proposed. As the brief given to the students was fairly open, responses to different types of feedback could not be compared quantitatively. The next section therefore focuses on describing typical responses and proposes a framework for interpreting them.
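As a minimal sketch of how the four depths listed above might be represented when coding tutor comments, the following Python fragment is offered for illustration only; it is not the instrument used in the study. The numbering 1 to 4 is an assumption inferred from the order of the list, the names `Depth`, `deepest` and `tally` are hypothetical, and the sample comments are invented. The additional "future action" category is not modelled here.

```python
from collections import Counter
from enum import IntEnum


class Depth(IntEnum):
    """The four depths of feedback, numbered in list order (an assumption)."""
    INDICATED = 1
    CATEGORISED_OR_DESCRIBED = 2
    CORRECTED_OR_EXEMPLIFIED = 3
    EXPLAINED = 4


def deepest(depths):
    """Return the greatest depth reached by a single tutor comment."""
    return max(depths)


def tally(comments):
    """Count comments by (target, deepest depth).

    Each comment is a pair (target, depths), where target is either
    'weakness' or 'strength' and depths lists every depth it covers.
    """
    return Counter((target, deepest(depths)) for target, depths in comments)


# Hypothetical coded comments, invented purely for illustration.
sample = [
    ("weakness", [Depth.INDICATED, Depth.CORRECTED_OR_EXEMPLIFIED]),
    ("weakness", [Depth.CATEGORISED_OR_DESCRIBED]),
    ("strength", [Depth.INDICATED]),
]
counts = tally(sample)
```

Recording only the deepest level per comment is one possible design choice; a coder interested in which depths co-occur would keep the full list instead.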

Results

Students’ reported strategy for using the feedback

All students reported looking at the PT3 form before the TMA script, and all started by looking at their mark. They were also generally enthusiastic about receiving an overview in the general feedback form. As for the script, one student admitted that she had not really looked at it much, whilst another reported that she normally sets it aside until she has enough time to work systematically through each comment on her script. Printing out the feedback is common practice, sometimes in parallel with the computer, as mark-up comments on Word can be easier to read on screen than on paper. Subsequent use of the feedback was reported in only three cases, normally for revision purposes before the final assessment. Although all students found the feedback useful and clear, one stated that she had not learnt much from it and would just continue doing the same as she had been doing in her assignment.

Students’ responses to feedback on weaknesses

Where tutors annotated or commented on problem areas, a number of possible responses were observed:

- Active integration: Understands the information provided by the tutor and elaborates on it. For example, a correction is given and the student then adds a categorisation (e.g. “gender agreement”) or an explanation (“because población is feminine”); or the tutor gives an error category (e.g. “verb form”) and the student then provides the correction (“I should have written fueron”).

- Attempted integration: Tries to elaborate on the feedback but produces an inaccurate/inappropriate interpretation (e.g. correcting the tense of a verb when the problem actually related to the meaning of the verb).

- Informed acceptance: Appears to understand the information provided in the feedback but does not elaborate on it (e.g. [looking at a spelling correction] “Oh yes, that was silly!”).

- Uninformed acceptance: Acknowledges the information provided in the feedback but there is no evidence of understanding (e.g. [tutor rewrites a sentence] “yeah, that sounds better”).

- Uncertainty: Acknowledges lack of understanding (“Can’t understand why aunque is deleted here”).

- Rejection: Disagrees with the information provided by the tutor (“it does annoy me when she says I should have included more information when the word limit is so ridiculously low”).

- Evaluation: Evaluates the error, either by explaining what caused it (e.g. Russian student says “past tenses are different in Russian”) or by voicing an evaluative judgement about their performance (“silly mistake”).

- Planning: Proposes some kind of action to improve performance (“I must revise prepositions”).

In any of these responses, cognitive and affective elements may be present in varying degrees. The first three are more cognitively oriented. Uninformed acceptance is also cognitively oriented, although it may reflect an underlying avoidance strategy rooted in affective factors such as fear of challenge. Conversely, rejection often has a clear affective component while its roots may be cognitive (e.g. feeling that a correction is unfair because you do not understand it). Evaluation and planning are mostly metacognitive, but again may be related to affect, for example in face-saving judgements such as “silly mistake” or giving reasons for errors in an attempt to justify them.

Students’ responses to feedback on strengths

Cognitive, affective and metacognitive elements were also present in the students’ responses to feedback related to the strengths of their work, though the most evident aspect was the affective response:

- Appreciation of effort recognition: Student is pleased to see his/her efforts acknowledged in the feedback (“It was quite difficult but you see my tutor says well done”; “Two ticks for my quotation at the end! I like that quotation and I am very pleased that my tutor liked it.”). This was the most common response to feedback on strengths.

- Appreciation of personal rapport: Student feels that the feedback treats him/her as an individual (e.g. personal greetings).

Cognitive and metacognitive responses generally mirrored those elicited by feedback on weaknesses, although some response types were less apparent for feedback on strengths:

- Active integration: e.g. tutor says “good introduction” (Depth 2: strength categorised) and student adds that she made sure to include “the mandatory quote” in her introduction (Depth 4: strength explained).

- Attempted integration: A correction may be interpreted as praise (e.g. tutor says “you exceeded the word limit” and student then explains that she always worries that she will not be able to write so much “but you see I exceeded that!”).

- Informed acceptance: e.g. “Good. I got that one”.

- Planning: e.g. “She tells me my referencing system is correct so if I use that in my final assessment I’ll be ok”.

Not too surprisingly, no examples of rejection were found in response to feedback on strengths, though previous research has shown that these can occur in certain cases (Fernández-Toro, Truman & Walker, 2013). Explicit evaluations were also difficult to pinpoint as they were generally blended with planning, integration and affective responses.

Depth of feedback

For reasons of space, only the most indicative responses to different depths of feedback have been summarised in this paper. In the case of feedback on weaknesses, the determining factor for students’ responses was whether tutors had provided enough information to elicit active integration or informed acceptance. Feedback on ‘basic’ mistakes such as spelling and gender agreement did not generally require a correction or an explanation in order to do so; whereas feedback on syntax and lexical errors could more easily result in failed attempts at integration, uninformed acceptance or rejection unless a suitable explanation was provided. The two advanced students who attempted to use vocabulary in a metaphorical way failed to understand why the tutor had corrected the words that they chose and rejected the corrections as “patronising” or repressive: “metaphors have been obliterated by the tutor […] another image that was not appreciated or completely wrong, but it’s not clear. It’s a shame that at level 3 we are not allowed to explore”. In other cases, students just accepted syntax corrections that they did not understand: “I can accept that but I would probably make that mistake again in the future”.

In the case of feedback on strengths, it is worth noting that tutors’ comments including explanations (depth 4) or specific examples drawn from the student’s work (depth 3) are extremely rare in the sample. Comments that simply say that the work is good (depth 1) normally elicit positive affective responses related to effort recognition and personal rapport with the tutor. Ticks elicit similar responses. However, high achievers may find that acknowledging the good quality of their work (for example by giving a high mark) is not sufficient: “I gained pleasing scores of 90%, and again what would I have had to do to achieve 100%?”. Where present in the feedback, examples (depth 3) are welcome: “I like the fact that she gives me specific examples of connectors that I’ve used”. However, only one such comment at depth 3 was found in the sample, and no further depth was used by tutors in comments relating to strengths.

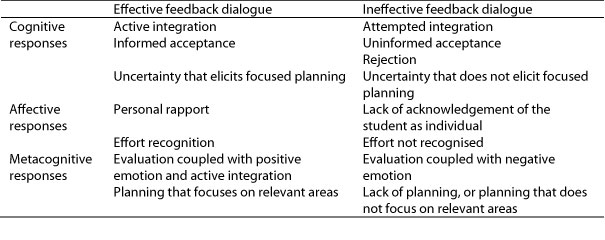

Discussion

The responses described above could be grouped into two categories. The first group comprises responses that indicate that an effective learning dialogue is taking place through the process of giving and receiving feedback, both between tutor and student and within the student him/herself. The second group comprises responses that indicate either that such a dialogue is not taking place at all, or that somewhere in the process communication is breaking down. Effective feedback dialogue elicits knowledge construction (Nicol, 2012), promotes a positive perception of oneself (Nicol & Macfarlane-Dick, 2006), sustains motivation (Dörnyei, 2001; Walker & Symons, 1997), and promotes autonomous learning (Andrade & Bunker, 2009; Truman, 2008). Conversely, ineffective feedback dialogue does not result in knowledge construction, challenges the self, is demotivating and fails to promote learner autonomy. Table 1 summarises the responses that are deemed to indicate effective and ineffective feedback dialogue.

Table 1: Students’ responses indicating effective and ineffective feedback dialogue

As explained above, the participants in this study were highly motivated students, and therefore it would be reasonable to expect a considerable number of responses indicating that effective feedback dialogue was taking place. Indeed, cognitive responses to feedback on weaknesses, especially those related to what students regarded as “silly mistakes” (spelling, agreement, missing references, etc.), tended to result in the construction of knowledge through active integration or informed acceptance. Positive affective responses to feedback on strengths, especially to perceived personal rapport (“she spotted I am French, well done tutor”, pleased to be singled out to receive feedback in Spanish, etc.) and effort recognition were also very common, as were metacognitive responses in the form of planning strategies to improve future performance.

However, somewhat unexpectedly in a group as motivated and high-achieving as this, a number of responses indicating ineffective feedback dialogue were also found alongside these constructive responses. Unhelpful cognitive responses such as uninformed acceptance or attempted integration tended to occur with feedback on errors related to more complex structures, such as syntax corrections that were left unexplained [i.e. depth 3 with no coverage of depth 4]. At more advanced levels, unexplained lexical corrections were perceived by students as the tutor’s failure to appreciate their creative attempts at experimenting with the language through the use of metaphors. This caused them to reject the feedback both on cognitive and affective grounds, as they felt that their personal efforts had not been appreciated. Well-intended tutor support was also rejected when students suspected a one-size-fits-all approach that failed to take their individuality into account (e.g. lengthy technical tips given to a student who had worked for years in IT, cut-and-paste invitation to contact the tutor at the end of a feedback form, etc.).

The presence in the sample of responses indicating both effective and ineffective feedback dialogue is consistent with claims commonly voiced by tutors that their feedback, or at least some of it, often does not achieve its intended purpose. The roots of the communication breakdown may be cognitive, as in cases where the depth of feedback was not sufficient, or affective, as when students felt that their efforts or individuality were not being duly acknowledged. The fact that even a highly motivated group of students such as the participants in this study occasionally failed to integrate tutor feedback suggests that this type of occurrence might be considerably more common in a sample including a wider range of abilities and motivational levels.

Conclusion

This study shows that highly motivated students do engage with tutor feedback and make active efforts to integrate it. However, in some cases their cognitive, affective, or metacognitive responses to the feedback are ineffective. The previous discussion suggests that a tutor’s incorrect assumptions about the student’s abilities, expectations or attitudes in relation to feedback can contribute to these occasional breakdowns in communication. By giving students a voice, the feedback on feedback method used in the study encourages students to articulate their responses to the feedback and makes it possible to identify which comments result in successful or unsuccessful feedback dialogue. The present study has two limitations. Firstly, the self-selected nature of the sample means that it does not represent the student population as a whole, and the study would need to be repeated with a randomly selected sample including less motivated and less able students. Secondly, as the feedback on feedback exercise conducted here was intended for research purposes, the students were addressing the researchers rather than their tutors, thus missing out on a valuable opportunity for genuine feedback dialogue. Despite these limitations, the fact that recordings were submitted at all shows that the method is potentially viable and could be implemented as a means of promoting feedback dialogue between students and tutors, both in face-to-face and distance learning environments. Tutors could, for example, invite all their students to comment on their feedback after the first marked assessment on a course, or they could use the method in a targeted way whenever they suspect that a student is not learning from their feedback. The findings of this study also indicate that high achievers would benefit from the exercise and should be given the opportunity to make their voices heard.

References

- Andrade, M. S., & Bunker, E. L. (2009). A model for self-regulated distance language learning. Distance Education, 30(1), 47-61.

- Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles, Policy & Practice, 5(1), 7-74.

- Brown, E., & Glover, C. (2006). Evaluating written feedback on students’ assignments. In C. Bryan & K. Clegg (Eds.), Innovative Assessment in Higher Education (pp. 81-91). London: Routledge.

- Dörnyei, Z. (2001). Teaching and researching motivation. Harlow: Longman.

- Fernández-Toro, M., Truman, M., & Walker, M. (2013). Are the principles of effective feedback transferable across disciplines? A comparative study of tutor feedback on written assignments in Languages and Technology. Assessment and Evaluation in Higher Education, 38(7), 816-830.

- Furnborough, C., & Truman, M. (2009). Adult beginner distance language learner perceptions and use of assignment feedback. Distance Education, 30(3), 399-418.

- Furnborough, C., & Fernández-Toro, M. (forthcoming). Evaluating feedback on language assignments in distance learning: tutor and student perspectives.

- Hurd, S. (2000). Distance language learners and learner support: Beliefs, difficulties and use of strategies. Links and Letters, 7, 61-80.

- Hurd, S. (2006). Towards a better understanding of the dynamic role of the distance language learner: Learner perceptions of personality, motivation, roles and approaches. Distance Education, 27(3), 303-329.

- Nicol, D. J. (2010). From monologue to dialogue: improving written feedback processes in mass higher education. Assessment and Evaluation in Higher Education, 35(5), 501-517.

- Nicol, D. J. (2012). Assessment and feedback – In the hands of the student. JISC e‑Learning Programme Webinar, 23 January 2013.

- Nicol, D. J., & Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199-218.

- Orsmond, P., & Merry, S. (2011). Feedback alignment: effective and ineffective links between tutors’ and students’ understanding of coursework feedback. Assessment and Evaluation in Higher Education, 36, 125-136.

- Ramsden, P. (2003). Learning to teach in higher education. London: Routledge.

- Times Higher Education (2014). National Student Survey 2014 results show record levels of satisfaction. Retrieved from https://www.timeshighereducation.co.uk/news/national-student-survey-2014-results-show-record-levels-of-satisfaction/2015108.article

- Truman, M. (2008). Self-correction strategies in distance language learning. In T. Lewis & M.S. Hurd (Eds.), Language Learning Strategies in Independent Settings (pp. 262-282). Bristol: Multilingual Matters.

- Walker, C. J., & Symons, C. (1997). The meaning of human motivation. In J.L. Bess (Ed.), Teaching well and liking it: Motivating faculty to teach effectively (pp. 3-18). Baltimore: Johns Hopkins University Press.

- White, C. (2003). Language learning in distance education. Cambridge: Cambridge University Press.

Acknowledgement

The e-Feedback Evaluation Project (eFeP) was a two-year JISC-funded collaborative project involving the Open University (OU) and the University of Manchester, UK.