Identifying Learner Types in Distance Training by Using Study Times

Klaus D. Stiller [klaus.stiller@ur.de], Department of Educational Science, Regine Bachmaier [regine.bachmaier@ur.de], Computer Centre, University of Regensburg, Germany

Abstract

Usage data of online distance-learning environments can inform educators about the learning and performance of students. Among the usage variables, various time measures are indicative of learning approaches and performance level. In this study, we obtained study time parameters from distance-learning students and explored how they are connected to learner characteristics and learning. The data from 159 in-service teachers studying a script-based, modularized distance training on media education were analysed. Students were clustered according to their module study times (using objective and subjective data) into 117 long and 42 short study-time learners (i.e., learners who had studied at least one of their completed modules very fast). The clusters were compared on (a) their learning strategy usage, domain-specific prior knowledge, intrinsic motivation, and computer attitude and anxiety and (b) their experienced difficulty of content and learning, their invested effort and experienced pressure while learning, and their performance. The clusters were expected to be meaningful entities that differ in relevant characteristics that influence distance-learning experience and performance. Long study-time learners showed a higher level of motivation and performance but a lower level of prior knowledge. We concluded that study time could be used as an indicator of problematic students.

Abstract in German (English translation)

Usage data from online learning environments in distance learning can provide information about students' learning processes and learning performance. Among usage data, various time measures have proven to be indicators of learning behaviour and performance level. In this study, learners' study time was recorded, and we examined how it relates to learner characteristics, learning experience, and learning success. The data of 159 teachers who completed a script-based, modularized distance course on media education were analysed. Based on their study times per module (using objective and subjective data), the students were divided into two groups: one group (n = 117) that worked on each completed module for an appropriately long time and one group (n = 42) that worked through at least one module very quickly. The groups were compared with regard to (a) learning strategy use, domain-specific prior knowledge, intrinsic motivation, attitude towards computers, and computer anxiety and (b) perceived difficulty of the content and of learning, invested effort, experienced pressure while learning, and learning performance. The study-time groups were expected to be meaningful entities that differ in relevant characteristics that influence experiences in distance learning and learning performance. The group with appropriately long study times showed higher motivation and learning performance but started the course with less prior knowledge. The results showed that study time can be used as an indicator of problematic students.

Keywords: distance training, student characteristics, self-regulated learning, cognitive load, distance-learning performance

Introduction

Distance learning research investigates how to foster successful student learning (Rowe & Rafferty, 2013). One research focus is to explore the extent to which learner characteristics and skills determine learning outcomes and to develop predictive models of performance (Akçapınar et al., 2015; Yukselturk & Bulut, 2007). Although these approaches often start with diagnosing learner characteristics before learning (e.g., Yukselturk & Bulut, 2007), diagnostic methods applied during learning have become popular (Kinnebrew et al., 2013; Lile, 2011). Modern approaches use data mining and learning analytics to identify learners who have problems. These methods attempt to benefit from objective data provided by various types of log systems that record online traces (Akçapınar, 2015). Data mining methods might yield better online diagnostics and interventions when the mechanisms underlying usage patterns are known. Hence, relating usage patterns to student characteristics has been recommended to render them meaningful (Akçapınar, 2015).

The following study obtained objective and subjective study time indicators and used them to identify groups of learners in a distance-training course. First, the groups were compared on characteristics that have already been shown to be empirically relevant for distance learning, including motivational, affective, cognitive, and skill aspects (i.e., domain-specific prior knowledge, intrinsic motivation, computer attitude, computer anxiety, and use of learning strategies). This analysis should show the extent to which these correlates are related to study time, which could serve as a starting point for adequate interventions. Second, group differences in learning were explored to show how study time is related to learning. Our investigation was conducted against the background of self-regulated learning (Rowe & Rafferty, 2013).

Self-regulated learning, learning strategies, and motivation

“Self-regulation refers to self-generated thoughts, feelings, and actions that are planned and cyclically adapted to the attainment of personal goals” (Zimmerman, 2000, p. 14). Self-regulation is relevant in multiple areas of human functioning but plays a particularly important role for learning in academic settings. In such settings, competent learning is basically understood as the initiation and adequate use of motivational, cognitive, metacognitive, and behavioural skills by learners (Weinstein et al., 2011). Accordingly, Rowe and Rafferty (2013) defined self-regulated learning as a process that “involves students’ intentional efforts to manage and direct complex learning activities toward the successful completion of academic goals” (p. 590).

According to Pintrich (1999), self-regulated learning involves the use of learning strategies, which monitor, control, and regulate the basic processes (e.g., eye movements and the decoding of verbal and pictorial information) required for performing a learning task (Weinstein & Mayer, 1986). Learning strategies are categorized as cognitive, metacognitive, and resource management strategies. Cognitive strategies include rehearsal, elaboration, and organization, which are strategies of processing information (Weinstein et al., 2011). Rehearsal strategies focus on retaining information in working memory, elaboration strategies focus on integrating new information with prior knowledge, and organizational strategies serve to reduce and structure information. Metacognitive strategies include the planning, monitoring, and regulation of cognitive processes (Griese et al., 2015; Pintrich, 1999). Resource management strategies involve activities that generally support learning and shield against external disturbances or other influences (Griese et al., 2015).

Self-regulated learning has been found to be significantly related to (academic) performance (e.g., Agustiani et al., 2016; Song et al., 2016) and is assumed to be a key component of successful distance learning because distance learning places high demands on the self-regulation skills needed to succeed (Rowe & Rafferty, 2013). Research in the context of online and distance learning supports this assumption, showing that management skills (in particular, managing time, organizing learning effectively, and arranging comfortable conditions for studying) predicted dropout and learning achievement (e.g., Hart, 2012; Ivankova & Stick, 2007; Lee & Choi, 2011; Lee et al., 2013; Stiller & Bachmaier, 2017a, 2017b; Tsai & Tsai, 2003; Yukselturk & Bulut, 2007).

Related to self-regulated learning, Lee (2013) discussed deep and surface learning approaches, characterized by deep and surface motives and strategies. Learning approaches refer to intentionally adopted behavioural patterns by learners while performing specific learning tasks. Hence, learning approaches are not learner characteristics. A surface learning approach is more likely to be guided by extrinsic motives with the intention of completing a course with minimal effort, and a deep-learning approach is more guided by intrinsic motives with a focus on comprehension (Baeten et al., 2013; Laird et al., 2014; Lee, 2013). Empirical research shows that deep learning approaches (i.e., both deep motives and strategies) correlate positively with online and distance performance, whereas surface learning approaches (i.e., both surface motives and strategies) correlate negatively (e.g., Akçapınar, 2015; Lee, 2013; Yurdugül & Menzi Çetin, 2015).

Overall, motivation to learn has been a focal correlate of dropout and learning success in distance and online training. Intrinsic motivation refers to performing a task because it is inherently interesting or enjoyable, whereas extrinsic motivation pertains to performing a task because it leads to a contingent outcome that is separable from the action itself (Legault, 2016; Ryan & Deci, 2000). Intrinsic motivation is correlated with high-quality and successful distance learning. In general, motivation has been found to be positively correlated with course persistence and negatively with dropout (e.g., Grau-Valldosera & Minguillón, 2014; Hart, 2012; Ivankova & Stick, 2007) and positively with performance (e.g., Artino, 2008; Waschull, 2005; Yukselturk & Bulut, 2007). A higher level of intrinsic motivation might make learners more resilient against learning problems, and thus against the risk of dropping out, than less intrinsically motivated or extrinsically motivated learners. A higher motivational level might also make learners invest more resources in learning and, in particular, process information more deeply, which in turn contributes to successfully passing tests (e.g., Lee, 2013; Yurdugül & Menzi Çetin, 2015).

Prior knowledge, computer attitude and anxiety, and self-regulated learning

Prior knowledge is known to predict school and academic performance and especially to influence learning in various instructional settings (e.g., Hailikari et al., 2008; Song et al., 2016; van Gog et al., 2005). In general, possessing prior knowledge is considered a desirable condition for learning (e.g., Chi, 2006). Learning succeeds best when new information can be connected to knowledge available in long-term memory (van Gog et al., 2005), so learners who can more easily relate new information to prior knowledge should perform better. In short, the more students know, the more they gain when studying. In the context of complex learning environments, including distance learning scenarios, domain-specific prior knowledge (e.g., previous GPA or academic performance) can improve performance and reduce dropout (e.g., Jiang et al., 2015; Knestrick et al., 2016; Song et al., 2016; Stiller, 2019; Stiller & Bachmaier, 2017a, 2017b).

Studies have also reported that a higher level of prior knowledge correlates with higher levels of self-regulation skills (e.g., Chi, 2006; Hailikari et al., 2008). Although prior knowledge and self-regulation each contribute to explaining learning performance, their joint impact on performance has rarely been investigated (Song et al., 2016). In a recent study, Magno (2016) reported high multiple correlations of prior knowledge and self-regulation skills with academic performance in various subjects but did not report the details of the regression analyses. It therefore remains unclear how much each of the seven self-regulation strategies and three prior knowledge aspects contributed to the prediction of performance. Another recent study by Song et al. (2016) found no relationship between prior knowledge and self-regulation but reported significant effects of both prior knowledge and self-regulation on medical clerkship students’ performance.

Attitudes are mostly viewed as being composed of affective, conative, and cognitive components (Richter et al., 2010). From a cognitive perspective, attitudes are often conceptualized as beliefs that are organized by topic. In the context of distance and online learning, investigating computer attitudes could be informative. Richter and colleagues conceptualized computer attitudes as beliefs, developed through personal experience, about the computer being useful as an instrument for working and learning. They also conceptualized computer anxiety as a trait comprising affective aspects, such as feelings of anxiety, and cognitive aspects, such as worrisome thoughts. Computer anxiety and computer attitude are assumed to influence self-regulated learning, especially learning strategy usage.

The empirical literature has frequently investigated how computer anxiety and computer attitudes relate to performance and to the use of learning systems and computers, focusing on computer self-efficacy as a mediating variable (e.g., Hauser et al., 2012; Saadé & Kira, 2009). The prevailing assumption is that computer anxiety and computer attitude directly influence self-efficacy, which in turn directly influences system usage and performance. In this context, negative attitudes and a considerable level of computer anxiety might lead to lower self-efficacy and hence to surface learning or inadequate usage of learning strategies. Conversely, the adequate use of learning strategies (e.g., information processing, monitoring comprehension, selecting main ideas, test strategies, resource management, and time management) has been found to correlate with positive computer attitudes and a lack of computer anxiety (Tsai & Tsai, 2003; Usta, 2011; Wong et al., 2012).

Usage data of learning environments, study time, and learning

Usage data of an online or distance learning environment can inform educators about learning and in particular about performance (Akçapınar et al., 2015; Kinnebrew et al., 2013; Lile, 2011). The indicators that can be used for analyses depend on the user actions that can be performed in an online learning environment (Akçapınar, 2015; Akçapınar et al., 2015; Jiang et al., 2015; Lile, 2011). Two main categories of data are the starting point for analysis: occurrence of events (e.g., logins, posts, posts viewed, questions asked, questions answered, tasks completed) and duration of events (e.g., time spent on self-assessments, time needed to solve a task). Usage patterns derived from logfile analyses can be related to the level of performance and to surface or deep learning approaches (Akçapınar, 2015; Akçapınar et al., 2015). The Akçapınar studies revealed that less intensive usage, reflected by a low frequency of events (e.g., logins, posts) and short event times (e.g., total time spent in the online environment), correlated with surface learning and low performance, whereas the opposite pattern of intensive usage correlated with deep learning and high performance.

Akçapınar (2015) and Akçapınar et al. (2015) also found that, among the investigated usage pattern variables, various time measures were indicative of learning approaches and level of performance, suggesting that time spent on the learning task is important for successful online learning apart from frequency of participation (e.g., Akçapınar, 2015). Furthermore, research results suggest that the time spent with specific information or actions might be more indicative of successful learning than the overall learning time (e.g., Jiang et al., 2015). In sum, overall learning time is composed of various partial durations, such as the time spent studying an educational video and the time spent on other, perhaps less useful, actions in a learning environment. Some of these partial durations might be more indicative of successful learning than the overall time, especially if they are shown to correlate more strongly with effective and efficient learning processes.
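To make the two data categories concrete, the following Python sketch derives event counts (occurrence) and per-activity durations (duration) from a timestamped log. It is a minimal illustration under assumptions: the log format, user IDs, and activity names are hypothetical, and because the Moodle system used in this study recorded only entry timestamps of course pages, each activity is assumed to last until the next logged entry.

```python
from collections import Counter
from datetime import datetime

# Hypothetical log rows: (user_id, ISO entry timestamp, activity).
# The real Moodle log schema is not given in the text.
LOG = [
    ("u1", "2017-11-02T18:00:00", "login"),
    ("u1", "2017-11-02T18:02:00", "prior_knowledge_test"),
    ("u1", "2017-11-02T18:10:00", "script_page"),
    ("u1", "2017-11-02T19:05:00", "final_test"),
    ("u1", "2017-11-02T19:20:00", "logout"),
]


def event_counts(log, user):
    """Occurrence of events: how often each activity was logged for one user."""
    return Counter(activity for uid, _, activity in log if uid == user)


def event_durations(log, user):
    """Duration of events in minutes, summed per activity.

    Only entry timestamps exist, so each activity is assumed to last until the
    next logged entry; the final entry therefore gets no duration.
    """
    entries = sorted(
        (datetime.fromisoformat(ts), activity)
        for uid, ts, activity in log
        if uid == user
    )
    durations = {}
    for (start, activity), (end, _) in zip(entries, entries[1:]):
        minutes = (end - start).total_seconds() / 60
        durations[activity] = durations.get(activity, 0.0) + minutes
    return durations


durations = event_durations(LOG, "u1")
total_minutes = sum(durations.values())  # overall learning time
```

Summing the partial durations gives the overall learning time (80 minutes in this invented log), whereas the `script_page` entry alone (55 minutes) is a partial duration that may be more informative. Pauses would silently inflate such durations, which is why long logged periods need to be treated with caution.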

Research objectives and expectations

The aim was to profile groups of students based on their study periods in a distance training. To this end, students were first clustered into fast and slow learners according to their module study times. The clusters were then compared (a) on the learner characteristics of learning strategy usage, domain-specific prior knowledge, intrinsic motivation, computer attitude, and computer anxiety, as well as on their demographic characteristics, and (b) on the experienced difficulties of content and learning, the invested effort and experienced pressure while learning, and performance. The clusters were expected to be meaningful entities that differ in (a) relevant individual characteristics that influence distance learning and (b) learning experience and performance (cf. Stiller & Bachmaier, 2019).

Method

Sample

The data of 159 (68% female; age: M = 37.42 years, SD = 8.98, range from 21 to 60 years) of the 318 in-service teachers who registered voluntarily for a distance training about media education in the German Federal State of Bavaria were analysed for this study. The criterion for analysis was completing at least one training module by taking the final module test. One half of the 318 registered teachers (159) dropped out of the training before completing any module. In-service teachers were recruited by promoting the training offline via flyers at all primary schools, secondary general schools, intermediate schools, and grammar schools in Bavaria (see the German classification of schools in Federal Ministry of Education and Research, 2017). Most teachers worked in intermediate and grammar schools, followed by primary and secondary general schools, and other school types (see Table 5 in the results section).

Description of the distance training

The training was based on a modular design and instructional texts. Students could learn at their own pace and at any time, and they could freely decide how many of the modules to study and in which sequence. The starting point of the training was a Moodle course portal. The course consisted of nine modules: an introductory module and eight modules about media education (e.g., Generation SMS: The use of mobile phones by children and adolescents; How to find a good learning program: Evaluation criteria for educational software). The introductory module informed students about content, technical requirements, course organization, and learning skills. Each module had a linear structure of six sections: (a) an overview of the content and the teaching objectives, (b) a case example of a real-life problem, (c) a test of domain-specific prior knowledge used for activating prior knowledge and giving feedback about its level, (d) instructional text and optional supporting material, (e) a questionnaire about studying the module, and (f) a final performance test that evaluated learning success and provided feedback. Studying a module was estimated to take 60 to 90 minutes. Students were supported via email, chat, and phone.

Procedure and measurements

The training was offered during a Bavarian school year lasting from October to July. The first login directed a student to the introductory module, which could be studied optionally. Students then completed the first questionnaire assessing demographic information and the student characteristics in focus, after which the eight course modules became accessible. A prior-knowledge test was presented at the beginning of each module and a final module test at the end. Students answered a questionnaire about each module before completing it by taking the final module test. Thus, a student could provide up to eight data sets, one for each module.

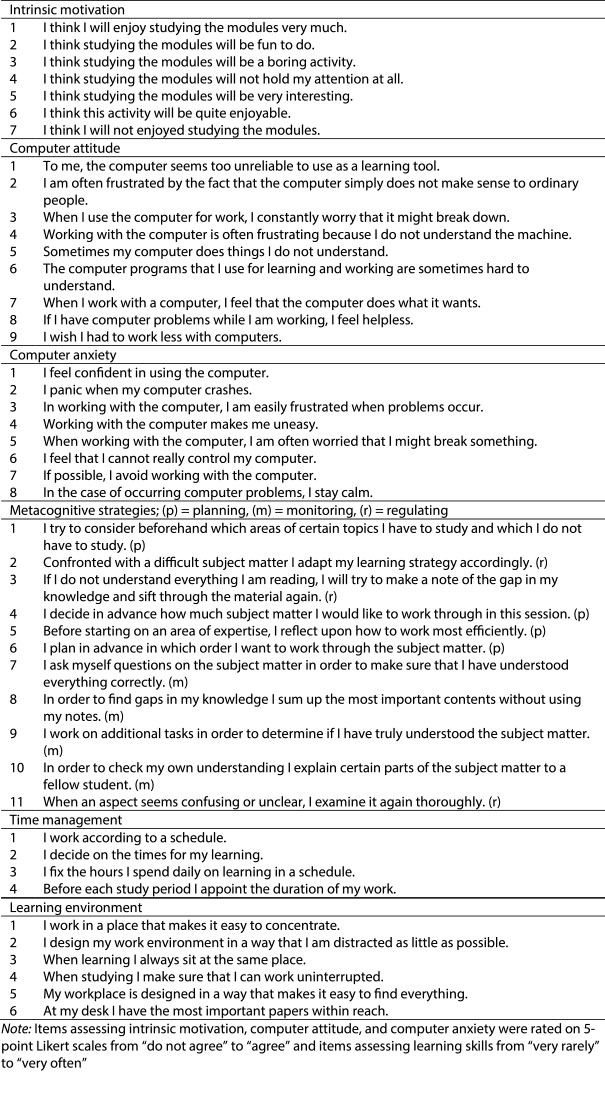

Table 1: Translated German items of the first questionnaire

The first questionnaire (see Table 1) assessed intrinsic motivation (Interest/Enjoyment scale of the Intrinsic Motivation Inventory (IMI); Leone, 2011), attitude towards computers (i.e., the negative component of computer attitude in the sense of the computer being regarded as an uncontrollable machine; “Personal experience/learning and working/autonomous entity” scale of the Questionnaire for the Content-Specific Measurement of Attitudes toward the Computer (QCAAC); Richter et al., 2010), computer anxiety (“Confidence in dealing with computers and computer applications” scale of the QCAAC; Richter et al., 2010), and skills in using metacognitive learning strategies, time management strategies, and strategies to arrange an adequate learning environment (Griese et al., 2015; Wild & Schiefele, 1994). Scale scores were calculated as means of items. A higher score expresses a higher level of the assessed characteristic, except for computer attitude, where a higher score indicates a less negative attitude (and could thus be loosely interpreted as a more “positive” attitude).

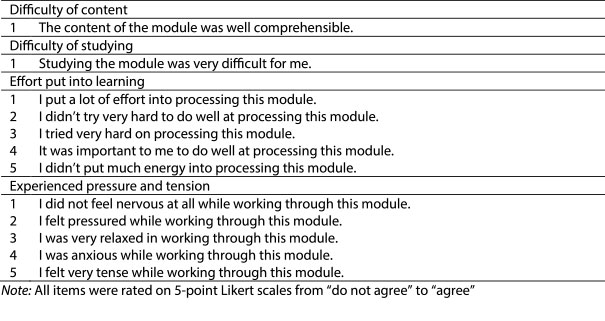

The module questionnaires (see Table 2) measured the effort put into learning and the tension experienced while learning (Effort/Importance and Pressure/Tension scales of the IMI; Leone, 2011), and the difficulty of the content and studying (one item each, often used for measuring intrinsic cognitive load and overall load against the background of Cognitive Load Theory; de Jong, 2010). Scale scores were calculated as means of items for each completed module, and the final individual scores were calculated as the mean of the module scores (varying from one to eight scores per student). A higher individual score expresses a higher level of the rated characteristics.
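The two-step aggregation described above (item means per module, then the mean of module scores per person) can be sketched as follows. This is an illustrative Python sketch, not the authors' analysis code; the rating values are invented, and every completed module is weighted equally regardless of how many modules a student completed.

```python
from statistics import mean


def module_scale_score(item_ratings):
    """Scale score for one completed module: the mean of its item ratings."""
    return mean(item_ratings)


def individual_score(module_ratings):
    """Final individual score: the mean of the module scale scores.

    `module_ratings` holds one list of item ratings per completed module,
    so it may contain between one and eight lists.
    """
    return mean(module_scale_score(items) for items in module_ratings)


# Hypothetical effort ratings of one student who completed three modules.
effort = [[4, 5, 3], [2, 3, 4], [5, 5, 5]]
score = individual_score(effort)
```

Here the three module scores are 4, 3, and 5, so the individual score is their mean, 4.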

Table 2: Translated German items of the module questionnaire

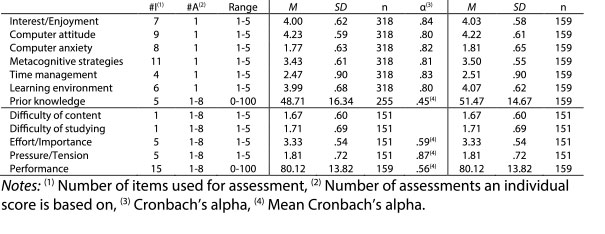

Multiple-choice tests were used to assess prior knowledge (five items) and performance (15 items, including the pre-test items) for each module. Each item comprised four answer options, at least one of which was correct. The tests were considered appropriate for measuring learning success because the training was intended to provide factual knowledge. Per module, test scores were calculated as the mean of the items and then expressed as percent correct for prior knowledge and performance. Finally, each student's scores were averaged across the number of completed tests. Table 3 presents the features of all used scales.
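A comparable sketch for the knowledge tests follows. The text does not say how items with more than one correct answer option were scored, so the sketch simply assumes that each item yields a score between 0 and 1; the function names and student data are hypothetical.

```python
from statistics import mean


def percent_correct(item_scores):
    """Percent correct for one module test; item scores are assumed to lie in [0, 1]."""
    return 100 * mean(item_scores)


def mean_percent(tests):
    """Average percent correct across all tests of one type that a student completed."""
    return mean(percent_correct(scores) for scores in tests)


# Hypothetical student who completed two modules.
prior_tests = [[1, 0, 1, 1, 0], [1, 1, 1, 0, 0]]      # five items each
final_tests = [[1] * 12 + [0] * 3, [1] * 9 + [0] * 6]  # fifteen items each

prior = mean_percent(prior_tests)
performance = mean_percent(final_tests)
gain = performance - prior  # knowledge gain in percentage points
```

For this invented student, prior knowledge averages 60% and performance 70%, a gain of 10 percentage points. Because the 15 final-test items include the five pre-test items, the two scores are not independent.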

Table 3: Descriptive statistics of measurements

Results

A short and a long study-time group were identified using the following three criteria.

- The objectively measured period between completing the prior knowledge test and starting the final module test was calculated as an indicator of a module’s study time. These periods were assumed to be reliable for detecting short study times. The criterion for a short study time was set to 20 minutes, compared with the estimated workload of 60 to 90 minutes for studying a module.

- Longer periods are not reliable measures because they might include activities unrelated to learning (e.g., pauses or time between downloading and studying a script). Therefore, the self-reported study time was used instead as an indicator of study time in these cases. The criterion for classifying a self-reported study period as short was set to 25 minutes.

- Finally, learners who studied at least one of the modules with a short study time were assigned to the short study-time group; otherwise, they were assigned to the long study-time group.
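The three criteria can be expressed as a small classification rule. The Python sketch below is one possible reading of them, not the authors' code: the function names are hypothetical, and both the treatment of boundary values (strictly below the cut-off) and the exact hand-off from logged to self-reported time are assumptions.

```python
# Cut-offs from the text; treating them as strict "below" thresholds is an assumption.
OBJECTIVE_CUTOFF_MIN = 20    # logged period from prior-knowledge test to final test
SELF_REPORT_CUTOFF_MIN = 25  # self-reported study time


def module_is_short(logged_min, self_reported_min):
    """Classify one module's study time as short (assumed reading of the criteria).

    Short logged periods are trusted directly; longer logged periods may contain
    pauses, so the self-reported time decides instead.
    """
    if logged_min < OBJECTIVE_CUTOFF_MIN:
        return True
    return self_reported_min < SELF_REPORT_CUTOFF_MIN


def study_time_group(modules):
    """Return 'short' if any completed module was studied briefly, else 'long'.

    `modules` is a list of (logged_min, self_reported_min) pairs.
    """
    if any(module_is_short(logged, reported) for logged, reported in modules):
        return "short"
    return "long"


# Hypothetical learners.
learner_a = [(45, 60), (15, 30)]    # second module logged under 20 minutes
learner_b = [(120, 70), (300, 40)]  # long logged periods, long self-reports
learner_c = [(50, 20)]              # long logged period, short self-report
```

Under this reading, learners A and C fall into the short study-time group and learner B into the long study-time group. The any-module rule mirrors the third criterion, so a single brief module is enough to move a learner into the short group.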

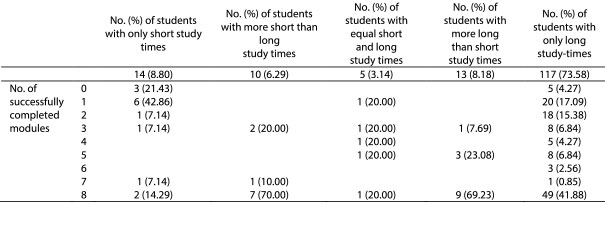

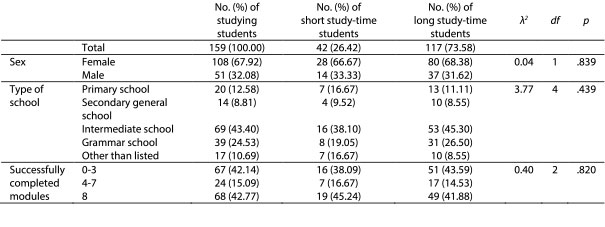

This process resulted in 117 long study-time learners and 42 short study-time learners. Slightly more than half (57%) of the students in the short study-time group studied most of their modules quickly. No differences were found between the study-time groups for sex, age, type of school, and number of successfully completed modules (for analysis, the categories of 0 to 3 and 4 to 7 completed modules formed one group each; see Tables 4 and 5). The students mostly completed one (17%), two (12%) or all modules (43%), but less often three to seven modules (23%).

Table 4: The percentage of students working on modules in short or long study times

Table 5: The demographic characteristics of the teachers and their successfully completed modules

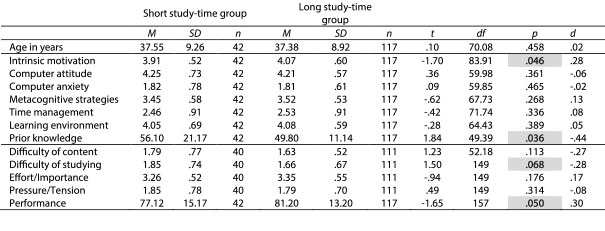

The study-time groups were compared on the learner characteristics and the study ratings (see Table 6). Significant differences were found only for prior knowledge, intrinsic motivation, and performance. Long study-time learners showed a higher level of motivation and performance but a lower level of prior knowledge. The ANOVA with repeated measures of prior knowledge and performance revealed a large effect of time, F(1, 157) = 265.48, p < .001, η² = .63, and a medium-sized interaction effect, F(1, 157) = 10.41, p < .002, η² = .06, showing that the long study-time students gained more knowledge than the short study-time students.
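The reported effect sizes can be checked against the F statistics. Assuming they are partial eta squared values (the text reports only η²), they follow from η²p = F·df_effect / (F·df_effect + df_error); the small Python sketch below reproduces both reported values to two decimals.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


time_effect = partial_eta_squared(265.48, 1, 157)  # reported as .63
interaction = partial_eta_squared(10.41, 1, 157)   # reported as .06
```

The agreement suggests that the reported η² values are partial effect sizes for the within-subject time factor and the time × group interaction.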

Table 6: Means and standard deviations of the student groups, with test results and effect sizes. One-sided Welch tests and t tests were calculated.
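Because Table 6 relies partly on Welch tests, the following sketch shows how Welch's t statistic and its Welch-Satterthwaite degrees of freedom are computed. It uses only the Python standard library and invented data; it is not the authors' analysis, and the one-sided p-value (from a t distribution with the returned degrees of freedom) is left out to keep the example dependency-free.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    Unlike Student's t test, it does not assume equal group variances.
    """
    se2_a = variance(a) / len(a)  # squared standard error of group a's mean
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


# Hypothetical scores of two groups.
t, df = welch_t([1, 2, 3, 4, 5], [2, 4, 6])
```

A one-sided test then compares t with only one tail of the t distribution, chosen according to the hypothesized direction of the group difference.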

Discussion

Two learner groups were formed according to study time for each module. One group studied at least one of their modules quickly, spending little time on it. Hence, these students likely missed important information that could not be organized and integrated into an adequate knowledge representation. Students from the second group spent reasonably long periods studying, which allowed an adequate selection, organization, and integration of important information. Evidence for this assumption was found only for performance, in line with Akçapınar (2015) and Akçapınar et al. (2015), which reflects the results of Stiller and Bachmaier (2019) with a sample of trainee teachers. The analysis of learning experience measures (i.e., ratings of content difficulty, studying difficulty, invested effort, and experienced tension while learning) descriptively showed lower difficulty and tension ratings and higher effort ratings for the long study-time group, with a tendency toward significance for the difficulty ratings. These results only partially replicate the results by Stiller and Bachmaier (2019). Overall, effect sizes mostly fall in the small to medium range.

Groups also differed in motivation and prior knowledge. The motivation findings are consistent with results on intrinsic motivation (e.g., Grau-Valldosera & Minguillón, 2014; Hart, 2012; Ivankova & Stick, 2007): learners who spent more time studying were more motivated. This pattern is not surprising given that intrinsic motivation is understood to be inherently linked to self-motivated learning (Ryan & Deci, 2000). The finding that short study-time learners had a higher level of prior knowledge could be an artefact of the method. A module was deemed successfully completed when a student correctly answered at least 50% of the items in the given module test. Most students of the short study-time group had already met that criterion after the prior knowledge test. Consequently, they might have expected to perform equally well in the module post-test without spending much time studying the module. This procedure might have contributed to faster study times and worse performance.

Unexpectedly, the results are not consistent with empirical results on learning skills (e.g., Hart, 2012; Ivankova & Stick, 2007; Lee & Choi, 2011; Lee et al., 2013), and they do not fully replicate the findings of Stiller and Bachmaier (2019), who found higher metacognitive skills and higher skills in arranging an adequate learning environment for the long study-time group of trainee teachers. One explanation may lie in the working conditions of the students. Trainee teachers are assumed to experience a higher workload and overall stress during their practical teacher-training period at schools, in which planning, preparing, and regulating their learning and teaching are emphasized. Thus, they might be challenged to use their skills more effectively to integrate a continuing vocational distance-training course into their daily work (Stiller & Bachmaier, 2019). In-service teachers are assumed to experience less workload and stress than trainee teachers because of their experience with daily work routines related to teaching and administration. Hence, strategy skills might be less important for experienced teachers when integrating such a course into their daily work.

Overall, the results must be interpreted carefully. Although the sample size was adequate, the modular design of the distance training, the use of downloadable PDF documents as instructional material, and the special target group of teachers all limit the generalization of conclusions, especially to whole distance study programs. Nevertheless, the present results are largely consistent with the theoretical approach and the empirical evidence reported in the literature.

Study time could be used as a predictor of how students study and thus for identifying students who should be guided toward a deep learning approach (Akçapınar, 2015; Akçapınar et al., 2015). Self-reported study times might be especially important when logfiles cannot be used for calculating study times, for example because of institutional security policies or because the files lack this kind of information (e.g., in distance learning courses that provide offline instructional material). In general, when logfiles can be used, additional indicators related to learning approaches are likely to be available (Akçapınar, 2015; Akçapınar et al., 2015; Kinnebrew et al., 2013; Lile, 2011). The data used in this study were obtained from a Moodle system that recorded the entry timestamps of course pages.

A problem might arise from trainings that are free to everybody, as was the case for the distance training in this study, which was free to every trainee and in-service teacher. A wide range of motives could lead to course registration and participation, making it difficult to assess which students are willing to study and complete the course and which students could be targets of interventions. One particular feature of the present training might have encouraged gambling behaviour because the hurdle to complete a module was set low by using multiple-choice items of low to medium difficulty that tested factual knowledge. Thus, students could have tried their luck in subsequent module tests with little effort. More challenging tasks, which normally cannot be solved by guessing, might instead have led such learners to drop out. Future research could first identify user groups and analyse these groups separately to gain clearer insights into the factors that lead to dropout and learning success.

For practice and research, combining logfile analyses with an initial diagnosis of relevant learner characteristics and of the conditions under which students study seems promising. Logfile analyses could especially be used to support students in their learning behaviour and lead them to higher performance, and they might also be used to identify and support students who drop out after having studied parts of a training (Akçapınar, 2015; Akçapınar et al., 2015; Kinnebrew et al., 2013; Lile, 2011). In complex educational environments such as study programs, other possible correlates could be analysed, such as academic background, grade-point average, or previous distance learning experience and success (Lee & Choi, 2011; Wladis et al., 2014).

References

- Agustiani, H., Cahyad, S., & Musa, M. (2016). Self-efficacy and self-regulated learning as predictors of students’ academic performance. The Open Psychology Journal, 9, 1-6.

- Akçapınar, G. (2015). Profiling students’ approaches to learning through Moodle logs. In Proceedings of MAC-ETL 2015 in Prague. Multidisciplinary Academic Conference on Education, Teaching and Learning in Prague (pp. 242-248). Prague, Czech Republic: MAC Prague consulting Ltd.

- Akçapınar, G., Altun, A., & Aşkar, P. (2015). Modeling students’ academic performance based on their interactions in an online learning environment. Elementary Education Online, 14(3), 815-824.

- Artino, A. R. (2008). Motivational beliefs and perceptions of instructional quality: Predicting satisfaction with online training. Journal of Computer Assisted Learning, 24, 260-270.

- Baeten, M., Struyven, K., & Dochy, F. (2013). Student-centered teaching methods: Can they optimize students’ approaches to learning in professional higher education? Studies in Educational Evaluation, 39, 14-22.

- Chi, M. T. H. (2006). Two approaches to the study of experts’ characteristics. In K. A. Ericsson, N. Charness, P. J. Feltovich, & R. R. Hoffman (Eds.), The Cambridge handbook of expertise and expert performance (pp. 21-30). New York: Cambridge University Press.

- de Jong, T. (2010). Cognitive load theory, educational research, and instructional design: Some food for thought. Instructional Science, 38, 105-134.

- Federal Ministry of Education and Research. (2017). Education and Research in Figures 2017. Retrieved from https://www.datenportal.bmbf.de/portal/en/education_and_research_in_figures_2017.pdf

- Grau-Valldosera, J., & Minguillón, J. (2014). Rethinking dropout in online higher education: The case of the Universitat Oberta de Catalunya. The International Review of Research in Open and Distributed Learning, 15(1), 290-308.

- Griese, B., Lehmann, M., & Roesken-Winter, B. (2015). Refining questionnaire-based assessment of STEM students’ learning strategies. International Journal of STEM Education, 2, Article 12.

- Hailikari, T., Katajavuori, N., & Lindblom-Ylänne, S. (2008). The relevance of prior knowledge in learning and instructional design. American Journal of Pharmaceutical Education, 72(5), 113.

- Hart, C. (2012). Factors associated with student persistence in an online program of study: A review of the literature. Journal of Interactive Online Learning, 11(1), 19-42.

- Hauser, R., Paul, R., & Bradley, J. (2012). Computer self-efficacy, anxiety, and learning in online versus face to face medium. Journal of Information Technology Education: Research, 11, 141-154.

- Ivankova, N. V., & Stick, S. L. (2007). Students’ persistence in a distributed doctoral program in educational leadership in higher education: A mixed methods study. Research in Higher Education, 48, 93-135.

- Jiang, Y., Paquette, L., Baker, R. S., & Clarke-Midura, J. (2015). Comparing novice and experienced students in virtual performance assessments. In O. C. Santos, J. G. Boticario, C. Romero, M. Pechenizkiy, A. Merceron, P. Mitros, J. M. Luna, C. Mihaescu, P. Moreno, A. Hershkovitz, S. Ventura, & M. Desmarais (Eds.), Proceedings of the 8th International Conference on Educational Data Mining (pp. 136-143). Madrid, Spain: International Educational Data Mining Society.

- Kinnebrew, J. S., Loretz, K. M., & Biswas, G. (2013). A contextualized, differential sequence mining method to derive students’ learning behavior patterns. Journal of Educational Data Mining, 5, 190-219.

- Knestrick, J. M., Wilkinson, M. R., Pellathy, T. P., Lange-Kessler, J., Katz, R., & Compton, P. (2016). Predictors of retention of students in an online nurse practitioner program. The Journal for Nurse Practitioners, 12, 635-640.

- Laird, T. F. N., Seifert, T. A., Pascarella, E. T., Mayhew, M. J., & Blaich, C. F. (2014). Deeply affecting first-year students' thinking: Deep approaches to learning and three dimensions of cognitive development. The Journal of Higher Education, 85(3), 402-432.

- Lee, S. W.-Y. (2013). Investigating students’ learning approaches, perceptions of online discussions, and students' online and academic performance. Computers & Education, 68, 345-352.

- Lee, Y., & Choi, J. (2011). A review of online course dropout research: Implications for practice and future research. Educational Technology Research and Development, 59(5), 593-618.

- Lee, Y., Choi, J., & Kim, T. (2013). Discriminating factors between completers of and dropouts from online learning courses. British Journal of Educational Technology, 44(2), 328-337.

- Legault, L. (2016). Intrinsic and extrinsic motivation. In V. Zeigler-Hill & T. K. Shackelford (Eds.), Encyclopedia of personality and individual differences. Cham: Springer.

- Leone, J. (2011). Intrinsic Motivation Inventory (IMI). Retrieved from http://selfdeterminationtheory.org/intrinsic-motivation-inventory/

- Lile, A. (2011). Analyzing e-Learning systems using educational data mining techniques. Mediterranean Journal of Social Sciences, 2, 403-419.

- Magno, C. (2016). The effect size of self-regulation and prior knowledge on students performance in an open high school program. The International Journal of Research and Review, 11, 39-48.

- Pintrich, P. R. (1999). The role of motivation in promoting and sustaining self-regulated learning. International Journal of Educational Research, 31, 459-470.

- Richter, T., Naumann, J., & Horz, H. (2010). Eine revidierte Fassung des Inventars zur Computerbildung (INCOBI-R) [A revised version of the Computer Literacy Inventory (INCOBI-R)]. Zeitschrift für Pädagogische Psychologie, 24(1), 23-37.

- Rowe, F. A., & Rafferty, J. A. (2013). Instructional design interventions for supporting self-regulated learning: Enhancing academic outcomes in postsecondary e-learning environments. MERLOT Journal of Online Learning and Teaching, 9, 590-601.

- Ryan, R. M., & Deci, E. L. (2000). Intrinsic and extrinsic motivations: Classic definitions and new directions. Contemporary Educational Psychology, 25, 54-67.

- Saadé, R. G., & Kira, D. (2009). Computer anxiety in e-learning: The effect of computer self-efficacy. Journal of Information Technology Education, 8, 177-191.

- Song, H. S., Kalet, A. L., & Plass, J. L. (2016). Interplay of prior knowledge, self-regulation and motivation in complex multimedia learning environments. Journal of Computer Assisted Learning, 32, 31-50.

- Stiller, K. D. (2019). Fostering learning via pictorial access to on-screen text. Journal of Educational Multimedia and Hypermedia, 27, 239-260.

- Stiller, K. D., & Bachmaier, R. (2017a). Dropout in an online training for in-service teachers. In A. Volungeviciene & A. Szűcs (Eds.), EDEN 2017 Annual Conference. Diversity matters! Conference proceedings (pp. 177-185). Budapest, Hungary: European Distance and E-Learning Network (EDEN).

- Stiller, K. D., & Bachmaier, R. (2017b). Dropout in an online training for trainee teachers. European Journal of Open, Distance and E-Learning, 20(1), 80-95.

- Stiller, K. D., & Bachmaier, R. (2019). Using study times for identifying types of learners in a distance training for trainee teachers. Turkish Online Journal of Distance Education, 20(2), 21-45.

- Tsai, M.-J., & Tsai, C.-C. (2003). Student computer achievement, attitude and anxiety: The role of learning strategies. Journal of Educational Computing Research, 28, 47-61.

- Usta, E. (2011). The examination of online self-regulated learning skills in web-based learning environments in terms of different variables. The Turkish Online Journal of Educational Technology, 10, 278-286.

- van Gog, T., Ericsson, K., Rikers, R., & Paas, F. (2005). Instructional design for advanced learners: Establishing connections between the theoretical frameworks of cognitive load and deliberate practice. Educational Technology Research and Development, 53(3), 73-81.

- Waschull, S. B. (2005). Predicting success in online psychology courses: Self-discipline and motivation. Teaching of Psychology, 32(3), 190-192.

- Weinstein, C. E., & Mayer, R. E. (1986). The teaching of learning strategies. In M. C. Wittrock (Ed.), Handbook of research on teaching (3rd ed., pp. 315-327). New York, NY: Macmillan.

- Weinstein, C. E., Acee, T. W., & Jung, J. (2011). Self-regulation and learning strategies. New Directions for Teaching and Learning, 2011(126), 45-53.

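- Wild, K.-P., & Schiefele, U. (1994). Lernstrategien im Studium: Ergebnisse zur Faktorenstruktur und Reliabilität eines neuen Fragebogens [Learning strategies in university studies: Results on the factor structure and reliability of a new questionnaire]. Zeitschrift für Differentielle und Diagnostische Psychologie, 15, 185-200.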

- Wladis, C., Hachey, A. C., & Conway, K. M. (2014). The representation of minority, female, and nontraditional STEM majors in the online environment at community colleges: A nationally representative study. Community College Review, 43, 142-164.

- Wong, S. L., Ibrahim, N., & Ayub, A. F. M. (2012). Learning strategies as correlates of computer attitudes: A case study among Malaysian secondary school students. International Journal of Social Science and Humanity, 2, 123-126.

- Yukselturk, E., & Bulut, S. (2007). Predictors for student success in an online course. Educational Technology & Society, 10(2), 71-83.

- Yurdugül, H., & Menzi Çetin, N. (2015). Investigation of the relationship between learning process and learning outcomes in e-learning environments. Eurasian Journal of Educational Research, 59, 57-74.

- Zimmerman, B. J. (2000). Attaining self-regulation: A social cognitive perspective. In M. Boekaerts, P. R. Pintrich, & M. Zeidner (Eds.), Handbook of self-regulation (pp. 13-39). San Diego, CA: Academic Press.