Usability testing of e-learning content as used in two learning management systems

Prof. Dr. Matjaž Debevc [matjaz.debevc@uni-mb.si]

University of Maribor, Faculty of Electrical Engineering and Computer Science

Smetanova ulica 17,

2000 Maribor, Slovenia

Julija Lapuh Bele [julija.bele@b2.eu]

B2 d.o.o.

Tržaška cesta 42,

1000 Ljubljana, Slovenia

Abstract

English

As Information and Communication Technology (ICT) becomes more widely used, it is increasingly important to develop methods of preparing and evaluating user-friendly e-learning content. Such preparation and evaluation should not only assess content with respect to traditional learning strategies, but should also aim to increase accessibility. The article presents development and design solutions for ECDL e-learning modules in the framework of two Learning Management Systems (LMS), eCampus and Moodle. The e-learning content in Moodle was further adapted for persons with special needs. The central part of the article presents potential methods for testing the usability of e-learning content. This is followed by a more precise assessment of the appropriateness of SUMI (Software Usability Measurement Inventory) usability evaluation. The concluding part answers the question of whether SUMI evaluation can be used as a global and limited evaluation method to quickly identify possible system weaknesses.

Hungarian

Az információs és kommunikációs technológiák (IT) egyre szélesebb körű használata következtében mind fontosabbá válik a felhasználóbarát e-tanulási környezetek előkészítési és értékelési modelljeinek a kidolgozása. Ennek az előkészítésnek és értékelésnek nemcsak a tartalmat kell értékelnie a hagyományos tanulási stratégiák tükrében, hanem a hozzáférésen is javítania kell. Ez a tanulmány fejlesztési és megjelenési javaslatokat kínál ECDL e-tanulásmoduloknak két Tanulásmenedzsment Rendszer, az eCampus és a Moodle keretein belül. A Moodle keretein belül az e-tanulás elveit speciális szükségletű emberekre szabták tovább. A cikk fő része az e-tanulási környezet használhatóságára hoz potenciális tesztelési mintákat. Ezt követően a SUMI (Szoftver Felhasználhatóság Mérés Eszköztár) használhatóságértékelés megfelelőségének pontosabb értékelése következik. A konklúzióban arra a kérésre keres választ, hogy a SUMI értékelés használható-e globális és korlátozott értékelési eszközként a rendszer gyenge pontjainak gyors megtalálásához.

Key words

Distance Learning, Learning Management Systems, Open Systems, Accessibility, Usability Testing.

Introduction

With the rapid development of computers and related technology, Information and Communication Technology (ICT) has become widely used in the field of education. Research has shown that the use of ICT can help to create courses that are easier and more effective for learners, especially if ICT is integrated into the educational process [3]. Other research, including Parlangeli et al. [22], Kulik [15] and Bosco [4], has compared traditional learning strategies with multimedia approaches and shown that the use of ICT leads to greater learning efficiency. Chou and Liu [6] and Schulz et al. [23] have furthermore shown that distance learning, with the help of a web-based virtual learning environment, is both effective and useful.

However, some authors have also reported critical reactions to e-learning and e‑learning content. In their work, Theofanos and Redish [26] have stated that in order to truly meet the needs of all users, it is not enough to have guidelines that are based on technology; it is also necessary to understand the users and how they work with their tools. Design of e-learning content is therefore not a simple process. This content should be carefully planned, designed and evaluated in order to ensure efficient and simple use.

Living in an information society exposes us to new products, new services and new usage areas that all require new knowledge. The deficit of such knowledge creates a "digital gap": a distance in knowledge between those who have an education in information technology and those who lack one. Because of the ever-increasing importance of information technology in everyday life, there is a danger that the "digital gap" will continue to widen. Therefore, Slovenia has introduced the program PHARE 2003, entitled "Improvement of computer literacy of unemployed adults." Its main goal is to raise the qualification level of available human resources in Slovenia (the program involves about 10,000 unemployed adults). At the same time, the program helps to guarantee equal access to information society services and to the advantages they offer. The objective of the program is to reduce the "digital gap" by ensuring or increasing the basic computer skills of unemployed adults and creating workers who can adapt to the latest developments in the ICT field. This in turn increases the workers' employability. It will enable unemployed adults to apply for jobs they have not previously considered because those jobs required basic computer skills, as is the case with more and more positions.

Slovenia has put into practice a possible education solution that tackles the issues described in this paper by developing web-based virtual learning environments. It enables the education of a greater number of participants than a classical education solution would involve.

Making computers, computer accessories and the Internet, with its information and communication services, available to unemployed persons does not in itself guarantee that they will be able to use these tools actively in their working environments and thereby raise their overall education level and improve their self-image. The majority of these persons are unemployed for three reasons: a low level of education, difficulty in adapting to new working environments and difficulty in communicating with the help of ICT.

How should e-learning content be designed to be user-friendly and thereby best serve unemployed persons when using ICT and methods of distance learning in the education process? There is also the question of the best form of distance learning to use with unemployed adults in order to allow them to successfully study and communicate.

At the Maribor Adult Education Centre (AEC Maribor), with the help of the University of Maribor's Faculty of Electrical Engineering and Computer Science (UM FERI), and at the company B2 d.o.o., we have educated more than 900 participants (at least 600 through B2 d.o.o. alone) in 8 months. This took place within the scope of the project PHARE 2003, which aimed to improve the computer literacy of unemployed adults, and with the help of appropriately adapted Learning Management Systems.

This paper therefore contains the following points:

- An explanation of the selection of the design for the e-learning content and of how this system works. As an illustration of the education system, we present an e-learning course designed for completing one of the seven ECDL (European Computer Driving Licence) modules. The complete program contains 7 modules and the participant has to pass 7 exams in order to obtain full ECDL certification.

- An explanation of how we have considered the needs and demands of persons with special needs (with particular focus on the deaf and hard of hearing) and consequently enabled them to access this e-learning content.

- A presentation of possible evaluation tools put forth by other studies, e.g. Squires and Preece [24], Ardito et al. [2], Dringus and Cohen [11], Holzinger [12] and Achtemeier [1], and their adaptation to the evaluation of e-learning content. This includes the evaluation of the user-friendliness of the e-learning content, the usefulness of the whole system with regard to persons with special needs, the accessibility of the content, and the effectiveness of the entire course. In this contribution, special emphasis is placed on examining SUMI evaluation as a possible tool for evaluating the user-friendliness of e-learning content.

Education and Learning Management Systems for ECDL modules

As mentioned before, the basis of the e-learning content used in our project of the education of unemployed adults was a curriculum that had been defined by the ECDL Foundation [5]. The ECDL Foundation is the global governing body of the world's leading end-user computer skills certification programme. The European Computer Driving Licence (ECDL) or International Computer Driving Licence (ICDL) are the global standards in end-user computer skills, offering candidates an internationally-recognised certificate that is globally supported by governments, computer societies, international organisations and commercial corporations.

The syllabus published on the ECDL Foundation Web site divides the course into seven modules:

- Module 1 – Concepts of Information Technology

- Module 2 – Using the Computer and Managing Files (e.g. Windows)

- Module 3 – Word Processing (e.g. MS Word)

- Module 4 – Spreadsheets (e.g. MS Excel)

- Module 5 – Database (e.g. MS Access)

- Module 6 – Presentation (e.g. MS PowerPoint)

- Module 7 – Information and Communication

The educational process took place in the form of courses, following the method of blended learning [19]. At the beginning, students met their tutors and had their first training in a computer room. Further training consisted of individual work through e-learning content at their homes or at special public ICT-equipped centres. For motivational reasons, we set up a schedule defining the time-frame when a tutor was available through the system for additional help and possible questions. The web-based course was designed so that knowledge-evaluation took place at the beginning, middle and end of the learning process. An indication of success lay in the number of passed ECDL exams. These took place at the company B2 d.o.o., which is an approved ECDL Test Centre that has been authorised locally by ECDL Licenses, based on the ECDL Foundation quality assurance standards.

Teaching was provided with the help of appropriately adapted Learning Management Systems. The company B2 d.o.o. designed its own system, eCampus, and its e-learning content for all 7 modules is approved by the ECDL Foundation.

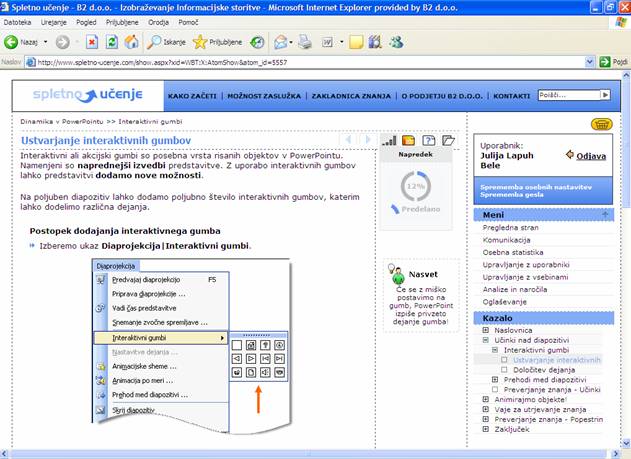

Figure 1 shows an example of an eCampus-system learning page. The learning portal is visually divided into three parts. The main part is designed for the actual learning content and interactive questions. The bar on its right has in its upper part a graph showing the student's progress, an interactive notebook for the student's personal notes, a method of direct communication with the tutor, and a working folder for on-line file storage. The lower part of the bar is reserved for comments on the learning content. The right side of the page consists of an application menu on the top and an index at the bottom showing learning content, such as the recommended learning path.

The eCampus-portal is a web-based application, designed for the creation of web-based learning content for different kinds of courses and was intended to carry out web-based and blended learning. eCampus enables the use of web-based pedagogical tools as scaffolds for self-regulated learning (SRL), such as collaborative and communication tools, content creation and delivery tools, administrative tools and assessment tools. Key SRL processes are implemented, such as goal setting, self-monitoring, self-evaluating, task strategies, help seeking and time management, that affect students' achievements and motivational beliefs. The learning portal is individually adjusted to the user, making personalisation possible. Learning with the help of eCampus is an active process where the strategy of "learning by doing" leads the student to cognitively approach and work through the e-learning content and to create links between experiences and both new and existing knowledge.

Figure 1. Example of a B2 eCampus web page for ECDL courses (LMS system).

The learning content is interactive, multimedia-based, and adjusted to the different learning styles of students (VAKT - visual, auditory, kinesthetic, tactile) [7]. Their activity is furthermore stimulated by simultaneous on-line questions, where the student receives feedback on his or her understanding of the subject and may be given tips for further learning. eCampus enables an interactive on-line evaluation of knowledge before, during, and after the learning process.

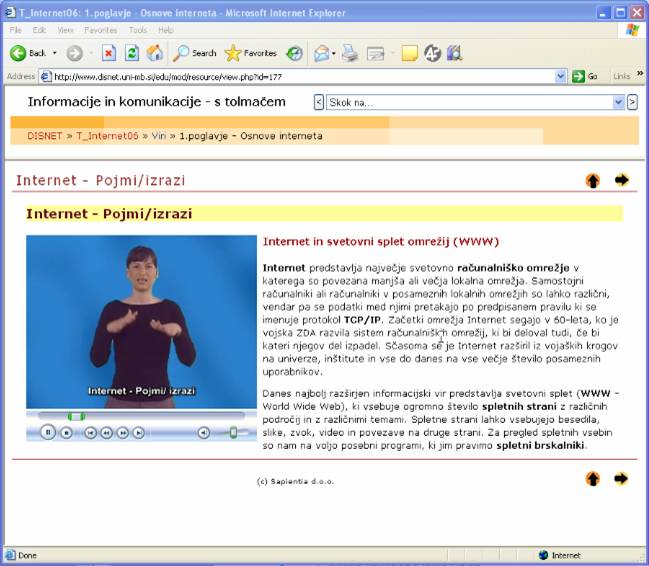

The University of Maribor's Faculty of Electrical Engineering and Computer Science (UM FERI) based its education portal on the open system Moodle [10]. E-learning content was designed for persons with special needs, specifically for the deaf and hard of hearing or visually handicapped. The University of Maribor chose Moodle for its user-friendliness, since it supports setup on different learning management systems, and particularly because it embodies the most vital pedagogical principles. At the same time, its developers are trying to take into consideration various suggestions aimed at increasing the accessibility of e-content for persons with special needs. Figure 2 shows an example of a Moodle web-page design with an interpreter within the module.

Due to the special requirements of persons with special needs, web-pages with e-learning content are designed to be more user-friendly and simple in navigation and content. The sign language interpreter on the left of the page reproduces the shortened content on the right. The upper and lower corners on the right contain navigational tools for the page. For knowledge evaluation, the content contains closed-type questions in sign language which, in the case of incorrect answers, provide additional information. Other elements, such as forums, information, and communication, are implemented in Moodle's characteristic way.

During the development of the e-learning content, we formed several recommendations as a result of our user analyses and our previous work with less-experienced ICT users in different projects [8]:

- Enable quality streaming video of the sign-language interpreter, which can be viewed promptly and without unnecessary waiting time.

- Make the user interface simple and clear, without a multitude of additional options.

- Make the user interface as visual as possible without graphically exaggerating it.

- Keep navigation tools in a particular and clearly-defined position.

- Avoid unnecessary pop-up windows, since they can confuse users that have just started to work with computers.

- Simplify the language and explanations used - we advise the use of uncomplicated technical expressions.

Figure 2. Example of the ECDL e-learning content based on Moodle, designed for deaf and hard-of-hearing persons.

Possibilities for testing the usability of e-learning content

The interesting question is how successful the two systems are going to be in usability testing. Usability is an important factor for the evaluation of e-learning technologies and systems. Despite recent advances of electronic technologies in e-learning, a consolidated evaluation methodology for e-learning applications is not available [2]. For the user of any interactive software, usability is one of the major aspects of the system. The question of what usability entails has several answers, as the term itself has many meanings and definitions.

According to ISO 9241-11 [13], usability may be defined as the extent to which a product (such as software) can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use. Therefore, in computer science there is a strong relationship between quality and usability.

Usability is a quality attribute that assesses how easy user interfaces are to use. The word "usability" also refers to methods for improving the ease-of-use during the design process.

Nielsen [18] defined usability with five quality components:

- Learnability: How easy is it for users to accomplish basic tasks the first time they encounter the design?

- Efficiency: Once users have learned the design, how quickly can they perform tasks?

- Memorability: When users return to the design after a period of not using it, how easily can they re-establish proficiency?

- Errors: How many errors do users make, how severe are these errors and how easily can they recover from the errors?

- Satisfaction: How pleasant is it to use the design?

There are many other important quality attributes. One of the most important is "utility" which refers to the design's functionality: does the system do what users need? Usability and utility are equally important since it matters little if something is easy to work on but does not do what we want it to do. Neither does it help if the system has the potential to perform a task but the user is unable to actualize this potential due to an inappropriate interface. To study design utility, the same user research methods can be used as for improving usability [17].

The term "effectiveness" could be used instead of "utility" since the Oxford English Dictionary [21] defines effective as "producing a desired or intended result". The other quality components, e.g. learnability, memorability and errors, have great impact on user satisfaction.

The need for usability has been recognized in web site design literature as a crucial quality when determining user satisfaction in such systems. Therefore it can be argued that the usability of e-learning applications can significantly affect learning [7].

There are several methods for studying usability. These methods include heuristic evaluation by Nielsen [17], SUMI (Software Usability Measurement Inventory) evaluation [14], automatic evaluation with tools (e.g. Bobby/WebXACT) [25] and field observation (Quick and Dirty evaluation) [12] (Table 1).

Table 1. Proposed evaluation methods for usability testing of e-learning content.

| | Heuristic evaluation | SUMI evaluation | Automatic evaluation with tools | Field observation (Quick and Dirty evaluation) |
| --- | --- | --- | --- | --- |
| Purpose | Usability testing | Usability testing | Accessibility testing with tools | Usability and accessibility testing |
| Project phase | Design | Final testing | Design | Design |
| Object | E-learning contents | E-learning contents | Code (HTML, XML ...) | LMS and e-learning contents together |
| Population | Experts | Users | Users | Users |

Having reviewed all four options shown in Table 1, we decided to choose the fastest method for testing our e-learning content that does not require a large number of end users. Following a check of all required activities and the number of end users needed for each of the four methods, the SUMI method best matched our situation.

SUMI evaluation is a consistent method for assessing the quality-of-use of software products. It is backed by an extensive reference database embedded in an effective analysis and report generation tool. SUMI has been hailed as the de facto industry standard questionnaire for analysing users' responses to software. It is a commercially available questionnaire for the assessment of the usability of software which has been developed, validated and standardised on an international basis. SUMI consists of 50 statements to which the user has to reply with "Agree", "Don't Know", or "Disagree". According to the SUMI guidelines, a minimum sample of 10 users per system is sufficient to obtain stable statistical results [14].
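The answer format of the questionnaire lends itself to a simple completeness check before any analysis. The following Python sketch is an illustration only: the names `check_sheet` and `tally` are hypothetical, and the code does not reproduce SUMI's scoring, which is carried out by the commercial analysis tool.

```python
from collections import Counter

ITEM_COUNT = 50  # number of statements in the questionnaire, as stated in the text
ALLOWED = ("Agree", "Don't Know", "Disagree")  # the three permitted replies


def check_sheet(answers):
    """Return a list of problems found in one respondent's answer sheet."""
    problems = []
    if len(answers) != ITEM_COUNT:
        problems.append(f"expected {ITEM_COUNT} answers, got {len(answers)}")
    for number, answer in enumerate(answers, start=1):
        if answer not in ALLOWED:
            problems.append(f"item {number}: unexpected answer {answer!r}")
    return problems


def tally(answers):
    """Count how often each permitted reply was given (illustrative only)."""
    counts = Counter(answers)
    return {option: counts.get(option, 0) for option in ALLOWED}


if __name__ == "__main__":
    # Hypothetical sheet: 30 agreements followed by 20 disagreements.
    sheet = ["Agree"] * 30 + ["Disagree"] * 20
    print(check_sheet(sheet))
    print(tally(sheet))
```

A sheet that passes this check is merely well-formed; whether it can be scored is decided by the SUMI analysis itself.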

The usability scales assessed by SUMI are:

- Efficiency (the user's feeling that the software is quick and economical)

- Affect (the user's emotional feeling that the software is stimulating and pleasant)

- Helpfulness (the user's perception that the software communicates in a helpful way)

- Control (the user's feeling that the software is responding in a normal and consistent way and assists him in the event of errors)

- Learnability (the ease with which the user becomes familiar with the software; whether there are tutorials, handbooks etc. or not)

Research

Methodology

Research design

We observed the usability of e-learning content used in the eCampus and Moodle LMSs. We decided to implement SUMI evaluation of e-content for both systems. Therefore we chose two groups of users to participate in the research.

Each of the evaluated groups studied using different systems (eCampus and Moodle) with different e-learning content designed within the scope of ECDL modules. Both sets of e-learning content had the same learning goals and were similarly designed.

Participants

The tested subjects were unemployed adults that had taken part in the education process performed within the framework of the Slovenian PHARE project.

Fifty-five participants took part in the study. We picked two samples: 19 participants from the company B2 and 36 participants from UM FERI. Both samples are in accordance with the SUMI requirement for the minimum number of participants, which is ten. Persons with special needs were not included in this test. To ensure that the two sets of content were as comparable as possible in the usability test, the tools for persons with special needs (deaf persons) were not included in Moodle.

The procedure

The evaluation was performed with the help of a SUMI questionnaire. Each group of users involved in the research had 3 evaluators.

In the first phase of usability testing, the users were asked to work with the system. The users had been learning the ECDL content for four weeks. After this phase, the users were asked to complete the modified SUMI questionnaire with the purpose of obtaining information about the usability of the e-learning content. The questionnaires were completed anonymously and no additional help was offered. We observed that the respondents did not talk to each other while completing the questionnaire and consequently did not influence each other's results.

Results and discussion

Results

The analysis of the SUMI data was performed using a dedicated software analysis package called SUMISCO. All of the questionnaires were completed correctly and were included in the final results. Table 2 presents the results of testing the ECDL e-learning content in eCampus and in Moodle in terms of the median and the upper and lower confidence limits for the global usability scale and each of the five usability sub-scales. From the table it is clear that both systems were successful overall from the viewpoint of usability (the positive limit in the graph is 50):

- the "global" scale is positive in both systems, while eCampus achieved visibly better results than the content that was provided by Moodle. eCampus shows a higher dispersion as a consequence of greater disagreement among the users, despite all answers being very positive.

- "efficiency" is very dispersed for eCampus and its lower part is near the limit. Unfortunately the situation is worse for Moodle and needs to be examined since it has limited the package's usefulness. Therefore, answers to questions regarding "efficiency" need to be evaluated in order to understand the reasons for the worse results.

- "affect" has proven to be the element with the best results for eCampus, as the answers are relatively alike, resulting in little dispersion. Likewise, Moodle recorded a positive attitude of users towards its content.

- "helpfulness" has good results for both systems and has little dispersion.

- "control" was positively evaluated in both systems, but it lies only slightly above the limit with little dispersion and therefore needs to be examined closely.

- "learnability" for eCampus is successful overall, but shows greater disagreement among users on this matter. The content in Moodle needs to be checked for problems since it is generally under the positive limit.

Table 2. SUMI evaluation of e-learning content in a) eCampus and b) Moodle.
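As a rough illustration of how results of this kind can be read, the following Python sketch summarises scale scores by their median and a distribution-free rank interval, and compares them with the reference value of 50. It is not the SUMISCO procedure, whose computation is not described here; the function names, the choice of interval and the sample scores are all assumptions made for illustration.

```python
import math
from statistics import median

REFERENCE_SCORE = 50   # the "positive limit" named in the text
MIN_RESPONDENTS = 10   # minimum sample size quoted from the SUMI guidelines


def median_interval(scores, z=1.96):
    """Return (lower, median, upper) using a rank-based interval for the median.

    Assumption: a normal approximation to the order-statistic interval is used;
    the actual SUMI analysis may compute its confidence limits differently.
    """
    ordered = sorted(scores)
    n = len(ordered)
    if n < MIN_RESPONDENTS:
        raise ValueError(f"at least {MIN_RESPONDENTS} respondents are needed")
    half_width = z * math.sqrt(n) / 2
    lower_rank = max(1, math.floor(n / 2 - half_width))
    upper_rank = min(n, math.ceil(1 + n / 2 + half_width))
    return ordered[lower_rank - 1], median(ordered), ordered[upper_rank - 1]


def flag_scales(scores_by_scale):
    """Label each scale by where its interval lies relative to the reference."""
    report = {}
    for scale, scores in scores_by_scale.items():
        low, mid, high = median_interval(scores)
        if mid < REFERENCE_SCORE:
            verdict = "below reference"
        elif low < REFERENCE_SCORE:
            verdict = "examine"
        else:
            verdict = "positive"
        report[scale] = (low, mid, high, verdict)
    return report


if __name__ == "__main__":
    # Hypothetical scores, not data from the study.
    demo = {
        "efficiency": [40, 45, 52, 55, 56, 58, 60, 61, 63, 70],
        "affect": list(range(55, 65)),
    }
    for scale, summary in flag_scales(demo).items():
        print(scale, summary)
```

A scale whose median is positive but whose lower limit falls under the reference is marked for closer examination, which mirrors the way the dispersed results are discussed in the list above.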

Regarding the comments of users, we have ascertained that most were dissatisfied with work-interruptions caused by the eCampus system. After studying this matter further, we found out that users had difficulties with the system automatically logging off following long periods of idleness. Despite the fact that this had been introduced as a security measure it proved to be an obstacle to the system's user-friendliness. After the problem had been fixed and the users were informed, results started to improve.
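The source does not describe how the log-off problem was fixed in eCampus. Purely as an illustration of one common way to soften an idle time-out, the Python sketch below adds a warning window before the session ends; the class name `IdlePolicy`, its fields and the time values are hypothetical and are not taken from eCampus.

```python
from dataclasses import dataclass


@dataclass
class IdlePolicy:
    timeout_s: float  # hypothetical: idle seconds after which the user is logged out
    warning_s: float  # hypothetical: length of the warning window before logout

    def state(self, last_activity: float, now: float) -> str:
        """Classify a session as 'active', 'warn' or 'logged_out'."""
        idle = now - last_activity
        if idle >= self.timeout_s:
            return "logged_out"
        if idle >= self.timeout_s - self.warning_s:
            return "warn"
        return "active"


if __name__ == "__main__":
    # Assumed values: 30-minute time-out with a 2-minute warning.
    policy = IdlePolicy(timeout_s=1800, warning_s=120)
    for idle_seconds in (100, 1700, 1800):
        print(idle_seconds, policy.state(last_activity=0, now=idle_seconds))
```

A learner reading a long page would then see a notice before the session ends and could continue, while the security purpose of the time-out is kept.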

Discussion

Although SUMI evaluation was not specifically designed for testing the usability of e-learning content, we wanted to examine whether it was suitable for this purpose and to propose some possible improvements. The main question was therefore whether the SUMI method could be used as an appropriate evaluation method for e-learning content.

SUMI was designed for assessing the usability of general software applications and was not specifically designed for web or educational applications.

Norman [19] asserts that a formative educational application should:

- be interactive and provide feedback,

- have specific goals,

- motivate, communicating a continuous sense of challenge,

- provide suitable tools, and

- avoid distractions and factors of nuisance interrupting the learning stream.

Issues of usability take on an added dimension in an educational environment. It is not sufficient to ensure that the e-learning system is simply usable; it must also be effective in meeting the instructor's pedagogical objectives [2].

Considering the ISO 9241-11 definition of usability, the major factors of usability are effectiveness, efficiency and user satisfaction, where effective means producing a desired or intended result. In the context of e-learning content, learning objectives could represent the desired results (e.g. specific learning goals). Therefore, the learning objectives should be considered in assessments of the usability of e-learning content. As SUMI does not consider the specific goals of e-learning applications, the inventory should be adjusted. Furthermore, assessing usability is not enough. Ardito et al. [2] pointed out that the evaluation of educational software must consider usability as well as accessibility and didactic effectiveness. Unfortunately, SUMI offers no means of assessing either of these factors.

A user's interaction with a learning environment can be a one-time event. It's important to point out that, unlike traditional software products, where users gradually learn how to work with the interface, an instructional interface has to be mastered by the user rather quickly, as it probably won't be used for an extended period of time [16].

The user should not be burdened with having to learn and remember complicated forms of interaction; manipulating educational software should not compromise the learning experience. In this sense the interface should place a low cognitive demand on the learner and functionality should be obvious. Symbols, icons and names should be intuitive within the context of the learning task [24].

Therefore the educational application, such as e-learning content, should be simple and intuitive to use in order to make an additional user manual unnecessary. The question of whether the software documentation was informative or not could be ambiguous since, in the case that no user manual is supplied, the learner might understand that the question is about the actual e-learning content and not the software itself.

One of the major factors for web-based applications is accessibility. At any rate, it is important to consider the equipment accessible to the average learner and the average Internet connection. One can develop exceptionally good e-learning content, which may however prove either useless or unattractive in the real world. Learners generally do not like to wait for sluggish computer responses. This has a negative effect on user satisfaction. The application has to be designed according to the average Internet connection. If the user has a slow or sluggish Internet connection, his or her answer to the question "This software responds too slowly to inputs" could be unjustifiably negative.

Misleading results might also arise from asking learners whether they would like to use the supplied software every day. The purpose of educational software is to let the learner master the subject as quickly as possible. E-learning content is usually short, and the learner does not want to study the same content every day. One of the major factors of usability is efficiency, yet the more efficient the software is, the sooner the learner finishes and the less reason there is to use it daily. Increasing the efficiency of the software would therefore lead to a negative response to this question, and so the question could be left out.

Conclusion

The article dealt with the applicability of usability testing methods in the evaluation of e-learning content designed for unemployed adults within the scope of the PHARE project in Slovenia. We have considered methods that could be used for usability testing and examined their suitability regarding the different demands of e-learning content users. In the presented cases we had, besides common users working with the eCampus system, persons with special needs (mainly deaf and hard-of-hearing persons). These users had specially adjusted e-learning content, which was designed with the open system Moodle.

To get a more accurate evaluation of possible methods of usability testing, we had to choose between heuristic evaluation, SUMI evaluation, automatic evaluation with tools (e.g. Bobby) and field observation. We opted for SUMI evaluation and applied it to both systems.

Taking SUMI usability testing into account, we can conclude that eCampus is more successful than the Moodle-system, particularly with respect to the categories of "efficiency" and "affect". This might indicate that the e-learning content in eCampus was better-designed than the content in the Moodle-system.

The use of SUMI evaluation has its limits, particularly due to some questions in the SUMI questionnaire that do not yield appropriate answers. Despite this, we have ascertained that SUMI can be used as a quick and simple tool for the global and general evaluation of e-learning content with respect to end users. It can be easily and quickly determined in which field ("efficiency", "affect", "helpfulness", "control" and "learnability") the system has been more or less successful. In the case that the graph shows that potential problems occur in a specific field, additional and more detailed evaluation is necessary, particularly for the specified problem area. This concentration of effort on problem areas can considerably shorten the development time of the system. The SUMI evaluation for the eCampus-system indicated a particular problem in the "control" field. A detailed examination followed and found that the system logged idle users out too soon. The problem was fixed immediately.

On the basis of our study, we can confirm that the SUMI method is suited for usability testing, but only for the quick and simple evaluation of specific usability factors. This enables quick usability testing of the complete system. Further evaluation, however, requires other methods that enable a more profound detection of errors in planning, e.g. heuristic evaluation or field observation.

Acknowledgement

This work has been performed in the framework of the European PHARE 2003 Programme titled "Improvement of computer literacy of unemployed adults", run in the year 2006. The national co-financier was the Ministry of Labour, Family and Social Affairs. Fundamental to the study was the close collaboration between the Maribor Adult Education Centre (AEC Maribor), which runs one project, and the company Sapientia, which helps with the design of e-learning systems for deaf and hard-of-hearing persons. The collaboration with Mateja Verlič and Petra Povalej from the University of Maribor's Faculty of Electrical Engineering and Computer Science was also of great importance due to their help with the development of the system for persons with special needs.

References

[1] Achtemeier, S. D.; Morris L. V., Finnegan C. L.: Considerations for developing evaluations of online courses, Journal of Asychronous Learning Networks (JALN), 7 (1), 2003.

[2] Ardito, C.; Costabile, M. F.; De Marsico, M.; Lanzilotti, R.; Levialdi, S.; Roselli, T.; Rossano, V.: An approach to usability evaluation of e-learning applications, Universal Access in the Information Society, Vol. 4, No. 3, 2006.

[3] Begoray J. A.: An introduction to hypermedia issues, systems and application areas, International Journal of Man-Machine studies, 33, pp. 121 – 147, 1990.

[4] Bosco J.: An analysis of evaluations of interactive video, Educational Technology, 25, pp. 7-16, 1986.

[5] Carpenter D.; Dolan D.; Leahy D.; Sherwood-Smith M.: ECDL/ICDL: a global computer literacy initiative, Proceedings of Conference on Educational Uses of Information and Communication Technologies (ICEUT), Beijing: Publishing House of Electronics Industry (PHEI), 2000.

[6] Chou S.W. and Liu C.: Learning effectiveness in a Web-based virtual learning environment: a learner control perspective, Journal of Computer Assisted Learning, 21 (1), 2005.

[7] Coffield, F.; Moseley, D.; Hall, E.; Ecclestone K.: Learning styles and pedagogy in post-16 learning. A systematic and critical review. London: Learning and Skills Research Centre, source: http://www.lsda.org.uk/files/PDF/1543.pdf , 2004.

[8] Costabile, M. F.; De Marsico, M.; Lanzilotti, R.; Plantamura, V. L., Roselli, T.; Rossano, V.: On the usability evaluation of e-learning applications, Proceedings oh the 38th Hawaii International Conference on System Science, 2005.

[9] Debevc M.; Zorič-Venuti M.; Peljhan Ž.; E-learning material planning and preparation, Report of the European project BITEMA (Bilingual Teaching Material For The Deaf by Means of ICT), source: http://www.bitema.uni-mb.si/Documents/PDF/E_learning_material_writing.pdf, Maribor, Slovenia, May, 2003.

[10] Dougiamas, M. and Taylor, P.: Moodle: Using Learning Communities to Create an Open Source Course Management System. In P. Kommers & G. Richards (Eds.), Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications 2003 (pp. 171-178). Chesapeake, VA: AACE.

[11] Dringus, L. P. and Cohen M. S.: An adaptable Usability Heuristic Checklist for Online Courses, Proceedings of the ASEE/IEEE Frontiers in Education Conference, October 19-22, 2005, Indianapolis, USA.

[12] Holzinger, A.: Usability Engineering Methods for Software Developers, Communication of the ACM, Vol. 48, No. 1, Jan. 2005, pp. 71-74.

[13] International Organisation for Standardisation: ISO 9241: Software Ergonomics Requirements for office work with visual display terminal (VDT), Geneva, Switzerland, 1998.

[14] Kirakowski J.; Corbett M.: SUMI: The Software Usability Measurement Inventory, British Journal of Educational Technology, 24 (3), 1993, pp. 210-212.

[15] Kulik C. C.; Kulik J. A.;, Shwalb B. J.: The effectiveness of computer applications: a meta-analysis, Journal of Research on Computing in Education, 27, pp. 48-61, 1994.

[16] Lohr., L.L.: Designing the instructional interface, Computers in Human Behaviour, 16, 1998 pp.161-182.

[17] Nielsen, J.: Usability 101: Introduction to Usability, Jakob Nielse's Alertbox, August 25, 2003, source: http://www.useit.com/alertbox/20030825.html.

[18] Nielsen, J.: Usability Engineering, Morgan Kaufmann, San Francisco, USA, 1994.

[19] Norman, D.: Things that make us smart: defending human attributes in the age of the machine. Perseus Publishing, Cambridge, MA, 1993.

[20] Osguthorpe, R. T.; Graham, C. R.: Blended Learning Environments: Definitions and Directions, Quarterly Review of Distance Education, 4 (3), pp. 227-33

[21] Oxford English Dictionary, http://www.askoxford.com, August, 2006.

[22] Parlangeli O.; Marchigiani E.;, Bagnara S.: Multimedia systems in distance education: effects of usability on learning. Interacting with Computers, 12, pp. 37-49, 1999.

[23] Schulz-Zander R.; Büchter A.; Dalmer R.: The role of ICT as a promoter of students' cooperation , Journal of Computer Assisted Learning, 18 (4), pp. 438, 2002.

[24] Squires, D. and Preece, J.: Predicting quality in educational software: Evaluating for learning, usability and the synergy between them, Interacting with Computers, 11 (5), pp. 467-483, 1999.

[25] WebXACT, source: http://webxact.watchfire.com/, August, 2006....

[26] Theofanos M.F.; Redish J.: Bridging the gap: between accessibility and usability, Interactions, Issue 6, Volume 10, pp. 36 – 51, 2003.