MOOC Replication Across Platforms: Considerations for Design Team Decision-making and Process

Rebecca M. Quintana [rebeccaq@umich.edu], Yuanru Tan [yuanru@umich.edu], University of Michigan, 500 S State St, Ann Arbor, MI 48109, United States of America

Abstract

As universities consider how Massive Open Online Courses can complement their public engagement strategies, the design space for learning at scale is expanding. Universities are now broadening their reach by offering the same MOOC on more than one course delivery platform because of the potential to reach larger audiences. Although this is a promising direction, relatively few universities have adopted this approach, and few guidelines exist about how to proceed. The work of replication is challenging because differences in platform features and affordances make creating a “near replica” of a MOOC a complex endeavor. This design case focuses on one design team’s decision to replicate a MOOC on a different course delivery platform. We describe associated challenges and identify approaches that allowed the design team to achieve their goal of maintaining pedagogical consistency from one platform to another. The case concludes with a set of lessons for designers of online learning experiences, including (a) key considerations to factor into decision-making about course selection for replication and (b) process-oriented guidelines to achieve desired pedagogical outcomes. Related work covers the mutual influence of technology and pedagogy and the roles and processes within MOOC design teams.

Keywords: design teams, decision making, work processes, educational technology, learning at scale

Introduction

With Massive Open Online Courses (MOOCs) growing in prominence and importance, universities are developing their portfolios of online courses at a rapid rate (Gorlinski, 2013). Contrary to the declaration that “MOOCs are dead” (Young, 2017), MOOCs continue to be a viable component of the public engagement strategies of institutions of higher education. Furthermore, universities are seeking new ways of expanding the reach of their portfolios, which includes considering how to reuse and repurpose content to allow courses to reach different audiences of learners. A recent approach has been to take a course that was designed for delivery on one platform, and replicate it for use on another platform, although few examples of this direction currently exist.

Although a promising approach for expanding reach, the replication process can be problematic because differences in platform features may shape the learning experience; a pedagogical design may be influenced by the affordances and limitations of the platform. Universities that create courses on more than one MOOC platform may make platform choices based on how well available features align with pedagogical goals. Once a platform has been chosen and pedagogical decisions have been made within its constraints, it can be challenging to disentangle curriculum designs from platform features.

To provide designers, instructors, and practitioners with a direct account of our own experience, we have taken inspiration from design cases (Lawson, 2004) to elucidate our MOOC replication process, given that we are an “early mover” in this space. A design case is “a description of a real artifact or experience that has been intentionally designed” (Boling, 2010; p.2). We center our description around the process rather than the product and endeavor to reify the “unique knowledge embedded in a known design” (Oxman, 1994; p.146). We focus on the decision-making and work process of one design team whose work was situated within a university’s MOOC production unit. Their task was to replicate the pedagogical design of a MOOC – originally developed for one platform – and create a version for use on a different MOOC platform. A central challenge of this case concerns the “fidelity of replication”; since MOOC platforms have similar, but not identical features, the design activity was a complex one, and required that designers give simultaneous consideration to pedagogical design goals and technical capabilities of the MOOC platforms. The task required that the design team consider how differences in platform features might alter the learner experience.

Given the differences in platform features, we recognize from the outset that it is not possible to produce an exact replica of a course. For this reason, we describe the outcome of the process as a “near replica,” rather than an “exact copy.” Our research questions examine the processes that institutions can undertake when preparing a course for deployment on more than one platform. To understand the experience of the design team that we studied, we interviewed four core participants and analyzed interview transcripts using an inductive approach. We also included original design documentation in our analysis, such as project notes, emails, memos, and other records. Specifically, we examine key considerations that design teams should take into account during the decision-making process (i.e., whether or not to replicate a MOOC) and how to address challenges that emerge during the replication process (i.e., process-oriented considerations). We aim to offer designers of technology-enhanced learning experiences an overview of the workflows used during a MOOC replication process and a set of “lessons learned,” with a view towards informing the work of those who might take on related design challenges. We conclude by identifying course features that make replication challenging, to offer further guidance to course designers and decision makers.

Design Context

MOOCs have risen rapidly to prominence in the educational technology landscape over the last few years (Liyanagunawardena, Adams, & Williams, 2013). MOOCs support connectivity at a large scale (massive), do not restrict enrollment (open), are run on digital platforms (online), and offer potential for exploring new methods of teaching and learning (course) (Liyanagunawardena et al., 2013). Two of the most prominent course delivery platforms in the marketplace are edX and Coursera (i.e., the platforms of interest for this case). Both platforms provide a set of tools that allow design teams to create, publish, and deliver the digital courses they produce. MOOC publishing tools allow course designers to sequence various instructional materials (e.g., videos, PDFs), create machine-graded quizzes, and develop activities that allow learners to interact with other learners, such as through peer-graded assignments and discussion forums (Conole, 2014). Some aspects of MOOC design mirror traditional face-to-face higher education courses, with videos replacing the live lecture components (Hood, Littlejohn, & Milligan, 2015). However, MOOCs are emerging as their own instructional form, with individual MOOCs taking on “various forms and shapes” (Major & Blackmon, 2016; p.21). It is therefore necessary for instructors and designers of MOOCs to be sensitive to design intentions and to carefully consider how these can be faithfully instantiated using platform authoring tools.

Expanded Design Space

Although MOOCs are a relatively new instructional form, they are quickly becoming part of a broader online educational landscape. Universities see the potential for MOOCs to promote institutional reputation, develop new revenue models, and provide new research opportunities (Borthwick, 2018). At the same time, MOOC platform providers are offering differentiated educational products, such as those that allow universities to create course “bundles” (e.g., Specializations on Coursera) and to offer microcredentials (e.g., MicroMasters programs on edX). In response, some universities are pursuing contractual agreements with multiple MOOC platforms, thereby expanding the design space. Although edX and Coursera are the two main MOOC providers, a number of other hosting platforms are growing in visibility, adding to the potential for institutions to expand their educational offerings across multiple venues. Given these changes to the MOOC landscape, with universities choosing to offer MOOCs as part of a series of courses and across multiple platforms, we expect this work to be a useful starting point for other designers who are just moving into this space.

Related Work

Technology and Pedagogy Considerations

In the context of developing technology-enhanced learning environments, technology and pedagogy are mutually influential, with platform functionality impacting pedagogy, and pedagogy pushing on the affordances of technology. By necessity, most MOOC instructors and design teams must work within the constraints and limitations of the course delivery platforms (Young, 2014). This requires a simultaneous consideration of pedagogical goals and technological capabilities. Lin and Cantoni (2018) described a MOOC team’s “bottom-up” approach to design which involved brainstorming how technical features could be leveraged to instantiate design and pedagogical goals. Najafi, Rolheiser, Harrison, and Håklev (2015) revealed how MOOC instructors strategically used available platform tools to assess learning, despite recognizing platform limitations. Additionally, approaches to content presentation may need to be reconsidered to fit the MOOC format, such as creating “bite-sized” presentation components (e.g., short videos) (Borthwick, 2018). However, some authors have argued that the functionality of MOOC delivery platforms can have the effect of inhibiting desired pedagogical outcomes. Head (2017) described her frustration when developing a creative writing MOOC, remarking that the platform functionality appeared to be arbitrary, with the result that pedagogical designs were compromised.

MOOC Design Teams and Processes

MOOC design teams have been described as “collaborative ecosystems” consisting of a variety of roles in addition to instructors, including learning experience designers, video designers, project managers, and teaching assistants (Zheng, Wisniewski, Rosson, & Carroll, 2016). Other core roles could include copyright specialists, marketing experts, and legal professionals (Doherty, Harbutt, & Sharma, 2015). Because MOOC design and delivery is a complex undertaking, it is necessary to plan carefully, develop systematic workflows, and clearly delineate roles (Doherty et al., 2015). Najafi et al. (2015) identified two effective planning and production strategies: a plan-ahead approach, where all components of the course were determined in advance before production began, and a production line approach, where one task was begun by a team member in one role, and the next task was continued by another team member in a different role. Lin and Cantoni (2018) outlined a linear process that included preparing materials, deciding on a pedagogical approach, discussing how platform features could impact design choices, and developing course assets.

The MOOC design literature largely focuses on cases where course teams have designed original MOOCs, such as those that are based on university-level courses or those that are created “from scratch.” Others have described efforts to improve on existing MOOCs, such as Borthwick (2018) who detailed a process with five iteration cycles, resulting in a new “spin off” course. Yet, because universities are only just starting to duplicate courses across multiple platforms, the literature provides little guidance for designers on how to approach this new kind of design challenge, that of replication.

Methods

Research Questions

In order to better understand the experience of the team involved in this design case, we formulated two research questions to guide our analysis. The first research question is, “What considerations should design teams take into account when making the decision to replicate a MOOC across platforms?” This question explores the key factors that influenced the decision-making process. The second research question is, “What challenges emerge – and how should they be addressed – when attempting to develop a ‘near replica’ of a MOOC, across platforms?” This question explores effective processes for achieving desired results.

Materials, Participants, and Approach to Analysis

This case addresses these questions in the context of a MOOC focusing on ethics in the field of data science. This MOOC is designed and offered by a large university in the Midwestern United States. It explores the impact of data science practices on society through a series of case study videos, assessments, and discussion prompts. There is also a peer-reviewed assignment at the conclusion of the course that asks learners to write an original case study related to data science ethics, based on their own experience or local context. The course was first launched on the edX platform and had achieved high enrollment numbers (n = 9,235) prior to its launch on the Coursera platform 18 months later (i.e., at the conclusion of the MOOC replication process).

The authors conducted individual interviews with design team members who were involved in decision-making about replication and implementation of the edX version for Coursera: the service unit’s lawyer, a learning experience designer (also the director of the design team), a course builder, and a project manager. Each interview session was approximately 30 minutes and followed a prepared protocol (customized for each role), consisting of 10-14 open-ended questions.

Questions from the interview protocol included:

- Explain how you decided to replicate a MOOC, creating a second version for another platform.

- Why did you choose this course? What differences have you noticed about each platform?

- What concerns do you have, if any, about the decision to replicate the course?

- What challenges have you faced in implementation?

- What did you learn from this experience?

- How do you think the outcome of this experience can inform process?

The authors transcribed and analyzed the interviews, using an inductive approach (Creswell, 2015). This involved categorizing the data into segments relevant to the research questions, collapsing the segments to identify emergent themes, and matching the themes to research questions. The authors collected original design documentation, such as notes, emails, and spreadsheets, and compared these records to the recollections of participants.

Findings

We distilled insights from interviews into five questions that can be adopted by decision makers who need heuristics for making strategic choices about which courses to choose for replication. Each question is followed by details from the case that elaborate on themes introduced by the questions.

Decision-Making Considerations

Do the characteristics of the course make it suitable for replication?

The design team determined that the data science MOOC did not have any “unusual” design elements that would require extraordinary efforts to reproduce (e.g., drag and drop activities and other customized interactive elements). The learning experience designer for the course stated that there was nothing about the edX version of the course that could not be implemented on Coursera. Additionally, the course was relatively short and would therefore require few design decisions and minimal input from the course instructor, who was a busy faculty member.

Are there available qualified personnel and resources?

The course builder, who had comprehensive technical knowledge of both platforms, was new to the design team and had available bandwidth to work on the project. The director of the design team was keen to leverage the role of course builder and take on a “backburner” project. The director had originally worked on the course as a learning experience designer and possessed important historical knowledge about original design intent. The team was sufficiently resourced, enabling them to use a contract lawyer to negotiate agreements with both platforms, and to hire a second course assistant to support the daily operations of the MOOC.

Are all key stakeholders in agreement?

After the design team had determined that the MOOC was a good candidate for replication, the director contacted the instructor of the course and asked for his input. The instructor was highly supportive because he wanted to see the course reach as broad an audience as possible. Another faculty member also expressed a desire to see the data science course exist on the Coursera platform, because he wanted to be able to point learners from his own programming specialization (on Coursera) to a complementary resource on the same platform. The key stakeholders believed that the Coursera learner audience would consist of learners with an interest in programming, and thus by hosting the data science ethics course on Coursera, they could connect to a larger audience.

Does the replication activity promise to improve design processes?

The design team hoped that the replication process and subsequent reflection on its outcomes would allow them to make evidence-based decisions about platform choice, rather than relying on their intuition. They hoped to gain a deeper understanding of which attributes of a course were consequential to making a platform decision (e.g., subject matter, planned activities). Team members believed that the work might promote understanding about when in the design process decisions about platform should be made, resulting in more clearly defined and consistent processes. The director of the design team commented, “You know we talk about trying to design with neither platform in mind. We always end up having to make a decision. But [the outcome of this work] could perhaps make it so that it’s clear to us at what point when we need to make a decision.”

Does the replication activity promise to provide a “return on investment”?

For the design team, “return on investment” was articulated not in terms of financial gain but through other perceived benefits. Team members expressed pride in the course, citing characteristics such as the unique documentary film-making style that they had developed for the project, and a desire to see the course reach a larger audience. Another potential benefit they cited was that the unit could capitalize on research opportunities that would emerge from running the same course on two different platforms. The director of the design team raised the following questions: “Would we see additional enrollments on Coursera? Would we see different levels of activity in discussion forums? Also, does Coursera reach a different audience [than] edX?”

Process-Oriented Guidelines

Next, we offer process-oriented guidelines for designers to use after a decision has been made to proceed with course replication. Each guideline is followed by a description of the approaches that the design team took to address challenges that arose.

Determine the nature of replication work from the outset

Once the decision to replicate the MOOC was made, the design team needed to decide whether to use the process as an opportunity for course refinement. Because the original course instructor did not want changes, the design team decided to maintain consistency with the original course. They chose to use this exercise as an opportunity to learn more about course replication, with a view towards scoping future work and improving future processes. The team also decided to keep the course title identical on both platforms as a signal to potential learners that the courses were indeed the same. Although iteration was not pursued in this project, the course builder reflected that this kind of work could become an opportunity to iterate, and had the potential to become “a completely new course experience,” such as through the addition of content, modification of structure, and use of platform-specific features.

Prepare all materials and establish workflows in advance

The course builder conducted an audit of the original course, looking for potential “trouble spots”. He reviewed all course materials and activities (e.g., videos and quizzes) and determined that there were no edX-specific references that would seem out of place in Coursera. He located original course files, such as videos, to ensure that the highest quality files were available to upload. When everything was in place, he displayed both platforms side-by-side, and systematically began developing the MOOC components on the new platform.

Seek understanding of the original pedagogical design intent

The data science MOOC was originally designed for the edX platform (i.e., it was not designed in a platform agnostic way). The project manager explained that the design team selected edX because the university had just secured a contract with the platform, and they were eager to try it out. For this reason, the course was designed to suit the structure of the edX platform and to take advantage of its features. The project manager remarked that because the instructor had wanted to “deemphasize the lectures, edX worked really well originally. You could draw more attention to the case studies and the forums, than to the lectures. Whereas on Coursera I feel like the lectures are what are more front and center.” However, when replicating the MOOC for Coursera, decisions needed to be made about how to structure content and emphasize important aspects of the learning design.

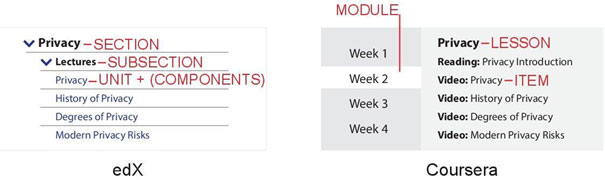

To prepare for the replication process, the course builder reviewed original design documentation and consulted with the learning experience designer and project manager to gain their perspective about original design intent. The course builder explained that the primary challenge was to account for structural differences across the platforms: “I think of this translation work in terms of structure because that’s the main difference between the two platforms. edX offers much more flexibility in terms of the structure. There’s basically four kind of levels of structure on edX and there’s three on Coursera.” These differences meant that structural decisions needed to be made before the course could be built on a second platform. See Figure 1 for a depiction of the structural differences between edX and Coursera. This experience revealed the need for consistent documentation practices, regardless of platform choice, and led to the development of new design templates (e.g., a spreadsheet that details course components and structure).

Figure 1. (L) The four levels of structure in edX are represented, with only three visible from this view. A learner would only see individual components (e.g., videos, discussion prompts) when they have selected that unit (i.e., drilled down). (R) The three levels of structure on Coursera are shown, with all levels viewable at the surface level.

Consider and resolve structural differences across platforms

A key consideration that emerged concerned the eight case study videos in the MOOC, which were intended to be authentic examples of how data science practices can impact society. The team determined that the case studies were a central feature of the course, and that the videos should be spotlighted to the greatest extent possible. The nested structure of the edX platform had made it possible to “surface” the case studies by placing them at higher nesting levels. The linear structure of the Coursera platform would make the case studies less visible because every item is shown at the same level. The course builder therefore needed to add titles to differentiate case study videos from lecture videos. Another structural consideration was the way in which content was organized. In the edX version, each case study was situated within its own “module.” However, if this structure were replicated exactly on the Coursera platform, each case study would occupy its own “week” (Coursera’s default structural unit), giving learners the impression that the course was longer than it was. The course builder decided to reconfigure the content for Coursera into four weeks, with multiple case studies grouped within a week.
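To make the regrouping concrete, the following is a minimal Python sketch of collapsing a four-level outline into a three-level one. It is an illustration under stated assumptions, not the course builder’s actual procedure: the level names (section, subsection, unit, component for edX; week, lesson, item for Coursera), the classes `EdxSection` and `CourseraWeek`, and the function `to_coursera` are all hypothetical, and the “Case Study:” prefix merely stands in for the added titles described above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class EdxSection:
    """Hypothetical model of the four edX levels: section > subsection > unit > component."""
    title: str
    is_case_study: bool
    # subsection title -> list of units; each unit is a list of component titles
    subsections: dict[str, list[list[str]]] = field(default_factory=dict)


@dataclass
class CourseraWeek:
    """Hypothetical model of the three Coursera levels: week > lesson > item."""
    title: str
    lessons: dict[str, list[str]] = field(default_factory=dict)


def to_coursera(sections: list[EdxSection], n_weeks: int) -> list[CourseraWeek]:
    """Group several edX sections into each week instead of one section per week."""
    weeks = [CourseraWeek(f"Week {i + 1}") for i in range(n_weeks)]
    per_week = -(-len(sections) // n_weeks)  # ceiling division
    for idx, section in enumerate(sections):
        week = weeks[idx // per_week]
        for sub_title, units in section.subsections.items():
            # Collapse the unit level: every component becomes a flat item.
            items = [component for unit in units for component in unit]
            if section.is_case_study:
                # Stand-in for the extra titles that flag case study videos.
                items = [f"Case Study: {item}" for item in items]
            week.lessons[f"{section.title}: {sub_title}"] = items
    return weeks


# Eight case-study sections regrouped into four weeks, two per week.
sections = [EdxSection(f"Case {i + 1}", True, {"Video": [["Documentary"]]}) for i in range(8)]
weeks = to_coursera(sections, n_weeks=4)
```

The sketch assigns sections to weeks in order; a real regrouping would depend on the team’s judgment about which case studies belong together.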

Leverage platform features to instantiate pedagogical design

The Coursera platform offers predefined fields for learning designers that edX does not, such as module descriptions, learning objectives, and welcome messages. Because the course had been originally developed for the edX platform, the content for those fields had not been developed. The design team chose to create this content themselves, basing it on their knowledge of the course, and then asked the instructor for feedback before publishing it. The team felt it was necessary to create this content, because without it, the course would appear incomplete. As part of the replication process they also considered additional modifications; the course builder commented that he had started looking at the course analytics to see if there were any significant drop off points in video viewership. “We were thinking about adding ‘in video quiz’ questions, another platform feature that we do not have on edX.” In the end the team decided not to make this change, possibly because it seemed to be outside of the scope of the replication project.

Discussion

Design heuristics are best used when customized for use in a local context, rather than applied wholesale. In our design case, the answer to each question that we posed (designed to help decision-makers be strategic about the course(s) that they choose to replicate) was “yes”. In our example, the decision to proceed with replication of a given course was straightforward. However, in other cases, design teams might discover that the answer is “no” to one or more of these questions. In that case, design teams would have to prioritize which questions were most relevant to their context, and seek understanding of where dependencies lie. For example, if the answer to the first question of suitability for replication is “no” (i.e., it would not be a straightforward process and many workarounds would be required), then the answer to the second question about available personnel and resources becomes even more salient. Design teams might also need to come to consensus about whether a “yes” response to certain questions is negotiable. For instance, some design teams might insist that all key stakeholders are in agreement about a decision to proceed (i.e., in response to the third question). Finally, design teams would need to decide for themselves whether it is their goal to improve their design processes (i.e., question four) and whether “return on investment” is a desired outcome for the team.

Similarly, process-oriented guidelines are most effective when they are applied to a specific context. In this design case, the design team chose to create a near replica of a course. However, fidelity to the original course design may not be the desired outcome in all situations. Under a different set of circumstances, a design team might choose to use the process as an opportunity for course improvement and iteration. The nature of the replication work needs to be determined on a project-by-project basis. Further, if a team decides that “near replication” is not their goal and that they would like to capitalize on the opportunity for iteration, some of the guidelines we propose here may become more salient, while others may become less so. For instance, “seeking understanding of original design intent” may be less important than evaluating the efficacy of the current design and looking for ways to improve it. Regardless of whether or not replication is the goal, design teams will need to create a plan and prepare the materials in advance, similar to the “plan-ahead approach” articulated by Najafi et al. (2015). Likewise, design teams will have to consider the implications of structural differences across platforms, regardless of whether or not replication is a design goal. If an instructor wants to emphasize a particular course component or element, it will be important to understand how platform architecture serves to support or inhibit that design choice.

This design case also raises questions about whether it is advisable, or even possible, to design a course in a platform-agnostic manner. If a course is designed to be deployed on multiple platforms from the outset, one wonders whether the distinct benefits of each platform would be used to their best advantage. A related concern is that courses could become more “homogeneous” in their design, and the variety that characterizes MOOCs as an instructional form (Major & Blackmon, 2016) could be lost. Conversely, a benefit of designing for multiple platforms could be that the strengths of one platform inform design practices for another platform (e.g., publishing learning outcomes on edX).

Further Considerations

Our design case focused on an instance of a course whose features made it an ideal candidate for replication. However, course design teams will find that not all courses are well-suited for replication. In what follows, we identify two challenge areas: (a) customized course structure and (b) activity or assessment designs that require platform-specific features. We provide two examples of when we have resisted replication for each challenge area. Our goal is to alert instructional teams to characteristics within courses that make replication difficult and to offer insight into how replication in these instances could compromise the learning experience.

Customized course structure

As we have already described, MOOC course delivery platforms follow either a linear or nested structure and these structural differences can create some challenges for designers. Yet even with these structural differences, the underlying assumption is that learners will progress through the MOOC in a linear fashion. Although learners are free to progress through the course in any order they choose (unless items are locked), MOOC user interfaces emphasize this linearity, using checkmarks, progress bars, and color changes to indicate progress. However, some course designers may desire to create a structure that allows learners to define their own pathway through the course, thereby avoiding linearity. This is often the case if course goals require learners to tailor the course experience to meet desired personal and professional outcomes. We offer two examples of courses that are designed to permit learner choice through the creation of a customized course structure.

In the first example, a MOOC about gameful learning, the instructional team desired to create an experience that would echo the topic of the course. In gameful courses, content and assessment structures are designed to better support learner autonomy and promote engagement (Aguilar, Holman, & Fishman, 2018). One design approach that allows instructors to instantiate a gameful design is that of “branched structures,” which essentially allow learners to navigate their own pathway through the course and choose assignments to build up their grade. In the case of the gameful learning MOOC, the course consisted of three core modules which all learners were expected to complete. There were also five additional modules, and learners were required to choose and complete two of them to earn a certificate. The course design team utilized the edX platform for two reasons: (a) the edX grading structure was more conducive to their gameful course design goals, and (b) the platform allowed developers greater customization than other platforms. With respect to grading structure, edX required that learners achieve a passing grade for the course as a whole, meaning that, if the grading scheme was set up correctly, learners could select and complete assignments in order to pass the course. Coursera, on the other hand, required that learners achieve a passing grade on every assignment, making it challenging to create a grading structure that supports learner choice. With respect to customization, the edX platform permitted the gameful learning MOOC developers to use JavaScript to create an interactive course map. This map provided learners with a high-level view of all module options, and allowed learners to progress through course modules in a non-linear manner.
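The difference between the two grading rules can be illustrated with a simplified Python sketch of the rules as characterized in this case. It does not reproduce either platform’s actual grading implementation: the point values, the 80-point cutoff, the 0.8 per-assignment threshold, and the function names `passes_course_level` and `passes_every_assignment` are all hypothetical.

```python
from __future__ import annotations

# Hypothetical point scheme (illustration only): three core modules worth
# 20 points each and five optional modules worth 10 points each.
POINTS = {**{f"core{i}": 20 for i in range(1, 4)},
          **{f"opt{i}": 10 for i in range(1, 6)}}


def passes_course_level(scores: dict[str, float], points: dict[str, int], cutoff: float) -> bool:
    """Course-level rule: the weighted total across all assignments must reach the cutoff.
    Unattempted assignments simply contribute zero points."""
    earned = sum(pts * scores.get(name, 0.0) for name, pts in points.items())
    return earned >= cutoff


def passes_every_assignment(scores: dict[str, float], points: dict[str, int], threshold: float) -> bool:
    """Per-assignment rule: each graded assignment must individually be passed."""
    return all(scores.get(name, 0.0) >= threshold for name in points)


# A learner who completes all three core modules and chooses two optional ones.
learner = {"core1": 1.0, "core2": 1.0, "core3": 1.0, "opt2": 1.0, "opt4": 1.0}
print(passes_course_level(learner, POINTS, cutoff=80))          # True: 60 + 20 = 80 points
print(passes_every_assignment(learner, POINTS, threshold=0.8))  # False: opt1, opt3, opt5 unattempted
```

Under the course-level rule, any two optional modules suffice, which is what a branched, choice-based design needs; under the per-assignment rule, every unchosen module counts against the learner.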

In the second example, a MOOC about preventing injury in children and teens, the instructional team wanted to provide a comprehensive set of resources for medical students. However, they also wanted to make these resources available to non-medical audiences, such as parents and teachers, without overwhelming them. Following the example of the gameful learning MOOC, the instructional team created an interactive map that allowed learners to navigate through the course according to their specific interests. The map depicted a tree, with 12 topics displayed on variously colored leaves. This format meant learners could engage with only those topics that would advance their personal and professional goals. Both the gameful learning MOOC and the childhood injury prevention MOOC would be challenging to replicate on a different platform (such as Coursera) if that platform did not allow the degree of grading flexibility and customization that was possible on edX.

Activity or assessment designs that require platform-specific features

As course designers identify the activities and assessments that will best support learners in meeting course goals, they should also identify which platform features best enable those activities and assessments. If desirable features are available on one platform and not another, this could be a critical factor in decision-making. In the following two examples, we elaborate on instances where the availability of features on one platform, and not another, led to a clear platform decision. In both, the mode of interaction instantiated through the activities and assessments was specific to the instructional domain: programming and anatomy, respectively.

In the first example, a Python programming MOOC series, course instructors wanted to develop assessments that involved coding practice and code submission. They chose Coursera because it offered an Integrated Development Environment (IDE). This platform feature allowed learners to complete and submit coding activities and programming assessments within the MOOC platform itself, without needing to install and use external tools. All programming-related assessments were embedded in the IDE (i.e., Jupyter Notebook), and learners received immediate feedback from the course auto-grader, which the course instructors designed. This seamless and responsive interaction would not have been possible if learners had to use various external tools and complete assessments outside the platform environment. Consequently, replicating the Python programming MOOC series on a platform without an IDE would be very challenging. Course designers would be required to completely rethink activity and assessment design and would need to grapple with questions such as: What are the logistics for learners to complete and submit assessments? What are the mechanisms for learners to receive feedback on their coding assignments?

In the second example, an anatomy MOOC series, the instructional team assembled a vast array of multimedia materials (e.g., photographs, detailed drawings, 3D models) to teach human anatomy. A major task within course assessments was labeling anatomical images. This was accomplished through drag-and-drop quizzes, in which learners matched terms to their corresponding positions on the human body. The drag-and-drop format would be difficult to replicate using another approach, such as traditional multiple-choice questions, which would create a cumbersome assessment experience for the learner. In both of these examples, it was important to maintain awareness of the signature pedagogy (Shulman, 2005) and mode of interaction that would be typical or expected in that domain. Although careful resource management might overcome the characteristics that make a course less than ideal for replication, course designers should nevertheless be aware of how these obstacles could affect the learner experience before deciding to invest time and resources in replication.

Conclusion and Next Steps

The key considerations and process-oriented guidelines presented in this case were developed from a single course and design context. The data science MOOC was straightforward in structure; a more complicated course might reveal additional challenges and considerations. To create a more complete description of considerations for replication work, additional design cases should be examined. Indeed, at the time of writing, we are seeing a growing number of replicated courses and specializations in our work context. In addition, this case examined replication in one direction only, from edX to Coursera. Had the direction been reversed, additional considerations might have emerged. Future work will continue to explore when in the design process a decision about platform choice should be made and will investigate the experiences of other design teams doing replication work in the MOOC space.

References

- Aguilar, S. J., Holman, C., & Fishman, B. J. (2018). Game-inspired design: Empirical evidence in support of gameful learning environments. Games and Culture, 13(1), 44-70.

- Boling, E. (2010). The need for design cases: Disseminating design knowledge. International Journal of Designs for Learning, 1(1), 2-8.

- Borthwick, K. (2018). What our MOOC did next: embedding, exploiting and extending an existing MOOC to fit strategic purposes and priorities. In M. Orsini-Jones & S. Simon (Eds.), Flipping the blend through MOOCs, MALL and OIL – new directions in CALL (pp. 17-23). Voillans, FR: Research-publishing.net.

- Conole, G. (2014). A new classification schema for MOOCs. The International Journal for Innovation and Quality in Learning, 2(3), 65-77.

- Creswell, J. W. (2015). Educational research: Planning, conducting, and evaluating quantitative and qualitative research. Boston, MA: Pearson.

- Doherty, I., Harbutt, D., & Sharma, N. (2015). Designing and developing a MOOC. Medical Science Educator, 25(2), 177-181.

- Gorlinski, V. (2013, October 31). MOOCs: The fastest-growing curriculum for higher education. Encyclopaedia Britannica [blog post]. Retrieved from https://www.britannica.com/topic/MOOCs-The-Fastest-Growing-Curriculum-for-Higher-Education-1952890

- Head, K. (2017). Disrupt This!: MOOCs and Promises of Technology. Hanover, NH: University Press of New England.

- Hood, N., Littlejohn, A., & Milligan, C. (2015). Context counts: How learners’ contexts influence learning in a MOOC. Computers & Education, 91, 83-91.

- Lawson, B. (2004). Schemata, gambits and precedent: Some factors in design expertise. Design Studies, 25(5), 443-457.

- Lin, J., & Cantoni, L. (2018). Decision, implementation, and confirmation: Experiences of instructors behind tourism and hospitality MOOCs. The International Review of Research in Open and Distributed Learning, 19(1).

- Liyanagunawardena, T. R., Adams, A. A., & Williams, S. A. (2013). MOOCs: A systematic study of the published literature 2008-2012. The International Review of Research in Open and Distributed Learning, 14(3), 202-227.

- Major, C. H., & Blackmon, S. J. (2016). Massive open online courses: Variations on a new instructional form. New Directions for Institutional Research, 2015(167), 11-25.

- Najafi, H., Rolheiser, C., Harrison, L., & Håklev, S. (2015). University of Toronto instructors’ experiences with developing MOOCs. The International Review of Research in Open and Distributed Learning, 16(3).

- Oxman, R. E. (1994). Precedents in design: A computational model for the organization of precedent knowledge. Design Studies, 15(2), 141-157.

- Shulman, L. S. (2005). Signature pedagogies in the professions. Daedalus, 134(3), 52-59.

- Young, H. N. (2014). Putting the U in MOOCs: The usability of course design. In S. D. Krause & C. Lowe (Eds.), Invasion of the MOOC (pp. 182-194). Anderson, SC: Parlor Press.

- Young, J. (2017, October 12). Udacity official declares MOOCs ‘Dead’ (though the company still offers them). EdSurge [blog post]. Retrieved from https://www.edsurge.com/news/2017-10-12-udacity-official-declares-moocs-dead-though-the-company-still-offers-them

- Zheng, S., Wisniewski, P., Rosson, M. B., & Carroll, J. M. (2016). Ask the instructors: Motivations and challenges of teaching massive open online courses. Proceedings of the 19th ACM Conference on Computer-Supported Cooperative Work & Social Computing, 206-221. ACM.