Peer Training Assessment within Virtual Learning Environments

Carlos Manuel Pacheco Cortés [carlos.pacheco@redudg.udg.mx], Elba Patricia Alatorre Rojo [patricia.alatorre@redudg.udg.mx], Adriana Margit Pacheco Cortés [adriana.pacheco@redudg.udg.mx], University of Guadalajara (Mexico) [http://www.udg.mx/en], Av. La Paz 2453 Arcos Vallarta 44170. Guadalajara, Jalisco, Mexico

Abstract

This contribution focuses on the assessment (evaluation) of peer training among in-service teachers within a virtual learning environment (VLE). The aim was to identify and interpret participants’ perceptions of the acceptance, usefulness and benefits of online peer learning assessment, in order to offer proposals for the design and implementation of such assessment. A limitation of our contribution is the sample size, which restricts the generalization of the outcomes to wider contexts. The topic was addressed from a conceptual framework of assessment for learning combined with VLE-based learning. The implications concern online peer learning assessment, its benefits, and improvements to its design and implementation. Our contribution lies in the field of educational models and procedures, both theoretical and pedagogical, and of learning assessment in distance education.

Abstract in Spanish (English translation)

The objective of this research was to analyse teachers’ perceptions of the usefulness and benefits of peer assessment in the learning process, in order to identify the acceptance of this type of assessment. The research approach was quantitative, with a non-experimental descriptive method; a survey instrument was applied, with a Cronbach’s Alpha reliability index of .807 (high level). The findings include proposals for the design and implementation of peer assessment in virtual settings. The results include the acceptance of peer assessment in virtual environments, its benefits, and the improvement of its design and application. The topic of this study is peer assessment; it lies in the area of educational, theoretical and pedagogical models and processes, and of learning assessment in virtual environments.

Key words: learning assessment strategies, peer learning, student participation, virtual learning environments

Introduction

The current teaching-learning paradigm implies a shift toward increasingly active learner participation. Assessment accompanies every activity, and to talk of assessment in the teaching-learning process is to examine the specific activities that lead to the improvement of that process. Assessment (evaluation) takes several forms: expert evaluation, self-evaluation, peer (equals’) evaluation and co-evaluation; depending on its purpose and timing, it can also be diagnostic, formative, summative or final.

Efforts to improve peer learning assessment among academics working in public institutions of higher education have been made, for instance, at the University of Guadalajara, Mexico, through open training programmes for in-service teaching staff. PICASA (Programa Institucional de Capacitación y Superación Académica) was the programme implemented for this purpose during the 1990s and 2000s; it was replaced in 2015 by PROFOCAD (Programa de Formación, Actualización y Capacitación Docente). Vera-Cazorla (2014) defined peer assessment as the process through which groups of individuals evaluate their equals (colleagues); this is the conception adopted here.

This contribution examines trainees’ perceptions of peer (equals’) assessment in virtual learning environments. A VLE (López-Rayón, Escalera, & Ledezma, 2002; Chan-Nuñez, 2004) is the set of synchronous and asynchronous spaces of interaction in which the teaching-learning process takes place, based on a curriculum and supported by a learning management system.

Setting the context

Since a 1994 restructuring, the University of Guadalajara (UDG) has consisted of six thematic faculty-centres in the Metropolitan Area of Guadalajara, capital of the State of Jalisco, Mexico. Its network also comprises nine regional (multidisciplinary) faculty-centres; following the Mexican model of upper-secondary education, a sub-network of 66 public high schools, 88 modules and 31 regional extensions; and, since 2005, a Virtual University System (UDG, 2017:01). The main functions of this public institution of higher education are instruction (the training of professionals); scientific research; dissemination (educational and cultural, e.g. popular science); and extension services, such as news broadcasting, an employee health programme, community health services, sports activities and cultural events (UDG, 2016).

The teaching staff members who participated in this study are affiliated with the faculty-centre of exact sciences and engineering (CUCEI, in Guadalajara), whose functions are carried out across twelve departments grouped into three divisions: basic sciences, engineering, and electronics and computing. According to the third activity report (2015-2016) of CUCEI’s rector, its academic personnel totalled 1,305 teaching staff members: 545 full-time lecturers, 26 part-time lecturers, 646 per-course academics and 88 technician academics (Monzón, 2016). Several in-service training courses and workshops were offered, in which 400 of them participated; these mainly focused on updating specific disciplinary topics. Monzón (2016) also reported that academics usually participate in ICT-based training programmes, in training for curricular courses taught in English, and in training related to didactic tasks.

The in-service training programme PROFOCAD is offered to all academics of the University of Guadalajara. It consists of courses and workshops in a blended modality, e.g. “Training based on problem solving or project oriented”, in which CUCEI academics enrolled voluntarily during the 2016B inter-semester period. Five groups were scheduled on different dates and at different times; only two of them were included in the analyses for this study (n=45). Given the role of the training and updating of academics in education, it was relevant to establish whether peer training assessment is justified with these participants.

Justification

Simpson and Clifton (2016) analysed students’ perceptions of assessment processes, both peer-based and learning-based. These authors noted two difficulties with peer assessment: the quality of the reports produced by students in their subject group, some of whom did not demonstrate the competencies (Siiman, Pacheco-Cortés, & Pedaste, 2014) needed to make value judgments; and the variability of the judgments issued by fellow participants. Other studies have focused on the lack of reliability of participants’ value judgments. For the participants in the continuing-education workshop for the training and updating of academics studied here, both their perceptions of peer assessment and the reliability of their value judgments were unknown.

The goal here is therefore to identify trainees’ perceptions of peer training assessment in virtual learning environments, their acceptance of it, and whether it provides learning opportunities and is actually used for that purpose.

Research question

What are trainees’ perceptions of peer training assessment in virtual learning environments?

Specific questions

What perceptions do trainees have of the value and acceptance of such assessment?

What perceptions do trainees have of the usefulness and benefits of peer assessment in the learning process carried out in virtual learning environments?

Theoretical background

Nowadays, Information and Communication Technologies (ICT) make it possible to carry out a range of collaborative tasks in which distance and the constraints of synchronous interaction matter less and less. Including ICT in education has boosted distance (non-face-to-face) teaching-learning modalities, as well as blended and dynamic ones (Rodríguez-Fernández, Gutiérrez-Braojos, & Salmerón-Pérez, 2010). As a result, the offer of online programmes has expanded; however, critics point to the limited socialization in a virtual learning environment (VLE). Interaction in a VLE must be planned and built into the assignments (tasks) that learners need to complete to progress through the curriculum, such as participation in discussion forums, blogs or wikis, rather than the mere delivery of products.

Activities in a VLE serve both to support learners and to show evidence of goal achievement or competency development. A frequent activity requires learners to evaluate their peers’ products, which gives rise to peer (equals’) assessment; such activities do not always yield the results expected at the design stage because, most of the time, they are not carried out, either for lack of clear instructions or because learners reject them. In this contribution, we set out to reflect on what trainees think about peer assessment. Much is said about the evaluation of teacher performance or evaluation by experts; however, there is also evaluation among peers, which, besides being a learning strategy, supports instruction whenever it is appropriately designed. Our contribution draws on the concept of learning assessment and on the theoretical perspective of peer assessment, also referred to as equals’ assessment.

Fitzpatrick, Sanders, and Worthen (2012) stated that evaluation (assessment) is the identification, clarification and application of defensible criteria to determine the merit or worth of the evaluated object, in order to offer a diagnosis or issue a judgment. Hence, the purpose of assessing trainees’ perceptions is to offer a diagnosis by describing what is happening in a VLE used for the training and updating of university academics; that is, to identify perceptions about peer assessment and to provide the programme department with an evaluation report of those perceptions for decision-makers. For Fitzpatrick et al. (2012), the evaluator assumes many roles through several activities, including negotiating with groups and collecting, analysing and interpreting quantitative and qualitative information; the evaluator can act as an external expert in designing and conducting a study on behalf of a community, organization or institution, which in the case of this contribution means the fellow participants in the course and their assessor.

Another important aspect of assessment is defining the parameters and criteria to apply in concrete contexts, gathering and processing the relevant information and, finally, applying those parameters and criteria to obtain useful, efficient and meaningful information for decision-making. Fitzpatrick et al. (2012) stated that assessment involves procedures such as analysis, criticism, inspection, judgment, review, and the establishment of traits and measures (indicators), among the most important; this suggests that evaluators should regard these procedures as competencies to be mastered by any professional dedicated to this task.

Sánchez-Rodríguez, Ruiz-Palmero, and Sánchez-Rivas (2011) stated that peer assessment is the willingness to evaluate teammates and to be evaluated by them, since they possess a similar level of knowledge and competencies. Trainees compare their mates’ performance with their own, which contributes to their learning. Sanmartí (2007) noted that it helps learners to better detect their own difficulties and inconsistencies. A review of how peer assessment is conceptualized shows that, while Sánchez-Rodríguez et al. (2011) treat peer evaluation as a synonym of co-evaluation, Carrizosa-Prieto and Gallardo-Ballestero (2011) distinguish the two, since in co-evaluation the student collaborates with the teacher in the process.

Likewise, Sanmartí (2007) stated that co-evaluation, or co-regulation, is the contribution students make, together with the evaluation made by academics, to the prior process of self-evaluation or self-regulation, the true driver of meaningful learning. In peer evaluation, learners analyse, comment on and apply criteria to determine the value of the evaluated task: they appraise their mates’ participation, but not for the final individual grade. Co-evaluation, by contrast, is a collaboration with the teacher in regulating the process: the learner takes part in the assessor’s assessment, and the evaluating trainee’s judgment forms part of the assessment or grade that the teacher will issue.

For Carrizosa-Prieto and Gallardo-Ballestero (2011), learners can participate in the evaluation of teaching-learning processes in basically three ways: by reflecting, from their starting point, on the proposed contents, their own difficulties, their merits and their reasoning (self-evaluation); by evaluating their mates’ participation in collaborative assignments (peer or equals’ assessment); and by collaborating with the teacher in regulating the teaching-learning process (co-evaluation). Rodríguez-Gómez, Ibarra-Sáiz, and García-Jiménez (2013), on the other hand, distinguished three types of peer evaluation: peer ranking, in which each group member orders the other members from best to worst on one or several aspects; peer nomination, in which each learner names the best mate in each category; and peer rating, in which each learner grades the others on a scale.
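The three types of peer evaluation differ mainly in the kind of data each learner produces. The Python sketch below shows one possible way to collect and summarise such data for a small group. The group members, the category and the 1-to-5 scale are hypothetical, and the aggregation rules (mean position for ranking, counts for nomination, mean score for rating) are our illustrative assumptions, not procedures prescribed by Rodríguez-Gómez et al. (2013).

```python
from collections import Counter
from statistics import fmean

# Hypothetical data for a three-member group (A, B, C); nobody evaluates themselves.
# Peer ranking: each member orders the other members from best to worst.
rankings = {"A": ["B", "C"], "B": ["A", "C"], "C": ["A", "B"]}
# Peer nomination: each member names the best mate in a category.
nominations = {"A": {"leadership": "B"}, "B": {"leadership": "C"}, "C": {"leadership": "B"}}
# Peer rating: each member grades each mate on an assumed 1-to-5 scale.
ratings = {"A": {"B": 4, "C": 3}, "B": {"A": 5, "C": 3}, "C": {"A": 4, "B": 4}}


def mean_rank(rankings):
    """Summarise peer rankings as each mate's mean position (1 = best)."""
    positions = {}
    for order in rankings.values():
        for position, mate in enumerate(order, start=1):
            positions.setdefault(mate, []).append(position)
    return {mate: fmean(p) for mate, p in positions.items()}


def nomination_counts(nominations, category):
    """Count how many peers nominated each mate as best in `category`."""
    return Counter(choice[category] for choice in nominations.values())


def mean_rating(ratings):
    """Summarise peer ratings as each mate's mean scale score."""
    scores = {}
    for given in ratings.values():
        for mate, score in given.items():
            scores.setdefault(mate, []).append(score)
    return {mate: fmean(s) for mate, s in scores.items()}


print(mean_rank(rankings))                           # {'B': 1.5, 'C': 2.0, 'A': 1.0}
print(nomination_counts(nominations, "leadership"))  # Counter({'B': 2, 'C': 1})
print(mean_rating(ratings))                          # {'B': 4.0, 'C': 3.0, 'A': 4.5}
```

Ranking yields only an order, nomination only a winner per category, and rating a score per mate, which is why each one calls for a different summary.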

Segers and Dochy (2001), Prins, Sluijsmans, Kirschner, and Strijbos (2005), Liu and Carless (2006), and Gallego-Noche, Gómez-Ruiz, Ibarra-Saiz, and Rodríguez-Gómez (2014) found that the improvements learners perceive when carrying out peer assessment include a better learning process, since peer assessment helps to structure it; increased learning and performance; and the stimulation of critical thinking and deep learning. Peer assessment also becomes an incentive to improve group work and effort, and classmates’ involvement increases when the teacher shares the responsibility for evaluation with learners.

Literature review

According to Vera-Cazorla (2014), some researchers believe that the power relations between student and tutor must change for learners to achieve genuine self-regulation, while others argue that learners should be actively involved in building assessment criteria if they are to develop self-assessment competencies.

For Gallego-Noche et al. (2014), peer assessment is an evaluative strategy used to stimulate learning, i.e. it is learning-oriented; these authors pointed out that the three basic elements of learning-oriented assessment (active student participation, feed-forward and authentic assignments) foster participatory and collaborative evaluation processes that rely on a flexible, open and mutual understanding of knowledge. For Cano and Ion (2016), learning-oriented evaluation focuses on evaluation strategies that promote and maximize learners’ learning opportunities, rather than on certifying or validating those learners through summative assessment.

Likewise, Gallego-Noche et al. (2014) analysed peer assessment in higher education, focusing on two aspects: the characterization of this type of evaluation (individual; intragroup, taking place within a group; or intergroup, taking place between groups), and the difficulties learners face in implementing it (conceptual, institutional, relational, or related to the reliability and validity of this type of evaluation). Among the benefits of peer assessment for university students and academics, these authors identified: (a) improvement of learning procedures and products; (b) development of interpersonal strategies; (c) improvement of the capacity to issue judgments; and (d) development of specific professional competencies.

Peer (equals’) assessment (Gallego-Noche et al., 2014) is a specific type of collaborative learning in which learners appraise the learning process or product of their fellow learners, individually or as a group. Klenowski, Elwood, and Thomas (2004) pointed out that this type of assessment strengthens formative intent, fosters dialogue, and enriches interaction and the creation of shared meanings among classmates and even with teachers. Falchikov and Goldfinch (2000) conducted a meta-analysis which concluded that peer assessment can be used successfully in every discipline, field or level.

Another study (Vera-Cazorla, 2014) describes a project in which peer collaborative work was put into practice in a VLE. Its aim was to analyse formative peer assessment in a VLE as an instrument for improving opinion essays in an English class at the University of Las Palmas, Spain; of the 96 enrolled students, 30 participated voluntarily. The study found that formative assessment is used more than summative assessment in VLE-supported teaching, and more with postgraduate than with undergraduate students; the students’ writing improved, but the most interesting element is the qualitative analysis of the experience.

For instance, interaction in the VLE forums showed that the initial hypotheses were exceeded: the students went beyond the mechanical correction of essays and contributed substantially to their content. Participants also rated the experience of online peer assessment as enriching, and suggested: helping students to provide effective feedback through constructive criticism and descriptive examples; requiring students to justify their judgments, emphasizing that the main focus of co-evaluation is not the grade itself but useful information for their learning; training students to interpret feedback so that they can connect the comments received with the quality of their work; and using clear guidelines and monitoring how students apply them. Students can also be helped by asking them to compare their peers’ work against guidelines or success criteria.

Among the studies of students’ perceptions of peer feedback and learning assessment is that of Simpson and Clifton (2016), who stated that peer review was developed as a tool to help students develop peer-evaluation skills and to increase the quality of group analysis. They implemented it at master’s level in a course on Climate Change Policy, with a pre-test and a post-test to determine whether students found the process a rewarding learning opportunity. In the post-test, 87% of participants said that peer review was an advantage both in development and in data collection; the authors also noted that this assessment design (peer review) minimizes the possibility of plagiarism, and low levels of plagiarism were observed.

According to the assessor, the comments and feedback given by Simpson and Clifton’s students were valid and, overall, reliable; however, for the quality of the groups’ written reports, the observations showed greater variance, and reliability there was low. These authors recommended training learners to fill out the peer-review template, since this could lead to more reliable feedback. Consistent with the literature they reviewed, the review process focused mainly on how the content was presented, and the students showed a limited ability to judge the quality and critical analysis of their peers’ work. After receiving peer-review feedback, seven of the eight groups improved their reports by making the indicated changes.

Sánchez-Peralta and Poveda-Aguja (2015) investigated students’ perceptions of evaluation in higher education in traditional distance and virtual modalities. Among their results, students’ conceptions of learning assessment (its definition and purpose) were successfully contrasted. These authors pointed out that peer assessment works well when didactic mediation facilitates the integration of learning: it strengthens the achievement of objectives, each evaluative action being a key factor in teaching and learning, and learners value the results when they are aware of the assessment strategies being implemented.

Another study on peer assessment comes from Jeffery, Yankulov, Crerar, and Ritchie (2016), who examined how to achieve accurate peer assessment of written assignments (essays) in undergraduate major courses. The accuracy, reliability and validity of peer assessment, as psychometric qualities, are critical for its use as a supplement to the assessment made by the instructor. Jeffery et al. (2016) sought to determine which factors related to peer assessment most influence these psychometric measures, with a primary focus on accuracy, that is, how closely peer evaluations match those of an instructor.
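As a minimal sketch of what "how closely peer evaluations match those of an instructor" can mean in practice, the Python code below compares the mean peer score of each essay with the instructor's score using two common agreement measures: the mean absolute difference and Pearson's correlation. The choice of these measures, the function names and the scores are assumptions for illustration; they are not necessarily the metrics used by Jeffery et al. (2016).

```python
from math import sqrt
from statistics import fmean


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def peer_accuracy(peer_scores, instructor_scores):
    """Compare each essay's mean peer score with the instructor's score.

    Returns (mean absolute difference, Pearson r). Both are illustrative
    accuracy measures chosen for this sketch.
    """
    peer_means = [fmean(scores) for scores in peer_scores]
    mad = fmean(abs(p - i) for p, i in zip(peer_means, instructor_scores))
    return mad, pearson_r(peer_means, instructor_scores)


# Hypothetical scores (0-100) for four essays, each marked by two peers.
instructor = [80, 70, 90, 60]
peers = [[78, 82], [75, 69], [88, 90], [65, 59]]

mad, r = peer_accuracy(peers, instructor)
print(f"mean absolute difference = {mad:.2f}, r = {r:.3f}")
```

A small absolute difference indicates that peers grade at the instructor's level, while a high correlation indicates that they order the essays in the same way; the two can diverge, which is why the sketch reports both.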

Jeffery et al. (2016) examined and ranked the correlations of accuracy, reliability and validity with 17 quantitative and qualitative variables from three undergraduate courses that use peer review of written assignments; these analyses showed that, of all the factors analysed, the number of comments issued by the reviewer has the greatest influence on peer-review accuracy. The authors’ calculations suggest that each reviewer should issue at least six comments to achieve an accurate peer review, a figure similar to the amount of feedback an instructor typically gives. Effective training, prior experience and strong academic skills of reviewers may reduce this number.

Reinholz (2016), on the other hand, presented a model that describes how peer assessment supports self-assessment; this author stated that, although earlier research shows that peer evaluation promotes self-evaluation, the connection between the two has been underspecified. The model draws on theories of self-assessment to specify how learning takes place through peer assessment, and it is applied to three activity structures to analyse their potential to support learning and promote self-assessment. More broadly, the author pointed out that the model can be used to understand the learning achieved in a variety of peer-assessment activities: marking, ranking, commenting, conferencing and reviewing. This approach contrasts with most studies of peer assessment, which have focused on comparing instructors’ and peers’ marks rather than on learning opportunities.

Methodology

This contribution follows a non-experimental quantitative research design. The information-seeking strategy is descriptive, since only basic statistical analysis was performed. The research method consisted of administering a survey (a validated instrument with a good reliability index) with closed-ended questions on a four-point Likert ordinal scale, followed by statistical analysis and interpretation.

Research subjects

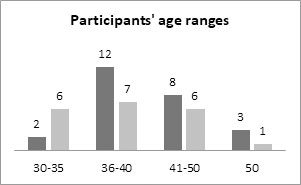

The research subjects (participants), forming an intentional non-probability sample, were academics voluntarily enrolled in a PROFOCAD workshop (open continuing training) held during an inter-semester period in a blended modality: opening and closing sessions in the classroom, plus two weeks of online interaction on the MOODLE platform. There were two subsamples: Group A (20 people) and Group B (25 people). The participants were higher-education teaching staff acting as trainees in the workshop: 25 men and 20 women, aged between 30 and 62 (Figure 1). All of them had previous experience of online work and of peer assessment in a virtual learning environment (VLE).

Research instrument

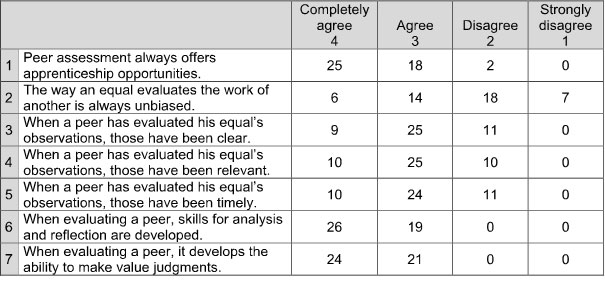

Constructing the research instrument is central to analysing a research problem, since data-collection tools must yield valid and reliable data that make it possible to answer the research questions and reach useful conclusions. The Likert-scale survey used to gather perceptions of peer assessment in a VLE was distributed as 50 questionnaires to be answered voluntarily, of which 45 were returned completed. The scale consisted of 16 propositions identifying trainees’ perceptions of: the acceptance of peer assessment (items 1, 2, 13 and 15); the value given to the observations a peer makes on their work (items 3, 4 and 5); the usefulness of peer assessment in the learning process (items 6, 7, 8, 9 and 10); and the benefits of this type of assessment (items 11, 12, 14 and 16). See Appendix A.
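The item-to-dimension mapping of the scale can be written down as a small data structure, which makes it easy to check that each of the 16 propositions belongs to exactly one dimension and to compute per-dimension scores. In this Python sketch, the numeric coding of the four Likert options (1 = strongly disagree to 4 = completely agree) and the example respondent are assumptions; the study does not report per-dimension scores.

```python
# Item-to-dimension mapping of the 16-proposition scale, as listed in the text.
DIMENSIONS = {
    "acceptance": [1, 2, 13, 15],
    "value_of_peer_observations": [3, 4, 5],
    "utility": [6, 7, 8, 9, 10],
    "benefits": [11, 12, 14, 16],
}

# Assumed numeric coding of the four Likert options (not stated in the study).
LIKERT = {"strongly disagree": 1, "disagree": 2, "agree": 3, "completely agree": 4}


def covers_all_items(dimensions, n_items=16):
    """True if every item 1..n_items belongs to exactly one dimension."""
    items = sorted(i for group in dimensions.values() for i in group)
    return items == list(range(1, n_items + 1))


def subscale_means(answers, dimensions=DIMENSIONS):
    """Mean coded score per dimension for one respondent.

    `answers` maps item number -> Likert label.
    """
    return {
        name: sum(LIKERT[answers[i]] for i in items) / len(items)
        for name, items in dimensions.items()
    }


# Hypothetical respondent who agrees with every item except item 2.
answers = {i: "agree" for i in range(1, 17)}
answers[2] = "disagree"

print(covers_all_items(DIMENSIONS))  # True
print(subscale_means(answers))
```

Keeping the mapping in one place means that any later change to the questionnaire only requires editing `DIMENSIONS`, and the coverage check catches items that are missing or assigned twice.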

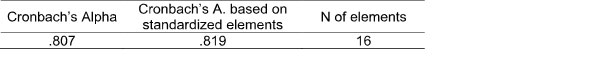

Measurement tools in research must meet two conditions: reliability and validity. According to Shrout and Lane (2012), reliability is the degree to which an instrument produces consistent and coherent results, while validity (Bernal, 2010) is the degree to which the instrument truly measures the variable it is intended to measure. The instrument’s reliability was estimated with Cronbach’s Alpha, an internal-consistency coefficient that depends on the number of items and on how strongly the items of the scale covary (its standardized form is a function of the average inter-item correlation); it reflects the precision and consistency of the results obtained when the instrument is applied under similar conditions.
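Cronbach’s Alpha is usually computed as alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items and each respondent’s total score is the sum of their item scores. The Python sketch below implements that formula with the standard library only. The 4 x 3 response matrix is hypothetical and is not the study’s data, which were processed in SPSS.

```python
from statistics import pvariance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents x items matrix of numeric scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Population variances are used throughout; using sample variances
    everywhere instead gives the same ratio, hence the same alpha.
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*rows))                      # one tuple of scores per item
    item_var_sum = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(r) for r in rows])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical 4-point Likert answers: 4 respondents x 3 items.
rows = [
    [4, 3, 4],
    [3, 3, 3],
    [2, 2, 1],
    [1, 2, 2],
]
print(round(cronbach_alpha(rows), 3))  # 0.889
```

When items move together across respondents, the variance of the totals grows faster than the sum of the item variances, which pushes alpha toward 1; if all items were identical, alpha would be exactly 1.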

We interpret the coefficient with the following scale: alpha >.9, excellent; >.8, good; >.7, acceptable; >.6, questionable; >.5, poor; and <.5, unacceptable. Cronbach’s Alpha Coefficient (CAC) normally ranges between 0 and 1 (it can be negative when items covary negatively), and the closer it is to 1, the higher the reliability of the instrument.
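The interpretation scale can be expressed as a simple lookup. In this Python sketch the thresholds are those listed for the scale, checked from the highest band down. Treating a value that falls exactly on a threshold (e.g. .8) as belonging to the lower band is our assumption, since the scale is stated with strict inequalities, and we use the common English label "questionable" for the band above .6.

```python
# Interpretation bands for Cronbach's alpha, checked from the highest down.
BANDS = [
    (0.9, "excellent"),
    (0.8, "good"),
    (0.7, "acceptable"),
    (0.6, "questionable"),
    (0.5, "poor"),
]


def interpret_alpha(alpha):
    """Return the verbal label for a Cronbach's alpha value."""
    for threshold, label in BANDS:
        if alpha > threshold:  # strict inequality, as in the scale
            return label
    return "unacceptable"


print(interpret_alpha(0.807))  # good
```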

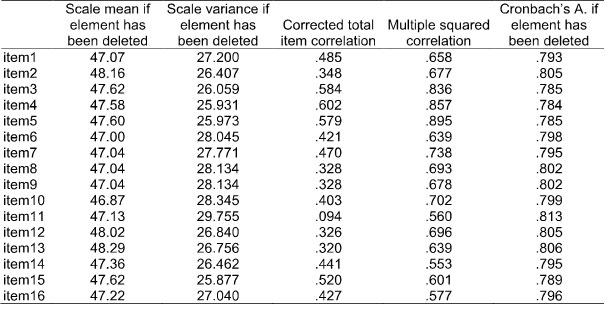

An example of the collected data is given in Appendix B. The data were processed with SPSS statistical software; the reliability index is shown in Table 1 and the item-total correlations in Table 2.

Table 1: Reliability statistics

Table 2: Item-total statistics

As shown in Table 1, Cronbach’s Alpha Coefficient (CAC) was .807, which indicates that the instrument has good reliability.

Content validity was established by consulting experts: a Doctor of Education and a Master in Technologies for Learning reviewed the instrument and made suggestions to improve it and to order its items.

Information analysis

Excel was used to analyse the information and obtain descriptive statistics, such as the frequencies of trainees’ responses; the results were plotted in the figures of this study and then interpreted.

Results

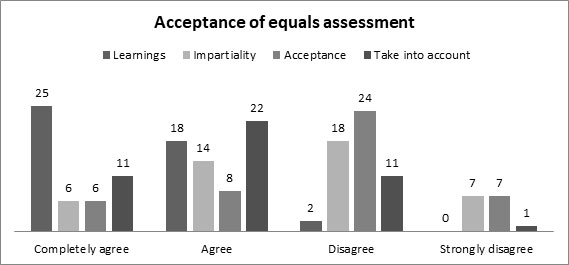

For the first specific question, on participants’ perceptions of the acceptance of peer assessment, 55.5% completely agree that evaluation between equals always offers learning opportunities (item 1), 40% agree and 4.4% disagree. On item 2, whether the way a peer evaluates another’s work is always impartial, 13.3% completely agree, 31.1% agree, 40% disagree and 15.5% strongly disagree.
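Because every percentage in this section is a share of the 45 returned questionnaires, each one corresponds to a whole number of respondents; for example, 55.5% corresponds to 25 of 45 (55.56%). The Python sketch below converts counts into percentages. The counts for item 1 are back-calculated from the reported shares, and truncating to one decimal instead of rounding is our inference from the reported figures, not a procedure stated in the study.

```python
N = 45  # questionnaires returned


def truncated_percentages(counts, n=N, decimals=1):
    """Percentage of respondents per option, truncated (not rounded).

    Integer arithmetic avoids floating-point surprises at the cut-off.
    """
    if sum(counts.values()) != n:
        raise ValueError("counts must add up to the number of respondents")
    scale = 10 ** decimals
    return {option: (100 * scale * c) // n / scale for option, c in counts.items()}


# Item 1 counts, back-calculated from the reported shares assuming n = 45.
item1 = {"completely agree": 25, "agree": 18, "disagree": 2}
shares = truncated_percentages(item1)
print(shares)                          # {'completely agree': 55.5, 'agree': 40.0, 'disagree': 4.4}
print(round(sum(shares.values()), 1))  # 99.9
```

Truncation explains why the reported shares for a single item can add up to slightly less than 100%.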

On item 13, which states that peer assessment is always well accepted, 13.3% completely agree, 17.7% agree, 53.3% disagree and 15.5% strongly disagree. On item 15, which states that when a peer evaluates another, the evaluated trainee always takes the peer’s observations and suggestions into account, 24.4% completely agree, 48.8% agree, 24.4% disagree and 2.2% strongly disagree (Figure 2).

Figure 2. Trainees’ perceptions of the acceptance of this type of evaluation

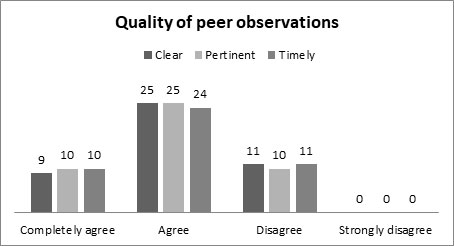

As for the value given to the observations made by a peer (Figure 3), item 3 states that such observations have been clear: 20% completely agree, 55.5% agree and 24.4% disagree. Item 4 states that the observations have been pertinent: 22.2% completely agree, 55.5% agree and 22.2% disagree. On item 5, whether the observations have been timely, 22.2% completely agree, 53.3% agree and 24.4% disagree.

Figure 3. Perceptions on the quality of observations by a peer

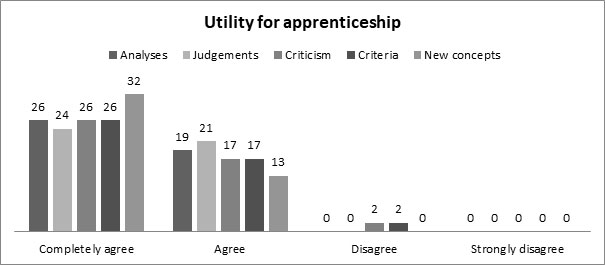

Regarding the usefulness of peer assessment for learning, on item 6, which affirms that peer assessment develops skills for analysis and reflection, 57.7% completely agree and 42.2% agree. On whether it develops the ability to issue value judgments (item 7), 53.3% completely agree and 46.6% agree. On whether it develops the habit of elaborating constructive criticism (item 8), 57.7% completely agree, 37.7% agree and 4.4% disagree.

On item 9, which states that when evaluating a peer, criteria are used to judge another’s ideas, 57.7% completely agree, 37.7% agree and 4.4% disagree. On item 10, which states that evaluating a fellow trainee leads to learning new concepts and generating ideas for one’s own performance, 71.1% completely agree and 28.8% agree (Figure 4).

Figure 4. Perception on the utility of the assessment between peers

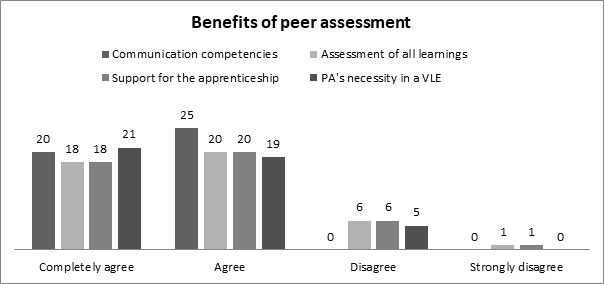

Regarding the benefits of peer assessment, on item 11, which states that evaluating a peer requires communicative skills, 44.4% completely agree and 55.5% agree. On item 12, which states that all learning can be evaluated by peers, 13.3% completely agree, 40% agree, 35.5% disagree and 11.1% strongly disagree.

On item 14, which states that peer assessment always supports learning, 40% completely agree, 44.4% agree, 13.3% disagree and 2.2% strongly disagree. On item 16, which states that peer assessment is necessary in a virtual learning environment, 46.6% completely agree, 42.2% agree and 11.1% disagree (Figure 5).

Figure 5. Perceptions on benefits of peer assessment

Interpretation and discussion of the results

We agree with Fitzpatrick, Sanders, and Worthen (2012) that assessing the perceptions of students (trainees, in this contribution) provides a diagnosis of what is occurring in a virtual learning environment with regard to the in-service training and updating of university academics. The study presented here offers such a diagnosis; although the sample size does not allow generalization, it gives a clear idea of what these teaching-staff members think and feel about peer assessment.

Regarding the first specific question, on the acceptance and value of peer assessment in a VLE, 96% of participants consider that peer assessment offers learning opportunities, and 73% say they take into account the observations made by a colleague; however, 56% do not perceive it as impartial, and 69% say that this type of assessment is not always well accepted. This indicates that, although this type of evaluation provides elements for learning, the quality of the evaluative comments is not always the best, which coincides with the results of Simpson and Clifton (2016), who emphasize the variability of the judgments issued between equals. In addition, 75% of participants rate the observations made by peers in their evaluative comments as clear, 78% as pertinent and 75% as timely.
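The aggregated figures in this paragraph combine the two agreement options (or, for acceptance, the two disagreement options). The Python sketch below reproduces three of them from response counts. The counts are back-calculated from the percentages reported in the Results section, assuming n = 45; they are not raw data published by the study. Working from counts rather than adding already-truncated percentages avoids small shortfalls such as 57.7% + 42.2% = 99.9%.

```python
AGREEMENT = ("completely agree", "agree")


def agreement_share(counts):
    """Percentage of respondents choosing either agreement option."""
    total = sum(counts.values())
    agreeing = sum(counts.get(option, 0) for option in AGREEMENT)
    return 100 * agreeing / total


# Counts back-calculated from the reported percentages, assuming n = 45.
item1 = {"completely agree": 25, "agree": 18, "disagree": 2}
item13 = {"completely agree": 6, "agree": 8, "disagree": 24, "strongly disagree": 7}
item15 = {"completely agree": 11, "agree": 22, "disagree": 11, "strongly disagree": 1}

print(round(agreement_share(item1)))         # 96, learning opportunities
print(round(agreement_share(item15)))        # 73, observations taken into account
print(round(100 - agreement_share(item13)))  # 69, not always well accepted
```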

Regarding the second question, on the usefulness and benefits of peer assessment in the learning process within virtual learning environments, participants perceive that it develops skills for analysis and reflection (100%) and for making value judgments (100%), as well as the habit of elaborating constructive criticism (95%) and criteria for judging the ideas of others (95%). All of them agree that when evaluating a peer, new concepts are learned and ideas for their own performance come up. In higher education, skills should be developed to help students integrate into the knowledge society, which requires reflective judgment, critical thinking and self-managed learning.

Assessing what a peer has produced makes it easier to detect difficulties and inconsistencies in the output of an assignment. This coincides with Sanmartí (2007) and with Sánchez-Rodríguez et al. (2011): the participants in this study recognize that they learn from their colleagues' work, and they acknowledge that reading a peer's work gives them ideas they use in their own performance. This benefits the collaborative learning described by Gallego-Noche et al. (2014) and leads to deeper knowledge. Ensuring that the evaluative comments made between peers coincide with those made by the instructor is a task for the instructional design of the courses, and it depends on the instructor's capacity to train trainees to produce such comments.

Respondents state that communicative skills are required for adequate peer assessment. In addition, 46% say that not all learning can be assessed through peer assessment, 48% perceive that this type of assessment always supports learning, and 89% consider peer assessment necessary in virtual learning environments. Fitzpatrick et al. (2012) point out that evaluation includes procedures such as analysis, judgment, and criticism, as well as the establishment of traits and measures. Based on this and on the data obtained in this study, it can be stated that when trainees perform these procedures while assessing their peers in a VLE, they are encouraged to develop communicative skills such as verbal and written expression and interpretation.

If we accept that online education uses formative peer assessment more than summative peer assessment (Vera-Cazorla, 2014), it should be kept in mind that, according to the perceptions of respondents in this study, not all learners have acquired the skills needed to get their observations accepted by their peers. It is therefore necessary to design activities that help them develop analysis and reflection skills, as well as criteria that will also serve them for self-assessment.

Conclusion

Peer assessment has positive effects on learners' learning, but it should go beyond simple spelling correction. It has to provide opportunities to deepen content and contribute ideas, and it should lead students to value this type of assessment as enriching and reliable. Criticism of products must be constructive and impartial. To ensure that peer assessment is efficient, effective, accepted, and reliable, the teaching methodology should be flexible, open to learners' participation, and include the development of competencies that enable learners to issue judgments. Far from being inquisitorial or accusatory, this type of assessment should motivate learners and recognize their potential; identifying windows of opportunity in peers' writing, tasks, and products will give them greater learning opportunities.

Peers' participation in assessment should be balanced fairly, in collaboration with the instructor in regulating the teaching-learning process, thus achieving co-evaluation. The common aims are to promote learning autonomy and to promote deeper knowledge, in both form and content, thematic and methodological. This requires a change in the learner's role from passive to active. Learners can be empowered by being given assessment tools with which they develop their thinking skills toward critical thinking, enabling them to make value judgments that support their peers' learning.

References

- Bernal, C. A. (2016). Metodología de la investigación [Methodology of Research] (4th ed.). Mexico City: Pearson Education. Retrieved from https://goo.gl/VtPhyc

- Biggs, J. B., & Tang, C. S.-k. (2011). Teaching for Quality Learning at University: What the Student Does (4th ed.). Maidenhead, UK: McGraw-Hill Education.

- Cano, E., & Ion, G. (2016). Innovative Practices for Higher Education Assessment and Measurement. Hershey, PA: Information Science Reference. doi:10.4018/978-1-5225-0531-0

- Carrizosa-Prieto, E., & Gallardo-Ballestero, J. I. (2012). Autoevaluación, coevaluación y evaluación de los aprendizajes [Self-assessment, co-assessment and learning assessment]. Paper presented at III Jornada sobre docencia del Derecho y Tecnologías de la Información y la Comunicación. Barcelona, ES. Retrieved from https://goo.gl/wnSjbK

- Chan-Núñez, M. E. (2004). Tendencias en el Diseño Educativo para Entornos de Aprendizaje Digitales [Trends in Educational Design for Digital Learning Environments]. Revista Digital Universitaria, 5(10), 1-26. Retrieved from https://goo.gl/wKzOHI

- Falchikov, N., & Goldfinch, J. (2000). Student Peer Assessment in Higher Education: A Meta-Analysis Comparing Peer and Teacher Marks. Review of Educational Research, 70(3), 287-322.

- Fitzpatrick, J. L., Sanders, J. R., & Worthen, B. R. (2012). Program evaluation: Alternative approaches and practical guidelines (4th ed.). Harlow, UK: Pearson Education.

- Gallego-Noche, B., Gómez-Ruiz, M. A., Ibarra-Saiz, M. S., & Rodríguez-Gómez, G. (2014). La retroalimentación entre iguales como estrategia para mejora de competencias [Peer feedback as a strategy for development of competences]. Retrieved from http://hdl.handle.net/10498/16944

- Jeffery, D., Yankulov, K., Crerar, A., & Ritchie, K. (2016). How to Achieve Accurate Peer Assessment for High Value Written Assignments in a Senior Undergraduate Course. Assessment & Evaluation in Higher Education, 41(1), 127-140. doi: 10.1080/02602938.2014.987721

- Klenowski, V., Elwood, J., & Thomas, S. (2004). Developing assessment use for teaching and learning in higher education. Digital Education Resource Archive. Retrieved from http://dera.ioe.ac.uk/13042/

- Liu, N.-F., & Carless, D. (2006). Peer feedback: the learning element of peer assessment. Teaching in Higher Education, 11(3), 279-290. doi: 10.1080/13562510600680582

- Monzón, C. O. (2016). 3er Informe de Actividades 2015/2016 [Third Activity Report 2015-2016 by the Dean of CUCEI]. pp. 52. Mexico: University of Guadalajara. Retrieved from https://goo.gl/UI5Eu8

- Prins, F., Sluijsmans, D., Kirschner, P., & Strijbos, J. W. (2005). Formative peer assessment in a CSCL environment: a case study. Assessment and Evaluation in Higher Education, 30, 417-444.

- Reinholz, D. (2016). The assessment cycle: a model for learning through peer assessment. Assessment & Evaluation in Higher Education, 41(2), 301-315. doi: 10.1080/02602938.2015.1008982

- Rodríguez-Fernández, S., Gutiérrez-Braojos, C., & Salmerón-Pérez, H. (2010). Metodologías que optimizan la comunicación en entornos de aprendizaje virtual. [Methodologies to Improve Communication in Virtual Learning Environments]. Revista Comunicar, 17(34), 163-171. doi: 10.3916/C34-2010-03-16

- Rodríguez-Gómez, G., Ibarra-Sáiz, M. S., & García-Jiménez, E. (2013). Autoevaluación, evaluación entre iguales y coevaluación: conceptualización y práctica en las universidades españolas [Self-, peer, and co-assessment: conceptualization and practice in Spanish higher education institutions]. Revista de Investigación en Educación, 2(11), 198-210. Retrieved from https://goo.gl/aPSHKR

- Sánchez-Peralta, D., & Poveda-Aguja, F. (2015). Percepción académica sobre la evaluación en el contexto universitario modalidad a Distancia Tradicional y virtual [Academic perception of assessment in the university context in the traditional and virtual distance modalities]. Cultura, Educación y Sociedad, 6(1), 63-78. Retrieved from https://goo.gl/E9k8Yz

- Sánchez-Rodríguez, J., Ruiz-Palmero, J., & Sánchez-Rivas, E. (2011). Análisis comparativo de evaluación entre pares con la del profesorado: un caso práctico [Comparative analysis of peer assessment and teacher assessment: a practical case]. Docencia e Investigación, 21, 11-24. Retrieved from http://www.enriquesanchezrivas.es/img/docenciaeinvestigacion.pdf

- Sanmartí, N. (2007). Evaluar para aprender: 10 ideas clave [Assessing for learning: ten key ideas]. Barcelona, ES: GRAÓ de IRIF, S.L. Retrieved from https://goo.gl/YjjLki

- Segers, M., & Dochy, F. (2001). New Assessment Forms in Problem-based Learning: The value-added of the students’ perspective. Studies in Higher Education, 26(3), 327-343. doi: 10.1080/03075070120076291

- Shrout, P. E., & Lane, S. P. (2012). Reliability. In H. Cooper, P. Camic, D. Long, A. Panter, D. Rindskopf, & K. Sher (Eds.), APA handbook of research methods in psychology: Foundations, planning, measures, and psychometrics (Vol. 1, pp. 643-660). Washington, DC: American Psychological Association.

- Siiman, L. A., Pacheco-Cortés, C. M., & Pedaste, M. (2014). Digital Literacy for all through Integrative STEM. Proceedings of the LCT 2014 Conference, Learning and collaboration technologies: designing and developing novel learning experiences (Vol. 1, pp. 119-127). doi: 10.1007/978-3-319-07482-5_12

- Simpson, G., & Clifton, J. (2016). Assessing Postgraduate Student Perceptions and Measures of Learning in a Peer Review Feedback Process. Assessment & Evaluation in Higher Education, 41(4), 501-514.

- University of Guadalajara, Mexico (2018). Numeralia Institucional [Institutional statistics]. Retrieved from http://copladi.udg.mx/estadistica/numeralia

- Vera-Cazorla, M. (2014). La evaluación formativa por pares en línea como apoyo para la enseñanza de la expresión escrita persuasiva [Formative peer-assessment online as a support for teaching persuasive written expression]. Educación a Distancia, 43, 2-17. Retrieved from http://www.um.es/ead/red/43/vera.pdf

Acknowledgement

To the teaching-staff members who voluntarily participated in this study, agreeing to share their perceptions of peer assessment in virtual learning environments.

To the faculty academics who supported this study by validating the instrument.

Authors’ affiliation

Carlos Manuel Pacheco Cortés is a doctoral candidate in education, research assistant, instructional designer, and teaching-staff member at the Virtual University System (VUS) of the University of Guadalajara (UDG).

Elba Patricia Alatorre Rojo holds a Doctorate in Education from Nova Southeastern University and is a research professor, instructional design coordinator, and keynote speaker at the VUS of the UDG.

Adriana Margarita Pacheco Cortés holds a Doctorate in Education from Nova Southeastern University and is a research professor, instructional designer, workshop leader, and academic tutor at the VUS of the UDG.

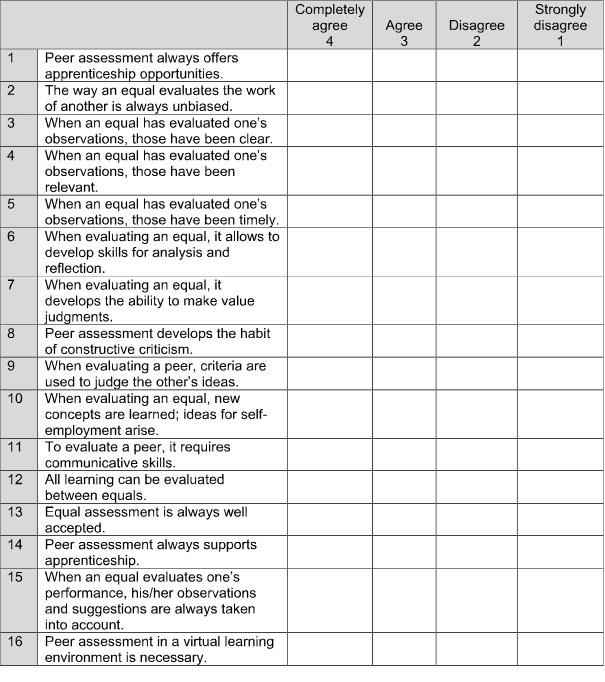

Appendix A

Purpose

To document the perception of teachers regarding the evaluation of peer learning in virtual learning environments.

Directions

Considering that peer evaluation is carried out in a virtual learning environment, mark with an X the option that you consider most appropriate according to your experience and perception.

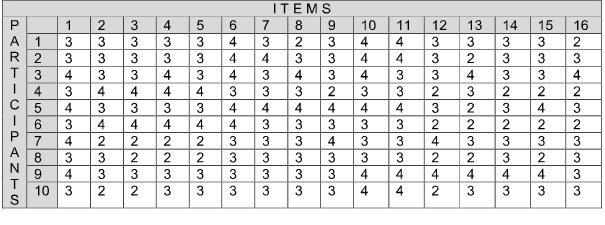

Appendix B

B.1 Example of a Summary of Participants' Responses

B.2 Example of a Summary of Responses per Item