ÇÜ-eDS: Çukurova University E-Learning Support System

Mehmet Tekdal [mtekdal@cu.edu.tr], Çukurova University, Faculty of Education, Department of Computer and Instructional Technologies, 01330 Sarıçam/Adana, Turkey [http://www.cu.edu.tr]

Abstract

Ongoing developments in information and communication technologies have, as in many other fields, brought new opportunities to education and instruction. Chief among these opportunities are e-learning technologies. Open source software, in turn, has added both an economic and a parametric dimension to e-learning systems. Despite these positive developments, however, these technologies are not yet used in Turkish educational institutions as widely as they should be. Although there may be several reasons for this, one of the major causes is the problems encountered in developing and publishing e-learning courses. In this study, an e-learning support system (ÇÜ-eDS, Çukurova University e-Learning Support System) was developed that supports the entire process of preparing and publishing cost-effective, useful e-learning courses. The system consists of four main modules: a learning objects portal, a content development editor, a learning management system, and a single sign-on system. The system was actively used by second-year students for one semester and by third-year students for three semesters. To test its usability, the System Usability Scale (SUS) was then administered to 30 second-year and 30 third-year undergraduate students at Çukurova University. The mean SUS score (65.17 out of 100) indicates that the system is moderately usable but requires some improvements.

Turkish Abstract (English Translation)

Ongoing developments in information and communication technologies have, as in many other fields, created new opportunities in education and instruction. Foremost among these are e-learning technologies, which deliver information to learners quickly and independently of time and place. Open source software, on the other hand, has given e-learning systems both an economic and a parametric dimension. Despite all these positive developments, however, the use and spread of these technologies in educational institutions in Turkey cannot be said to be at the desired level. Although there are several reasons for this, the most important is the problems encountered in developing and publishing e-learning courses. In this study, a cost-effective and useful e-learning support system (ÇÜ-eDS, Çukurova Üniversitesi e-Öğrenme Destek Sistemi) was developed, in which every step from the preparation to the publication of e-learning courses can be carried out. The system consists of four main modules: a learning objects portal, a content development editor, a learning management system, and a single sign-on system. The system was actively used by second-year students for one semester and by third-year students for three semesters. To test the usability of the system, a system usability scale was then administered to 30 second-year and 30 third-year students at Çukurova University. Based on the system usability score (65.17 out of 100), the system can be said to be moderately usable but in need of improvement.

Keywords: e-learning, e-learning support system, learning object repository, learning management system, content development editor.

Introduction

In parallel with advances in information and communication technologies, the development and use of more efficient and effective teaching and learning methods have gained momentum in higher education institutions. These methods shift learning from teacher-centred to student-centred environments and offer more effective ways of presenting learning content. Consequently, many institutions have started to develop e-learning software and to use it to create courses suited to e-learning environments, with the aim of strengthening students' learning skills.

E-learning is generally defined as teaching carried out using web and Internet technologies (Horton & Horton, 2003, p. 13). It provides an environment for running many types of computer-aided education (CAE) applications, such as tutorials, hypermedia, drills, simulations, educational games, open-ended learning environments, tests, and web-based learning (Alessi & Trollip, 2001). In addition, compared with traditional classroom learning, e-learning systems allow students to work on lessons in their own environment and at their own pace, and to repeat activities without time limits. Another advantage of these environments is that courses can be designed for students with different learning styles by providing interactive and motivating multimedia elements such as text, audio, video, animations, and simulations (Clark & Mayer, 2016). In particular, well-designed e-learning courses with rich multimedia materials make learning more enjoyable, so that even students who are easily bored can work in these environments for long periods (Leacock & Nesbit, 2007).

Despite these positive features of e-learning, it cannot be said that universities in Turkey use e-learning technologies for education and instruction at a sufficient level. There may be several reasons for this. Research by Bilgiç, Doğan, and Seferoğlu (2011) suggested that the major problems faced by instructors using e-learning systems were content authoring, technical conditions, the pedagogical weakness of distance education, and a lack of time, student evaluation, and feedback. Ismail (2001), on the other hand, argues that e-learning projects have mostly been turned into technical processes, resulting in expensive software implementations that in fact go unused by uninformed, fearful, or resentful employees. In another study, Balaban (2012) made the following assessment:

“E-Learning applications are not a technology or software project, it is a project for production and publishing of information. It is an educational project that benefits everyone: administrators, students, faculty members and employees. One of the most important deficiencies in our country is the lack of e-learning content; it brings responsibility to the universities to overcome this lack.”

One of the most important projects for promoting e-learning environments is OpenCourseWare (OCW), which is supported in Turkey by the Turkish Academy of Sciences (TUBA). The project was initiated in 2002 by the Massachusetts Institute of Technology (MIT) (OpenCourseWare, 2016). To implement the project in Turkey, TUBA organised a meeting on March 23, 2007, which brought together the Council of Higher Education (YÖK), the State Planning Organization (DPT), the Scientific and Technological Research Council of Turkey (TÜBİTAK), the Turkish National Academic Network and Information Center (ULAKBİM), and representatives of 24 universities. At this meeting, a consortium and a consortium board of directors were formed, and the courses developed by the participating universities were published on the organisation’s website (Türkiye Bilimler Akademisi, 2014). According to the latest information, only eight universities (Ankara, Baskent, Eastern Mediterranean, Gazi, Hacettepe, Middle East Technical and Sabancı) have continued to support the project. This level of support is clearly inadequate.

Another important development regarding open courses was the emergence of Massive Open Online Courses (MOOCs) (Hollands & Tirthali, 2014; Spector, 2014). The goal was for anyone in the world with an Internet connection to be able to participate easily in courses without paying any fees. The movement's slogan, “Free education accessible all over the world”, quickly won universal admiration. MOOC platforms such as Udacity (www.udacity.com), Coursera (www.coursera.org), and edX (www.edx.org) have since introduced online courses that have attracted great media interest: publications such as The New York Times, Time, MIT Technology Review, The Wall Street Journal, The Guardian, and The Economist have all published articles about MOOC platforms (Leontyev & Baranov, 2013). In addition to universities, some organisations have shared their own open and free e-learning resources with the world. Notable examples are Khan Academy (www.khanacademy.org), the Saylor Foundation (www.saylor.org), and iTunes U (www.apple.com/tr/education/ipad/itunes-u). The most remarkable feature of Khan Academy lessons is that they are published as narrated, animated videos rather than recordings of a live teacher. Saylor courses are published as YouTube videos featuring experts and professors in the subject area. On the iTunes U platform, teachers can prepare their own courses using multimedia elements such as text, pictures, animations, and videos, and students can then register for these courses.

However, despite all of these positive developments in the e-learning arena, e-learning technologies are not used in Turkish educational institutions as widely as they should be. Although there may be several reasons for this, one of the major causes is the problems encountered in developing and publishing e-learning courses. Therefore, in this study we developed a cost-effective e-learning support system, called ÇÜ-eDS, as part of a project supported by Çukurova University in Turkey, in order to help solve the problems mentioned above, to contribute to the OCW and MOOC projects, and thus to help spread e-learning systems to more universities. The primary objective of this study is to introduce ÇÜ-eDS and to evaluate its usability by analysing System Usability Scale (SUS) data collected from 30 students who used the system over three semesters (hereafter the Very Experienced Group, VEG) and another 30 students who used the system over one semester (hereafter the Less Experienced Group, LEG). Four research questions based on this primary objective are listed below:

- According to the mean SUS score, is the system usable?

- Is there a significant difference between the mean SUS score of male and female students?

- Is there a significant difference between the mean SUS score of students in the VEG and the LEG?

- Are the qualitative and quantitative results consistent with each other with regard to system usability?

System Usability

System usability can be defined as the extent to which a system effectively and efficiently satisfies the needs and specifications of its users (Thowfeek & Abdul Salam, 2014). For software, a more comprehensive definition of usability has been presented by Gupta, Ahlawat, and Sagar (2014): “Software Usability is the ease of use and learnability of a human-made object. The object of use can be a software application, website, book, tool, machine, process, or anything a human interacts with”. In software engineering, usability is the degree to which software can be used by specified users to achieve quantified objectives with effectiveness, efficiency, and satisfaction in a quantified context of use. According to International Organization for Standardization (ISO) standard 9241-11, the usability of a system depends on three elements: efficiency (user performance measured in time), effectiveness (the extent to which users complete tasks successfully), and satisfaction (users’ responses to the system) (Uzun & Çağıltay, 2012).

Structure and Functions of ÇÜ-eDS

ÇÜ-eDS is a low-cost system capable of supporting the entire process of creating and managing useful e-learning courses, from development to publication. To accommodate future needs, the system was given a highly flexible, modular structure, so that new subsystems and modules can be added when required. Wherever possible, the system was built from reusable open source software: instead of creating new modules from scratch, we selected the best candidates from the available open source software and integrated them into the new system, following the concept of reusability. This made the development of the system both economical and time-efficient. The project is therefore expected to serve as a base model for the development of new e-learning projects. The system is currently available at eds.cu.edu.tr. A screenshot of the main interface of the ÇÜ-eDS website is shown in Figure 1.

Figure 1. A screenshot of the main interface of ÇÜ-eDS website

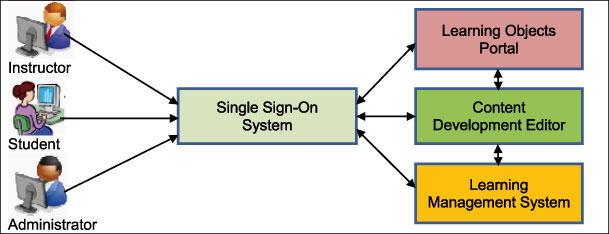

To perform the functions mentioned above, the system integrates four subsystems: a learning objects portal (LOP), a content development editor (CDE), a learning management system (LMS), and a single sign-on (SSO) system. A simplified structure of the system is shown in Figure 2. As Figure 2 shows, all users (instructors, students, and administrators) must first log on to the system through the single sign-on mechanism with a valid username and password. They can then access all other subsystems or applications, within the limits of their access rights, until they log out. The features and functions of the four subsystems are introduced below.

Figure 2. A simplified structure of ÇÜ-eDS

ÇÜ-ÖnPort: Çukurova University Learning Objects Portal

The main purposes of this portal, previously developed by the researchers as part of a research project, were to promote the concept of learning objects, to host a range of information about learning objects, to store objects, to make these objects easier to access in various ways, and to allow people to use them when developing courses for e-learning systems. More detailed information about the features and functions of this portal can be found in Tekdal (2012) or on the portal’s website (onport.cu.edu.tr). A screenshot of the main interface of the ÇÜ-ÖnPort website is shown in Figure 3.

Figure 3. A screenshot of the main interface of ÇÜ-ÖnPort website

Xerte: Content Development Editor

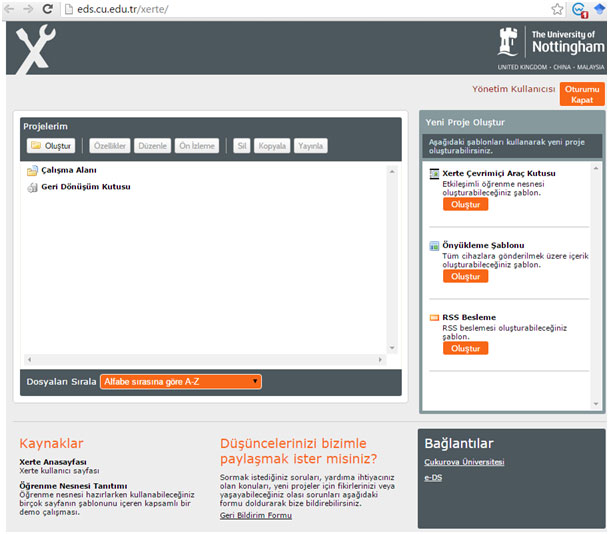

In order to provide a convenient way to develop content, an e-learning content development editor, called Xerte, has been integrated with the system. The Xerte project, launched in 2004, aims to provide high-quality free software to educators all over the world and attempts to build a global community of users and developers of the software. The goal of the project is to speed up the process of developing interactive learning materials by providing a structure that allows for the preparation of reusable learning objects. In this way, development time and the number of problems encountered during the development process can be minimised (The University of Nottingham, 2016).

Xerte, an open source tool, was developed by a group of researchers at the University of Nottingham for preparing interactive, multimedia-rich e-learning content. E-learning content created with Xerte can be exported as a Sharable Content Object Reference Model (SCORM) package. SCORM is a set of standards for web-based learning content, so a project exported in this format can run on any SCORM-compliant LMS (Williams, Williams, & Boyle, 2014).
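To make the portability claim concrete: a SCORM package is a ZIP archive whose `imsmanifest.xml` file, which describes the content and how to launch it, must sit at the root of the archive. The Python sketch below checks only that structural requirement. The helper names `looks_like_scorm_package` and `build_demo_zip` are hypothetical, and the check is deliberately minimal: it does not validate the manifest against the SCORM schemas, which an LMS would do on import.

```python
import io
import zipfile


def looks_like_scorm_package(zip_bytes: bytes) -> bool:
    """Minimal structural check: is imsmanifest.xml at the archive root?

    Hypothetical helper for illustration only. It does not parse or
    validate the manifest, so passing this check does not guarantee
    that an LMS will accept the package.
    """
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        # A root-level manifest appears in namelist() without any folder prefix.
        return "imsmanifest.xml" in archive.namelist()


def build_demo_zip(members: dict[str, str]) -> bytes:
    """Create an in-memory ZIP archive from {path: text} pairs."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        for path, text in members.items():
            archive.writestr(path, text)
    return buffer.getvalue()


if __name__ == "__main__":
    exported = build_demo_zip({"imsmanifest.xml": "<manifest/>", "index.html": "<html/>"})
    misplaced = build_demo_zip({"course/imsmanifest.xml": "<manifest/>"})
    print(looks_like_scorm_package(exported))   # True
    print(looks_like_scorm_package(misplaced))  # False
```

The second archive fails because its manifest is inside a folder, a common reason an LMS rejects a package that otherwise contains valid content.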

Xerte has an easy-to-use, understandable interface and provides an environment for quickly preparing learning objects by combining text, pictures, video, audio, and other multimedia elements. Completed projects are automatically provided with components such as navigation tools and contents pages, making it easy to move between pages and locate specific ones. The tool also includes components that help in preparing courses for students with different learning styles (Bahadur & Oogarah, 2013). The main interface of Xerte is shown in Figure 4.

Figure 4. A screenshot of the main interface of Xerte

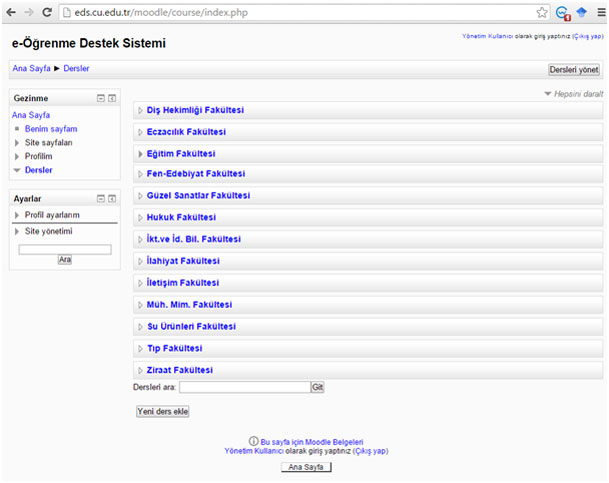

Moodle: Learning Management System

Moodle is a popular learning platform designed to provide educators, administrators, and learners with a single robust, secure, and integrated system for creating personalised learning environments (Moodle.org, 2016).

Moodle stands for Modular Object-Oriented Dynamic Learning Environment. The original version of Moodle was developed in 2001 by an Australian researcher, Martin Dougiamas, as part of his research on social constructivist pedagogy, that is, learning by doing, communicating, and collaborating. Owing to its flexible structure and easy-to-use interface, it quickly became one of the most widely used software packages in educational institutions around the world, and its popularity as an open source virtual learning environment continues to increase (Büchner, 2011; Stocker, 2011). A screenshot of Moodle is shown in Figure 5.

Figure 5. A screenshot of the main interface of Moodle LMS

According to Cole and Foster (2008), Moodle is an open source course management system (CMS) used by universities, colleges, primary schools, businesses, and even individual teachers to integrate web technologies into their courses. They also reported that more than 30,000 organisations worldwide had used Moodle to publish online courses and/or to support face-to-face training. According to more recent statistics (Moodle.net, 2016), there were 73,352 registered Moodle sites across 232 countries, offering 10,917,590 courses to 94,635,124 registered users. Some features of this widely adopted LMS are (Moodle.org, 2016):

- easy to use;

- free, with no licensing fee;

- always up-to-date;

- language support;

- all-in-one learning platform;

- highly flexible and fully customisable;

- scalable to any size;

- robust, secure, and private;

- use any device, anywhere, any time;

- wide range of existing resources;

- supported by a large community.

Moodle, as a learning management system, provides a structure which fulfils many functions for learning. Some of these important functions are grouped and listed below.

- Course management:
  - open courses;
  - students can register for courses using a variety of methods;
  - ability to monitor the work of students;
  - upload of files (assignments, lecture notes, courses, etc.).

- Adding various activities:
  - assignments;
  - discussion forums;
  - online quizzes;
  - peer evaluation activities.

- Site management:
  - add users with various roles (manager, course creator, educator, student, guest, etc.);
  - add courses;
  - change appearance of interface;
  - install new plug-ins.

- Use mobile applications:
  - immediate notifications and announcements;
  - follow lessons from mobile devices;
  - create courses suitable for mobile devices.

Single Sign-on System

Single sign-on is the ability of an individual to log on to multiple applications within an organisation using a single username and password (Zhao et al., 2015). With this mechanism, users who log on with their username and password can access and use all other subsystems or applications, within their access rights, without signing in again (Süral, 2013). In short, once users sign in to the main session, they can access the other applications without further authentication. For example, once users have signed in to their Google account, they can use other Google services such as Gmail, Drive, and Google+ without having to sign in again for as long as the session lasts. Likewise, when the user logs out, the open sessions of all services are closed automatically.

The single sign-on module was developed to integrate independent and heterogeneous software systems such as Moodle, Xerte, and ÇÜ-ÖnPort as subsystems of ÇÜ-eDS, and to give access to all of them with a single username and password. The only requirement for using the system is to create an account with a valid e-mail address.
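The behaviour described above (one log-on, access to every subsystem, one log-out that ends all sessions) can be sketched as a central session store that each subsystem consults. This is a minimal Python illustration under stated assumptions, not the ÇÜ-eDS implementation, whose internals the paper does not describe: the class and method names are hypothetical, sessions live in memory, and production SSO deployments usually rely on established protocols such as CAS, SAML, or OpenID Connect rather than a hand-written token store.

```python
import hashlib
import hmac
import secrets
from typing import Optional


class SingleSignOnServer:
    """Hypothetical central identity service shared by all subsystems."""

    def __init__(self) -> None:
        self._accounts: dict[str, tuple[bytes, bytes]] = {}  # e-mail -> (salt, password hash)
        self._sessions: dict[str, str] = {}  # session token -> e-mail

    @staticmethod
    def _hash(password: str, salt: bytes) -> bytes:
        # Salted, deliberately slow hash so passwords are never stored in clear text.
        return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)

    def register(self, email: str, password: str) -> None:
        """Create an account keyed by e-mail address."""
        salt = secrets.token_bytes(16)
        self._accounts[email] = (salt, self._hash(password, salt))

    def login(self, email: str, password: str) -> Optional[str]:
        """Authenticate once and return a session token, or None on failure."""
        record = self._accounts.get(email)
        if record is None:
            return None
        salt, stored = record
        if not hmac.compare_digest(stored, self._hash(password, salt)):
            return None
        token = secrets.token_urlsafe(32)
        self._sessions[token] = email
        return token

    def validate(self, token: str) -> Optional[str]:
        """Called by any subsystem (portal, editor, LMS) instead of asking for a password."""
        return self._sessions.get(token)

    def logout(self, token: str) -> None:
        """Removing the central session ends access to every subsystem at once."""
        self._sessions.pop(token, None)


if __name__ == "__main__":
    sso = SingleSignOnServer()
    sso.register("student@example.edu", "correct horse")  # placeholder account
    token = sso.login("student@example.edu", "correct horse")
    # The portal, the editor, and the LMS would all make this same check.
    print(sso.validate(token))  # student@example.edu
    sso.logout(token)
    print(sso.validate(token))  # None
```

The design choice that produces single log-out is that subsystems hold no sessions of their own: they ask the central store on each request, so deleting one token revokes access everywhere.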

Research Method

This study is predominantly quantitative, with a single qualitative item added. This kind of design, in which quantitative and qualitative data are collected simultaneously, is also called an enriched design. The findings make it possible to determine whether the two data sets are consistent with each other, so the design also contributes to the reliability and validity of the research (Büyüköztürk, Akgün, & Karadeniz, 2016; Johnson & Christensen, 2008).

Participants

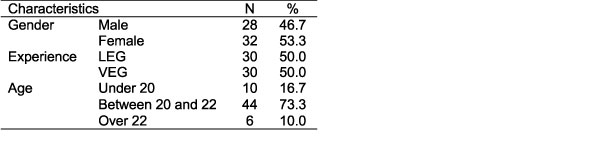

The participants of this study were 60 undergraduate students at Çukurova University in Adana, Turkey. Table 1 shows descriptive statistics of the participants’ characteristics.

Table 1: Descriptive Statistics of the Participants’ Characteristics

Procedure

To test and evaluate the usability and success rate of ÇÜ-eDS, the system was made available to students during the 2014/2015 academic year (first and second semesters) and the 2015/2016 academic year (first semester). The study was conducted with students enrolled in the Physics I, Programming Languages I, Computer Hardware, and Operating Systems and Applications courses. The following steps were carried out in sequence: (a) all students created their accounts by providing profile information and a valid e-mail address, and started to use the system once the confirmation process was complete; (b) the researchers described and explained the system and its functions to the students; (c) the researchers presented graphic and screen design rules (Thorsen, 2009) and principles of creating tutorials (Alessi & Trollip, 2001) to the students; (d) each of the courses listed above was presented by a researcher; (e) project groups were formed and each group was assigned a project whose objective was to create tutorials for the course its members were enrolled in, and the students created these tutorials during the semester using the system resources and functions they had learned from the researchers; (f) finally, after completing their tasks, each student completed the SUS questionnaire.

Instruments

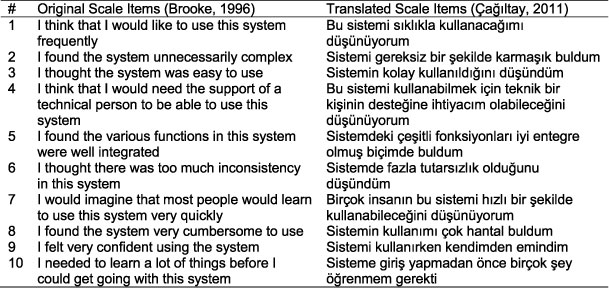

In this study, the SUS was used as the measurement tool. The scale was originally developed by Brooke in 1986 to measure the usability of products and services (Brooke, 1996; Bangor, Kortum, & Miller, 2009). It has since been used to determine the usability of many systems, mostly software systems, and is frequently used by researchers in education to evaluate the usability of distance education and e-learning systems (Ayad & Rigas, 2010; Granic & Cukusic, 2011; Luo, Liu, Kuo, & Yuan, 2014; Marco, Penichet, & Gallud, 2013; Renaut, Batier, Flory, & Heyde, 2006; Shi, Gkotsis, Stepanyan, Al Qudah, & Cristea, 2013). The scale was translated and adapted for Turkish contexts by Çağıltay (2011). The original and translated versions of the SUS are presented in Appendix 1.

The SUS is a Likert-type scale consisting of 10 items. Each item is on a 5-point scale (1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, and 5 = strongly agree). This scale gives a single score between 0 and 100 that represents the overall usability of the system being tested. Score calculation involves the following steps:

- for items 1, 3, 5, 7, and 9 subtract 1 from the scale position;

- for items 2, 4, 6, 8, and 10 subtract the scale position from 5;

- sum the converted responses of each user and multiply the result by 2.5 to find a score between 0 and 100.
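The scoring rule above can be written as a short Python function. This is a sketch for illustration (the names `sus_score` and `mean_sus` are ours); it assumes each respondent's answers are listed in item order 1 to 10, and it treats a study-level figure such as the 65.17 reported here as the mean of the individual scores.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Convert one respondent's ten 1-5 responses into a 0-100 SUS score."""
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, position in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += position - 1  # items 1, 3, 5, 7, 9: scale position minus 1
        else:
            total += 5 - position  # items 2, 4, 6, 8, 10: 5 minus scale position
    return total * 2.5  # 10 items x at most 4 points x 2.5 = 100


def mean_sus(all_responses: list[list[int]]) -> float:
    """Average the individual SUS scores of all respondents."""
    return mean(sus_score(r) for r in all_responses)


if __name__ == "__main__":
    print(sus_score([3] * 10))    # 50.0 (all neutral)
    print(sus_score([5, 1] * 5))  # 100.0 (best possible)
    print(mean_sus([[4, 2] * 5, [3, 3, 4, 2, 3, 3, 4, 2, 3, 3]]))  # 67.5
```

Because each converted item ranges from 0 to 4, the multiplier 2.5 simply rescales the 0 to 40 sum onto the 0 to 100 range.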

A new open-ended item (“Please write any positive or negative aspects of the system in your own words”) was also added to the end of the SUS questionnaire in order to collect positive or negative opinions about the system.

Results

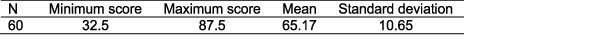

The first research question of this study was whether the system is usable according to the mean SUS score. In order to answer this question, descriptive statistics of SUS scores were calculated and are presented in Table 2. As seen from Table 2, the mean score was found to be 65.17.

Table 2: Descriptive Statistics of SUS Scores

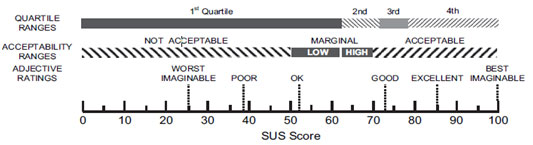

According to the SUS score evaluation scale (Figure 6) developed by Bangor, Kortum, and Miller (2008), a mean SUS score between 50 and 70 indicates that a system is marginally acceptable: usable, but in need of higher scores to be considered a superior system. In this respect, the system can be said to be usable, although not at a sufficient level.
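The interpretation step can also be expressed in code. The sketch below maps a mean SUS score to the three acceptability ranges as commonly summarised from Bangor, Kortum, and Miller (2008): below 50 not acceptable, 50 to 70 marginal, above 70 acceptable. The function name is hypothetical, and counting exactly 50 and exactly 70 as marginal is our assumption, since the range boundaries in the original work are approximate.

```python
def acceptability_range(score: float) -> str:
    """Map a mean SUS score (0-100) to an acceptability range.

    Thresholds follow a summary of Bangor, Kortum, and Miller (2008);
    treating the boundary values 50 and 70 as "marginal" is an assumption.
    """
    if not 0 <= score <= 100:
        raise ValueError("SUS scores lie between 0 and 100")
    if score < 50:
        return "not acceptable"
    if score <= 70:
        return "marginal"
    return "acceptable"


if __name__ == "__main__":
    print(acceptability_range(65.17))  # marginal
```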

Figure 6. SUS score evaluation scale

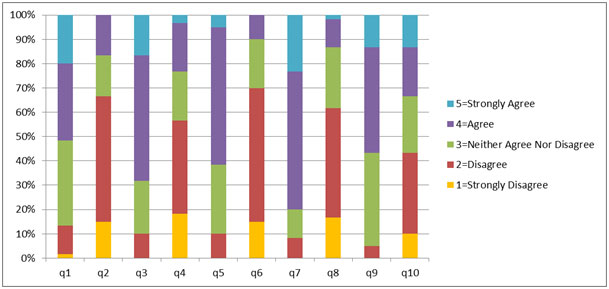

To further examine whether the system is usable, the percentage frequencies of responses to each questionnaire item were calculated; they are presented graphically in Figure 7. As Figure 7 shows, more than 87%, 90%, 90%, 92%, and 95% of the students gave a score of 3, 4, or 5 to the positively worded items 1, 3, 5, 7, and 9, respectively. Likewise, more than 83%, 77%, 90%, 87%, and 67% of the students gave a score of 3, 2, or 1 to the negatively worded items 2, 4, 6, 8, and 10, respectively. These results show that most students rated the system neutrally or favourably on every item, which supports the conclusion that the system is usable.
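The percentages above count a response as neutral or favourable when it is 3, 4, or 5 on a positively worded (odd) item, or 1, 2, or 3 on a negatively worded (even) item. A minimal Python sketch of that tally follows; the function name is hypothetical, and the two respondents in the usage lines are invented for illustration, not taken from the study's data.

```python
def favourable_or_neutral_shares(all_responses: list[list[int]]) -> list[float]:
    """Per-item percentage of respondents whose answer is neutral or favourable.

    all_responses holds one list of ten 1-5 answers per respondent, in item order.
    """
    if not all_responses:
        raise ValueError("need at least one respondent")
    shares = []
    for item_index in range(10):
        item_number = item_index + 1
        # Odd items are positively worded, even items negatively worded.
        band = {3, 4, 5} if item_number % 2 == 1 else {1, 2, 3}
        count = sum(1 for answers in all_responses if answers[item_index] in band)
        shares.append(100.0 * count / len(all_responses))
    return shares


if __name__ == "__main__":
    sample = [
        [5, 1, 4, 2, 4, 1, 5, 2, 4, 2],
        [2, 4, 3, 3, 4, 2, 4, 2, 3, 4],
    ]
    print(favourable_or_neutral_shares(sample))
    # [50.0, 50.0, 100.0, 100.0, 100.0, 100.0, 100.0, 100.0, 100.0, 50.0]
```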

Figure 7. Percentage frequencies of responses to the SUS questionnaire

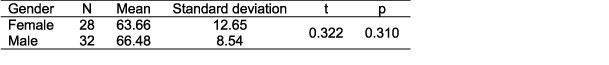

The second research question of this study was whether there is a significant difference between the mean SUS scores of male and female students. To answer it, an independent groups t-test was conducted; the results are provided in Table 3. No significant difference was found between the mean SUS scores of male and female students (t = 0.322, p > .05).

Table 3: Independent Groups T-Test Analysis of Mean SUS Scores of Students by Gender

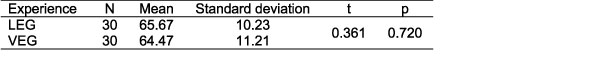

The third research question of this study was whether there is a significant difference between mean SUS scores of students in the VEG and the LEG or not. In order to answer this question, an independent groups t-test analysis was performed and analysis results are listed in Table 4. According to the results of this analysis, there is no significant difference between mean SUS scores of students in the VEG and the LEG (t = 0.361, p > .05).

Table 4: Independent Groups T-Test Analysis of Mean SUS Scores of Students in the LEG and the VEG
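Both comparisons above rely on an independent groups t-test. The paper does not state whether the pooled-variance (Student) or the unequal-variance (Welch) form was used; the sketch below assumes the pooled form and computes only the t statistic and its degrees of freedom with the Python standard library. Turning t into a p-value requires the t distribution, for example via `scipy.stats.ttest_ind`, whose default `equal_var=True` corresponds to this pooled version. The function name is hypothetical, and the usage values are toy numbers, not the study's data.

```python
import math
import statistics


def pooled_t_statistic(group_a: list[float], group_b: list[float]) -> tuple[float, int]:
    """Student's independent-samples t statistic (pooled variance) and its df."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    # statistics.variance uses the sample (n - 1) denominator.
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    df = n_a + n_b - 2
    pooled_variance = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    standard_error = math.sqrt(pooled_variance * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        raise ValueError("both groups have zero variance; t is undefined")
    t = (statistics.mean(group_a) - statistics.mean(group_b)) / standard_error
    return t, df


if __name__ == "__main__":
    # Toy SUS scores for two small groups.
    t, df = pooled_t_statistic([62.5, 70.0, 65.0, 57.5], [67.5, 60.0, 72.5, 65.0])
    print(f"t = {t:.3f}, df = {df}")
```

For the VEG/LEG comparison, with 30 students in each group, the degrees of freedom would be 30 + 30 - 2 = 58.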

To answer the final research question, namely whether the qualitative and quantitative results are consistent with respect to system usability, responses to the open-ended item of the questionnaire (“Please write any positive or negative aspects of the system in your own words.”) were analysed qualitatively and then compared with the results of the quantitative analysis (see Table 2). The feedback of the 30 students to this open-ended question was examined individually. This examination showed that 13 students (43.3%) gave ‘positive’ feedback, three students (10.0%) gave ‘negative’ feedback, and the remaining 14 students (46.7%) gave ‘positive’ feedback together with suggestions for eliminating some deficiencies. The deficiencies stated by the students were grouped under the following main headings: (a) improving the design of the system, (b) increasing the number of help menus, (c) fixing upload problems for some file types, (d) improving visualisation of the system, and (e) translating all menus into Turkish. Because most students gave positive feedback about system usability, in line with the SUS score (65.17 out of 100), the qualitative and quantitative data obtained in this research can be said to be consistent and mutually supportive. This consistency also supports the reliability of the research data (McMillan & Schumacher, 1997).
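The percentages reported for the open-ended item are simple proportions of the 30 examined responses. The sketch below reproduces them from the category counts given in the text (13 positive, 3 negative, 14 positive with suggestions). The category labels and the function name are hypothetical, and the classification of each response was done by the researchers, not by code.

```python
from collections import Counter


def category_percentages(codes: list[str]) -> dict[str, float]:
    """Percentage of responses in each coded category, rounded to one decimal."""
    if not codes:
        raise ValueError("no coded responses")
    counts = Counter(codes)
    return {category: round(100 * n / len(codes), 1) for category, n in counts.items()}


if __name__ == "__main__":
    codes = (
        ["positive"] * 13
        + ["negative"] * 3
        + ["positive with suggestions"] * 14
    )
    print(category_percentages(codes))
    # {'positive': 43.3, 'negative': 10.0, 'positive with suggestions': 46.7}
```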

Conclusion and Suggestions

In this study, the usability of the newly developed e-learning support system, ÇÜ-eDS, was investigated. To measure the usability of the system, quantitative and qualitative data were gathered from 60 undergraduate students who had used the system for some time. Based on the analysis of the collected data, the following conclusions have been reached:

- The system SUS score was found to be 65.17 out of 100. According to this result it can be said that the system is at a medium level of usability (Bangor, Kortum, & Miller, 2008).

- There was no significant difference between the SUS scores of male and female students (t = 0.323, p > .05). This result is consistent with a number of similar works in the existing literature. For example, Ituma (2011) reported no significant gender difference in a study on the usability of the WebCT Blackboard Learning Management System. Similarly, Orfanou, Tselios, and Katsanos (2015) conducted a study in which students evaluated eClass and Moodle systems in terms of system usability. They also found that students’ gender does not significantly affect SUS score.

- No significant difference was found between the SUS scores of the more experienced (VEG) and less experienced (LEG) students (t = 0.361, p > .05). In other words, students who had used the system longer and those who had used it for a shorter time rated it similarly, which suggests that the system is user friendly. However, in other similar studies, researchers have reported a significant difference between experienced and inexperienced users (Kortum & Bangor, 2013; Orfanou, Tselios, & Katsanos, 2015; Sauro, 2011). There may be several explanations for this discrepancy, one of which may be the larger sample sizes of these previous studies. Furthermore, previous studies used different methods to determine user experience; in one study, for example, students were simply asked whether they had prior experience with the system (yes/no).

- Finally, it was found that the results obtained from the quantitative and qualitative data were consistent with each other; both data sets showed that students found the system usable.

Based on the results of this study, the following suggestions are made:

- As this study had a sample size of only 60 students, it should be repeated with a larger sample to obtain more accurate results.

- As this study only involved students, a more comprehensive study should be done with data gathered from both students and teachers.

- As this study was conducted with a limited number of students from one department, a more comprehensive study should be carried out with more students and teachers from different faculties and departments.

- With this study, very useful data and feedback were obtained. After eliminating the deficiencies of the system in accordance with the feedback and by considering the three suggestions listed above, a more comprehensive study could be conducted.

References

- Alessi, S. M., & Trollip, S. R. (2001). Multimedia for learning: Methods and development. Boston: Allyn and Bacon.

- Ayad, K., & Rigas, D. (2010). Comparing virtual classroom, game-based learning and storytelling teachings in e-learning. International Journal of Education and Information Technologies, 4(1), 15-23.

- Bahadur, G. K., & Oogarah, D. (2013). Interactive whiteboard for primary schools in Mauritius: An effective tool or just another trend? International Journal of Education and Development using Information and Communication Technology, 9(1), 19.

- Balaban, E. (2012). Dünyada ve Türkiye’de Uzaktan Eğitim ve Bir Proje Önerisi.

- Bangor, A., Kortum, P. T., & Miller, J. T. (2008). An empirical evaluation of the system usability scale. International Journal of Human–Computer Interaction, 24(6), 574–594.

- Bangor, A., Kortum, P., & Miller, J. (2009). Determining what individual SUS scores mean: Adding an adjective rating scale. Journal of Usability Studies, 4(3), 114–123.

- Bilgiç, H. G., Doğan, D., & Seferoğlu, S. S. (2011). Türkiye’de Yükseköğretimde Çevrimiçi Öğretimin Durumu: İhtiyaçlar, Sorunlar ve Çözüm Önerileri. Yükseköğretim Dergisi, 1(2), 80–87.

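- Brooke, J. (1996). SUS: A "quick and dirty" usability scale. In P. W. Jordan, B. Thomas, B. A. Weerdmeester, & I. L. McClelland (Eds.), Usability evaluation in industry (pp. 189–194). London: Taylor & Francis.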

- Büchner, A. (2011). Moodle administration. Packt Publishing Ltd.

- Büyüköztürk, Ş., Akgün, Ö. E., & Karadeniz, Ş. (2016). Bilimsel Araştırma Yöntemleri. Ankara: Pegem Akademi.

- Çağıltay, K. (2011). İnsan bilgisayar etkileşimi ve kullanılabilirlik mühendisliği: Teoriden pratiğe. Ankara, Turkey: ODTÜ Geliştirme Vakfı Yayıncılık.

- Clark, R. C., & Mayer, R. E. (2016). E-learning and the science of instruction: Proven guidelines for consumers and designers of multimedia learning. John Wiley & Sons.

- Cole, J., & Foster, H. (2008). Using Moodle: Teaching with the popular open source course management system. O’Reilly Media, Inc.

- Granic, A., & Cukusic, M. (2011). Usability testing and expert inspections complemented by educational evaluation: A case study of an e-Learning platform. Educational Technology & Society, 14(2), 107–123.

- Gupta, D., Ahlawat, A., & Sagar, K. (2014). A critical analysis of a hierarchy based Usability Model. Proceedings of Contemporary Computing and Informatics (IC3I), 2014 International Conference, IEEE, 255–260.

- Hollands, F. M., & Tirthali, D. (2014). MOOCs: Expectations and Reality. Full report. Retrieved from http://cbcse.org/wordpress/wp-content/uploads/2014/05/MOOCs_Expectations_and_Reality.pdf

- Horton, W., & Horton, K. (2003). E-learning Tools and Technologies: A consumer's guide for trainers, teachers, educators, and instructional designers. Indianapolis: John Wiley & Sons.

- Ismail, J. (2001). The design of an e-learning system: Beyond the hype. The Internet and Higher Education, 4(3), 329–336.

- Ituma, A. (2011). An evaluation of students’ perceptions and engagement with e-learning components in a campus based university. Active Learning in Higher Education, 12(1), 57–68.

- Johnson, B., & Christensen, L. (2008). Educational research: Quantitative, qualitative and mixed approaches. Sage.

- Kortum, P. T., & Bangor, A. (2013). Usability ratings for everyday products measured with the System Usability Scale. International Journal of Human-Computer Interaction, 29(2), 67–76.

- Leacock, T. L., & Nesbit, J. C. (2007). A framework for evaluating the quality of multimedia learning resources. Educational Technology & Society, 10(2), 44–59.

- Leontyev, A., & Baranov, D. (2013). Massive open online courses in chemistry: A comparative overview of platforms and features. Journal of Chemical Education, 90(11), 1533–1539.

- Luo, G.-H., Liu, Z.-F., Kuo, H.-W., & Yuan, S.-M. (2014). Design and implementation of a simulation-based learning system for international trade. The International Review of Research in Open and Distance Learning, 15(1). Retrieved from http://www.irrodl.org/index.php/irrodl/article/view/1666/2816

- Marco, F. A., Penichet, V. M. R., & Gallud, J. A. (2013). Collaborative e-learning through drag & share in synchronous shared workspaces. Journal of Universal Computer Science, 19(7), 894–911.

- McMillan, J. H., & Schumacher, S. (1997). Research in education: A conceptual introduction. New York: Longman.

- Moodle.net (2016). Moodle.org: Moodle Statistics. Retrieved 17 November, 2016, from https://moodle.net/stats/

- Moodle.org (2016). About Moodle – MoodleDocs. Retrieved 17 November, 2016, from https://docs.moodle.org/30/en/About_Moodle

- OpenCourseWare (2016). MIT OpenCourseWare | Free Online Course Materials. Retrieved 17 November, 2016, from http://ocw.mit.edu/index.htm

- Orfanou, K., Tselios, N., & Katsanos, C. (2015). Perceived usability evaluation of learning management systems: Empirical evaluation of the System Usability Scale. The International Review of Research in Open and Distributed Learning, 16(2).

- Renaut, C., Batier, C., Flory, L., & Heyde (2006). Improving web site usability for a better e-learning experience. Current developments in technology-assisted education, 891–895. Badajoz, Spain: FORMATEX.

- Sauro, J. (2011, January 19). Does prior experience affect perceptions of usability? [Blog post]. Retrieved from http://www.measuringu.com/blog/prior-exposure.php

- Shi, L., Gkotsis, G., Stepanyan, K., Al Qudah, D., & Cristea, A. I. (2013). Social Personalized Adaptive E-Learning Environment: Topolor - Implementation and Evaluation. In H. C. Lane, K. Yacef, J. Mostow, & P. Pavlik (Eds.), Artificial Intelligence in Education. AIED 2013. Lecture Notes in Computer Science (Vol. 7926, pp. 708–711). Berlin, Heidelberg: Springer.

- Spector, J. M. (2014). Remarks on MOOCS and Mini-MOOCS. Educational Technology Research and Development, 62(3), 385-392. doi: 10.1007/s11423-014-9339-4

- Stocker, V. L. (2011). Science teaching with Moodle 2.0. Packt Publishing Ltd.

- Süral, İ. (2013). SAKAI Öğrenme Yönetim Sisteminde Tek Şifre Yönetimi. Akademik Bilişim 2013–XV, 915–918. Akademik Bilişim Konferansı Bildirileri.

- Tekdal, M., Örneksoy, C. A., Karsen, M., & Kocabıyık, Y. (2012). ÇÜ-ÖnPort: Çukurova Üniversitesi Öğrenme Nesneleri Portalı. Akademik Bilişim Konferansı 2012, 1-3, Uşak Üniversitesi.

- Thorsen, C. (2009). TechTactics: Technology for teachers. Boston: Pearson/Allyn and Bacon.

- Thowfeek, M. H., & Salam, M. N. (2014). Students’ assessment on the usability of e-learning websites. Procedia – Social and Behavioral Sciences, 141, 916–922. Retrieved from http://dx.doi.org/10.1016/j.sbspro.2014.05.160

- Türkiye Bilimler Akademisi (2014). Ulusal Açık Ders Malzemeleri. Retrieved from http://www.acikders.org.tr

- University of Nottingham, The (2016). The Xerte Project. Retrieved from https://www.nottingham.ac.uk/xerte/index.aspx

- Uzun, E., & Çağıltay, K. (2012). Çevrimiçi El Yazısı Tanıma Sistemi Olan Grafitti’nin Kullanılabilirlik Açısından Değerlendirilmesi. SDU International Journal of Technological Science, 4(1).

- Williams, M., Williams, P., & Boyle, A. P. (2014). Development of an online field safety open educational resource using Xerte. Planet(2014), 1–11.

- Zhao, G., Ba, Z., Zhang, F., et al. (2013). Constructing authentication web in cloud computing. Proceedings of Cloud and Service Computing (CSC), 2013 International Conference on, 106-111.

Acknowledgements

This project is supported by funds from the Scientific Research Projects Unit of Çukurova University, Adana, Turkey (Project code: EF2013BAP9).

Appendix 1

Original and translated versions of the System Usability Scale (SUS)