Exploring Distributed Leadership for the Quality Management of Online Learning Environments

Stuart Palmer [spalm@deakin.edu.au], Dale Holt [dholt@deakin.edu.au], Deakin University, Geelong, Victoria, Australia [http://www.deakin.edu.au],

Maree Gosper [maree.gosper@mq.edu.au], Macquarie University, North Ryde, Australia [http://mq.edu.au],

Michael Sankey [michael.sankey@usq.edu.au], University of Southern Queensland, Toowoomba, Australia [http://www.usq.edu.au],

Garry Allan [garry.allan@rmit.edu.au], RMIT University, Victoria, Australia [http://www.rmit.edu.au]

Abstract

Online learning environments (OLEs) are complex information technology (IT) systems that intersect with many areas of university organisation. Distributed models of leadership have been proposed as appropriate for the good governance of OLEs. Based on theoretical and empirical research, a group of Australian universities proposed a framework for the quality management of OLEs, and sought to validate the model via a survey of Australasian university representatives with OLE leadership responsibility. For the framework elements: Planning and Resourcing were rated most important; Organisational structure was rated least important; Technologies were rated low in importance and high in satisfaction; Resourcing and Evaluation were rated low in satisfaction; and Resourcing had the highest rating of importance coupled with low satisfaction. Considering distributed leadership in their institution, respondents reported that the organisational alignments represented by ‘official’ reporting and peer relationships were significantly more important and more effective than the organisational alignments linking the formal and informal leaders. From a range of desirable characteristics of distributed leadership, ‘continuity and sustainability’ received the highest rating of importance and a low rating of ‘in evidence’ – there are concerns about the sustainability of distributed leadership for the governance of OLEs in universities.

Keywords: Online learning environments; distributed leadership; quality management framework

Introduction

Higher education internationally has been significantly influenced by developments in information technology (IT) that have opened up new channels to information, and new methods for learning and teaching. Learning management systems (LMSs), as the underpinning element of an institutional online learning environment (OLE), are perhaps the most widely used and most expensive educational technology (Salinas, 2008). An e-learning environment is more than just the sum of a technical system and quality learning ‘content’; its success, or otherwise, is strongly mediated by actions taken in the management of the system (Hilgarth, 2011). Management and leadership are thus centrally important for the success of OLEs. It has been observed that the implementation of institutional-level IT systems, including OLEs, often intersects with a complicated and distributed existing organisational power structure, such that many areas and levels of the organisational hierarchy can influence the success, or otherwise, of such a system (Hussain & Cornelius, 2009; Söderström et al., 2012). More generally, changes in organisational structures, external partnerships and governance of organisations reliant on IT systems have led to ‘distributed leadership’ being proposed as a viable means to capitalise on, and productively mobilise, the segmented sources of knowledge and growing number of individuals enacting leadership at all levels in relationship to IT systems (Zhang & Faerman, 2007).

Definitions of distributed leadership in various contexts abound (Lefoe, 2010; Zhang & Faerman, 2007), but a useful generic statement of principles is provided by Harris (2009):

“Distributed leadership, essentially involves both the vertical and lateral dimensions of leadership practice. Distributed leadership encompasses both formal and the informal forms of leadership practice within its framing, analysis and interpretation. It is primarily concerned with the co-performance of leadership and the reciprocal interdependencies that shape that leadership practice”. (Harris, 2009, p.5)

It has been argued that forms of distributed leadership offer at least rhetorical (Bolden, Petrov & Gosling, 2009) if not practical (Jones et al., 2012) models for the effective leadership of large, modern higher education institutions. Distributed leadership is often suggested as a particularly relevant form of leadership for the implementation of OLEs because: it may be more responsive to the unpredictable or disruptive issues arising from e-learning developments (Jameson et al., 2006); the ‘leaders’ in implementing e-learning innovations are often not within, or not senior within, the formal organisational leadership structure (Lefoe, 2010); and, the curriculum changes that often accompany e-learning developments typically involve the collaboration of many contributors, all of whom must take on shared responsibility for achieving project outcomes (Keppell et al., 2010).

Internationally, universities have made very large investments in corporate educational technologies to support their commitments to online, open, distance and flexible education. LMSs have represented the centrepiece of these institutional investments over the last decade or more in the creation of university OLEs (Lonn & Teasley, 2009). The choice of the particular elements of an OLE is a significant decision-making event, shaping institutional approaches to e-learning for a considerable period of time, typically many years. Many university leaders have a stake in making and implementing such a choice, ranging across University Senior Executive members, leadership of central teaching, learning, media production and IT groups and through various levels of faculty academic leadership. Almost all staff in a university use and rely on its OLE in enabling student learning. Like definitions of distributed leadership, models (variously described as frameworks, models, benchmarks, systems, etc.) for the good governance and quality management of IT systems, including models specifically for e-learning systems, also abound (Australasian Council on Open Distance and E-learning, 2010; Charles Sturt University, 2010; IT Governance Institute, 2012; Marshall, 2007; White & Larusson, 2010). Recent data indicate that a significant proportion of LMS owners are considering changing their LMS platform (Instructional Technology Council, 2011). This may be due to the age of the existing system, changes in the teaching and learning context of the institution or the emergence of new LMS vendors, including the option of open source systems (EDUCAUSE Learning Initiative, 2011). The passing of time means that existing contracts with vendors may expire, and this, coupled with recently observed consolidation amongst system vendors and new entrants into the LMS marketplace, means that there will naturally be some changeover of LMSs, with consequent ripple-through changes to institutional OLEs.

A project exploring distributed leadership in the quality management of OLEs

Given all of these circumstances and developments, a group of five Australian universities proposed a project to the Leadership Excellence in Learning and Teaching Program of the Australian Government Office for Learning and Teaching (OLT — http://www.olt.gov.au) (formerly the Australian Learning and Teaching Council) that was entitled ‘Building distributed leadership in designing and implementing a quality management framework for Online Learning Environments’. The aim of the project was to develop and disseminate through a distributed leadership approach an overall framework for the quality management of OLEs in higher education, in an Australian context. The purpose of the framework was to help to guide, but not prescribe, specific leadership actions in various organisational settings relating to new investments in OLEs, and the on-going maintenance and enhancement of such environments, for the benefit of student learning. The framework was specifically not intended to compete with the many existing governance and quality management models for IT systems. Rather, its intention was to assist leaders of such systems to decide where and how appropriate elements of existing models might be employed in a specific institutional context. The five university project partners encompassed a diversity of LMS/OLE configurations (including proprietary and open source), were at different stages of deploying their next generation OLEs, and represented the institutional diversity of the Australian university sector.

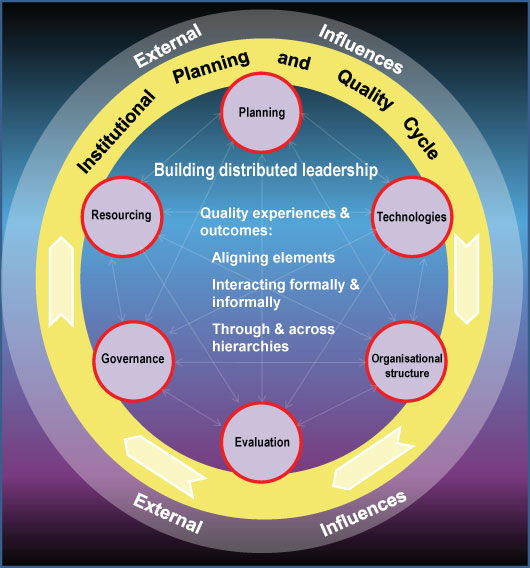

The project was successful in gaining funding from the OLT during 2011–2012. Drawing on a major literature investigation, a workshop of the project partners exploring the project themes in the context of their own institutions, and a first round of institutional focus groups at the five partner institutions, and with input from a project reference group composed of senior academics experienced in OLE leadership, an OLE quality management framework (‘the framework’) was developed (Holt et al., 2013). Through a process of review based on the on-going project data collection and stakeholder feedback, the framework was refined to have the form presented in Figure 1. The heart of the framework is the building of distributed leadership capacity with the aim of enhancing the quality of learning and teaching experiences and outcomes through the alignment of the six identified and interrelated elements. A set of desired characteristics of distributed leadership to support the quality management of OLEs was also developed, and these are summarised in Table 1.

Figure 1. Framework for the quality management of online learning environments

Element Descriptors:

- Planning: external environmental analysis and trend spotting, strategic intelligence gathering, external benchmarking, organisational capacity analysis, institutional purpose, reputation, vision, principles, objectives and strategies, accountabilities, timelines and resource implications

- Organisational structure: nature, range, coordination and delivery of valued services (underpinned by clarity of understanding of needed expertise/staffing capabilities) for staff and students

- Governance: institutional, faculty and school/department committees and forums (and associated responsibilities and accountabilities), policies and standards

- Technologies: type, range, integration, promotion, and innovation and mainstreaming of emerging technologies

- Resourcing: maintenance and enhancement of technologies, skills recognition and staff development, media production, evaluation activities, governance mechanisms, i.e. all other elements

- Evaluation: stakeholder needs, methods, reporting, decision making through governance structures, evaluation relating to the initial selection of new technology, and evidence gathering relating to the on-going assessment of its performance, value and impact

Table 1: Desired characteristics of distributed leadership

- Enabled individual and collective agency

- Co-created & shared vision

- Inclusive of all those who lead

- Broadest recognition of leadership

- Communicative and engaging

- Appropriate responsibilities

- Meaningful rewards

- Trusting and respectful

- Nurturing of valued professional expertise

- Collaborative in development

- Valuing professional forums & communities

- Continuity and sustainability

The structure and value of elements of the framework were able to be tested and refined using the institutional contexts of the project partners and input from the reference group. However, exposure of the framework to a wider context was seen as an important step in the further validation of the framework. The project team and reference group contained a number of university institutional representatives of the Australasian Council on Open, Distance and E-Learning (ACODE — http://www.acode.edu.au). The stated mission of ACODE is, “to enhance policy and practice in open, distance, flexible and e-learning in Australasian higher education”. It was decided that the ACODE institutional representatives would be a suitable audience to provide feedback on the structure, value and application of the framework from a wide range of university perspectives in Australasia. Feedback was sought via an online survey. It was planned that this feedback would help to illuminate the relative importance of the framework elements, the prevalence and characteristics of distributed leadership in Australasian universities, whether there was a common view on these matters across the sector, and generally provide insights into the practice of leadership and governance of OLEs in Australasia.

Methodology

An online survey of ACODE institutional representatives at Australasian (Australian, New Zealand and South Pacific) universities was conducted during March 2012. A total of 46 current ACODE institutional representatives were publicly identifiable, and were invited to participate in the online survey. The survey included items addressing:

- background/demographic information;

- respondents’ perceptions of importance of, and satisfaction with, elements of the proposed framework;

- respondents’ perceptions of the importance and effectiveness of distributed leadership at their universities; and

- respondents’ perceptions of the importance, and evidence of presence, of a range of characteristics of distributed leadership at their universities.

As described in the Results section, some of the data collected are in the form of response scale ratings. We acknowledge that these ratings are fundamentally ordinal in nature. The use of ordinal data in many parametric statistical procedures, while commonplace in the social sciences, is not universally accepted as valid. However, there is a significant body of research that has demonstrated the practical utility of analysis of ordinal data, based on the robustness of many parametric methods to significant departures from assumptions about the underlying data, including departures from normality and ‘intervalness’ that might be present in ordinal scale data (Jaccard & Wan, 1996; Norman, 2010). In the following statistical analyses, a two-sided significance level of p < 0.01 was used. This significance level indicates that the observed result is likely to occur by chance only once for every hundred similar respondent samples, and hence strongly suggests that any observed difference in mean ratings is a real difference. A discussion of the observed results is also presented. As required by human research ethics procedures at the lead institution for the project, the survey was anonymous and voluntary.

Results and Discussion

Response Rate and Demographic Information

Completed survey responses were received from 27 of the 46 ACODE institutional representatives who were publicly identifiable at the time of the survey, a response rate of 58.7 %. However, an additional two incomplete responses were also received. Because the online survey system used saved all data progressively, some of the data and analyses presented below contain responses from up to 29 (63.0 %) respondents. The 48 Australasian universities can be classified into generally agreed institutional groupings. Survey respondents were asked to indicate which of these groupings their institution belonged to and this information is summarised in Table 2.

Table 2: Number and proportion of respondents by institutional grouping

| Institutional grouping | No. of respondents | % of respondents |
| --- | --- | --- |
| South Pacific or New Zealand University (SPNZ) | 5 | 18.5% |
| Group of Eight (Go8) | 4 | 14.8% |
| Innovative Research Universities (IRU) | 5 | 18.5% |
| Australian Technology Network (ATN) | 4 | 14.8% |
| Regional Universities Network (RUN) | 4 | 14.8% |
| Non-aligned / No grouping (NA) | 5 | 18.5% |
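The response rates and the Table 2 percentages follow directly from the counts reported in the text. The short Python sketch below reproduces that arithmetic; the variable and function names are illustrative choices and are not taken from the project.

```python
# Counts as reported in the text and in Table 2.
INVITED = 46        # publicly identifiable ACODE representatives
COMPLETED = 27      # fully completed survey responses
MAX_PARTIAL = 29    # largest number of respondents contributing to any analysis

respondents_by_group = {
    "SPNZ": 5, "Go8": 4, "IRU": 5, "ATN": 4, "RUN": 4, "NA": 5,
}


def percent(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


response_rate = percent(COMPLETED, INVITED)
max_rate = percent(MAX_PARTIAL, INVITED)
group_share = {g: percent(n, COMPLETED) for g, n in respondents_by_group.items()}

print(f"Completed responses: {response_rate} %")
print(f"Largest analysis sample: {max_rate} %")
for group, share in group_share.items():
    print(f"{group}: {share} %")
```

The group shares are calculated against the 27 completed responses, which is the denominator implied by the percentages in Table 2.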

It was possible to compare the proportions of respondents in each grouping with both the target population of the 46 institutions with an identified ACODE representative, as well as with the entire population of 48 Australasian universities. In both cases, Fisher’s Exact Two-sided Test for comparing proportions was possible and there was no significant difference in the proportions of institutional groupings between the respondent sample and the target population (Fisher’s Exact Two-sided Test p > 0.979), and between the respondent sample and the entire population (Fisher’s Exact Two-sided Test p > 0.957). These findings, combined with the relatively high response rate, give some confidence that the respondent sample is representative of both the target population and the wider university sector in Australasia. A limitation that must be acknowledged is that a single representative may not be in a position to provide a complete and comprehensive response on behalf of their institution.

The Framework Elements

Respondents were shown the framework as presented in Figure 1 and Table 1 and given a short audio commentary with background information about the project, the framework and the purpose of the survey; a further written background document was available via a hyperlink. For each of the six framework elements, respondents were asked to rate:

- how important they felt that element was for effective management of the OLE at their university (using a scale of not important, somewhat important, important and very important); and

- how satisfied they were with their university’s performance on that element (using a scale of not satisfied, partially satisfied, satisfied and very satisfied).

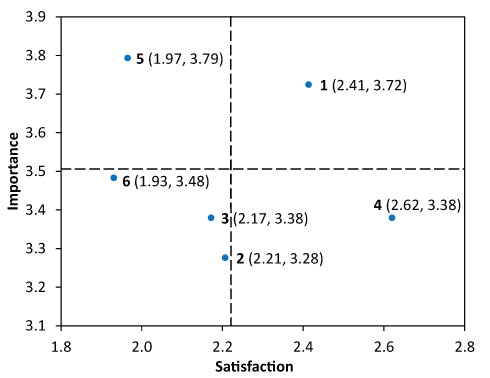

A method for visualising and interpreting importance-satisfaction data is the importance-satisfaction grid (Aigbedo & Parameswaran, 2004) — where the importance rating is plotted on the vertical axis and the satisfaction rating is plotted on the horizontal axis. Using the survey data from the 29 respondents (63.0 %) who completed this section, and assuming an ordinal rating scale of 1–4 for the ratings of importance and satisfaction, the mean ratings of importance and satisfaction (out of 4) for each of the six framework elements are plotted in Figure 2. The grand means (means of the six element means) for importance and satisfaction are also plotted as dashed lines to provide an indication of the relative ranking of the element mean ratings.

Figure 2. Importance-satisfaction grid for mean ratings of framework elements

1. Planning; 2. Organisational structure; 3. Governance; 4. Technologies; 5. Resourcing; 6. Evaluation
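The grid logic can be sketched in a few lines of Python: each element is placed relative to the grand means, which act as the dashed dividing lines. The mean ratings below are HYPOTHETICAL placeholders, not the survey results (those are reported only graphically in Figure 2), and the element names and function name are likewise illustrative.

```python
from statistics import mean

# HYPOTHETICAL (importance, satisfaction) mean ratings on the 1-4 scale.
# These values are placeholders and are not the values plotted in Figure 2.
ratings = {
    "Element A": (3.8, 2.2),
    "Element B": (3.2, 2.6),
    "Element C": (3.4, 3.1),
    "Element D": (3.6, 2.9),
}


def classify(ratings):
    """Place each element in a quadrant relative to the grand means."""
    grand_importance = mean(imp for imp, _ in ratings.values())
    grand_satisfaction = mean(sat for _, sat in ratings.values())
    quadrants = {}
    for name, (imp, sat) in ratings.items():
        vertical = "high importance" if imp >= grand_importance else "low importance"
        horizontal = "high satisfaction" if sat >= grand_satisfaction else "low satisfaction"
        quadrants[name] = f"{vertical}, {horizontal}"
    return grand_importance, grand_satisfaction, quadrants


grand_imp, grand_sat, quadrants = classify(ratings)
for name, quadrant in quadrants.items():
    print(f"{name}: {quadrant}")
```

Elements falling in the "high importance, low satisfaction" quadrant are the natural candidates for improvement action.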

Differences in mean ratings between institutional groupings were considered. An analysis of variance (ANOVA) provides a test for the significance of observed differences in means between groups. A requirement for the ANOVA test is that the variation of the mean rating be similar in all groups. Where Levene’s test of homogeneity of variance fails, it may be possible to perform a robust ANOVA test using the Welch test statistic instead. In this case, there were three importance ratings for which Levene’s test failed, and for all three ratings, all respondents from a particular institutional group gave the same rating response, meaning that it was not possible to estimate the variance of the mean for that group in the wider population. In this situation, it may not be reliable to use the ANOVA test result and it is not possible to perform the robust ANOVA calculation. However, for the nine mean ratings where it was possible to perform an ANOVA test, no significant differences in mean ratings of importance and satisfaction were observed between institutional groupings (0.237 < p < 0.910). This result suggests a high degree of commonality in the ratings across the sector.
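The screening procedure described above can be illustrated with a minimal pure-Python sketch. It computes the one-way ANOVA F statistic, computes Levene's W as the ANOVA F statistic of the absolute deviations from each group mean, and flags groups in which every respondent gave the same rating. The ratings are HYPOTHETICAL, the function names are illustrative, and p-values are deliberately omitted because they require the F distribution (available in a statistics package, for example `scipy.stats.f_oneway` and `scipy.stats.levene`). This is not the project's actual analysis code.

```python
from statistics import mean


def anova_f(groups):
    """One-way ANOVA: return (F, df_between, df_within)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand = mean(x for g in groups for x in g)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def levene_w(groups):
    """Levene's W: the ANOVA F statistic of |x - group mean|."""
    deviations = [[abs(x - mean(g)) for x in g] for g in groups]
    return anova_f(deviations)[0]


def has_zero_variance_group(groups):
    """True if every respondent in some group gave the same rating."""
    return any(len(set(g)) == 1 for g in groups)


# HYPOTHETICAL ratings on the 1-4 scale for three institutional groupings.
groups = [[3, 4, 4, 3, 4], [4, 3, 3, 4], [4, 4, 4, 4]]

if has_zero_variance_group(groups):
    print("A group has identical ratings; its variance cannot be estimated.")
f_stat, df_b, df_w = anova_f(groups)
print(f"F({df_b}, {df_w}) = {f_stat:.3f}; Levene W = {levene_w(groups):.3f}")
```

The third hypothetical group mirrors the situation reported in the text, where identical responses within one grouping make the group variance inestimable.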

We observe, relatively speaking, that:

- Planning and Resourcing are considered most important;

- Organisational structure was given the lowest mean importance rating;

- Technologies received the highest mean rating of satisfaction, in conjunction with the equal second lowest mean rating of importance, suggesting a view that this element is perceived as being relatively under control;

- respondents were least satisfied with Resourcing and Evaluation; and

- the element with the highest mean importance rating and almost equal lowest mean satisfaction rating was Resourcing, suggesting that respondents would like action to improve this aspect of OLEs.

The typical faculty-based structure of universities, centred around academic discipline areas, leads to a high degree of autonomy and decentralised operational decision making, resulting in ‘bottom-heavy’ institutions that are complex to govern and not conducive to rapid strategic innovation (Schneckenberg, 2009). The low rating of importance of Organisational structure as a contributor to the effective management of OLEs observed here may reflect the fact that OLEs are typically selected, implemented and managed centrally within a university, and, while many other areas of the university may use the OLE for a range of purposes, the broader structure of the university may have limited direct impact on the high-level governance of the OLE.

Success in the implementation of an OLE, however that might be defined, does not automatically follow just because a technical solution is put in place; there is a need for system evaluation (Hilgarth, 2011). For an OLE, ‘Evaluation’ might encompass assessment of the effectiveness of a range of aspects, including: student and staff use and perception of the system; short- and longer-term take-up of the system by academic units; integration of the system into curriculum (re-)developments; the technical success of the system; and the success of change-management initiatives associated with the system implementation (Holt, Palmer & Dracup, 2011). A meta-analysis of the literature examining the impact of e-learning on student learning outcomes concluded that fewer than five per cent of the 1132 published evaluations discovered contained a research design rigorous enough to warrant inclusion in the meta-analysis (Means et al., 2009). An investigation of e-learning undertaken by the UK Higher Education Academy included site visits to seven universities to assess a range of factors, including institutional evaluation of e-learning. They found that all seven were having difficulty evaluating their OLEs, and suggested that this was due to, “the pressure to implement rather than evaluate, the low status of pedagogic research, and poorly defined measures of institutional success …” (Sharpe et al., 2006, p.38). An investigation undertaken by the EDUCAUSE Center for Applied Research to explore the primary capabilities claimed for OLEs examined a wide range of recent literature. They found that most individual studies sought to correlate the use of the system with improved student academic performance; however, they also found evidence that this correlation is weak, and that students themselves view at least some elements of the OLEs as ‘educational infrastructure’, expected to be available and not necessarily a significant enhancer of their learning experience (White & Larusson, 2010). So, system evaluation is important, but complex, and often an afterthought in the rush to implement an OLE. Much of the published OLE evaluation literature is not rigorous in design, so such evaluations are unlikely to provide reliable data for action.

Kenny (2004) identified inadequate resourcing of educational technology projects as a major barrier to success. This under-resourcing was attributed to a poor understanding of the scope of what is required in educational technology projects by senior university managers responsible for such systems, and in particular a lack of appreciation of the staff time involved in implementing such systems (Kenny, 2004). Mott and Wiley (2009) offer another perspective on what might contribute to low satisfaction with OLE resourcing. They observe that, despite a very large allocation of resources, collectively, to educational technology in general, over a long time period, there has been little observable evidence of the ‘revolution’ in learning and teaching continually promised by such technologies (Mott & Wiley, 2009).

Distributed Leadership in Operation

Respondents were provided with the following concise definition of distributed leadership in the context of quality management of OLEs: “action by many people working collectively across the institution to build leadership capacity in learning and teaching”. Respondents were asked to consider the performance of distributed leadership in their organisation from the perspective of the alignments between:

- the vertical (formal line reporting relationships) and horizontal (peers in different work groups) actors/actions; and

- the formal (organisationally appointed/sanctioned) and informal (emergent and relationship-based) actors/actions.

For each of these two distributed leadership actors/actions, respondents were asked to rate:

- how important they felt the alignment between them was at their university (using a scale of not important, somewhat important, important and very important); and

- how effective (generally) they felt the alignment between them was at their university (using a scale of not effective, partially effective, effective and very effective).

Using the survey data from the 28 respondents (60.9 %) who completed this section, and assuming an ordinal rating scale of 1–4 for the ratings of importance and effectiveness, the mean ratings of importance and effectiveness (out of 4) for the two distributed leadership perspectives are given in Table 3.

Table 3: Mean ratings of importance and effectiveness of aspects of distributed leadership

| Distributed leadership actors/actions | Mean importance | Mean effectiveness |
| --- | --- | --- |
| Alignment of vertical and horizontal leadership | 3.71 | 3.68 |
| Alignment of formal and informal leadership | 2.21 | 2.18 |

Differences in mean ratings between institutional groupings were considered. Using the same procedure as described above, an appropriate ANOVA test was able to be performed for the second row of the table, and no significant differences in mean ratings of importance and effectiveness were observed between institutional groupings (0.829 < p < 0.940). This result suggests a high degree of commonality in the ratings across the sector. The mean ratings for both importance and effectiveness were lower for ‘Alignment of formal and informal leadership’ compared to the mean ratings for ‘Alignment of vertical and horizontal leadership’. The variances of both the importance ratings and the effectiveness ratings were not significantly different between the two distributed leadership actor/action groupings, so it was possible to perform an ANOVA test on the significance of the observed difference in the mean ratings. The observed differences in mean ratings were significant for both importance ($F_{55} = 64.36$; $p < 9.1 \times 10^{-11}$) and effectiveness ($F_{55} = 76.57$; $p < 6.2 \times 10^{-12}$).

The alignment of distributed leadership relationships that might be inferred from an organisational chart (including those that might be observed running both vertically and horizontally) was rated as significantly more important and effective than the alignment of informal distributed leadership relationships that might be seen as cutting obliquely across the formal linear linkages in the official organisational structure. Under such an environment, it would seem to be important for those in formal leadership roles to recognise and nurture distributed leadership capacity within universities. This view has some support in the leadership literature. Heckman, Crowston and Misiolek (2007) considered the leadership of virtual teams from a theoretical perspective. They classed virtual teams as networked, self-organising, technology-supported, small teams with the ability to bridge discontinuities of geography and time, characteristics often found in university project teams existing in large, multi-campus universities. They argued that such teams will be most effective when they exhibit ‘first-order’ distributed leadership for the achievement of the required tasks, in combination with strong ‘second-order’ centralised leadership that governs team actions (Heckman, Crowston & Misiolek, 2007). Mehra, Smith, Dixon and Robertson (2006) conducted an empirical investigation of 28 similar work teams organised in one of three structures: leader-centred (teams with a single formal leader); distributed-fragmented (teams with fully distributed leadership); and distributed-coordinated (teams with both a formal leader and informal leader(s) acting in a coordinated partnership). They found that distributed-coordinated teams performed the best at achieving their explicit organisational function (Mehra et al., 2006).

Characteristics of Distributed Leadership

Respondents were presented with 12 characteristics of distributed leadership identified in the framework and presented in Table 1. For each of the 12 characteristics of distributed leadership, respondents were asked to rate:

- how important that characteristic is for effective distributed leadership at their university (using a scale of not important, somewhat important, important and very important); and

- how clearly in evidence that characteristic of distributed leadership is at their university (using a scale of not in evidence, partially in evidence, in evidence and strongly in evidence).

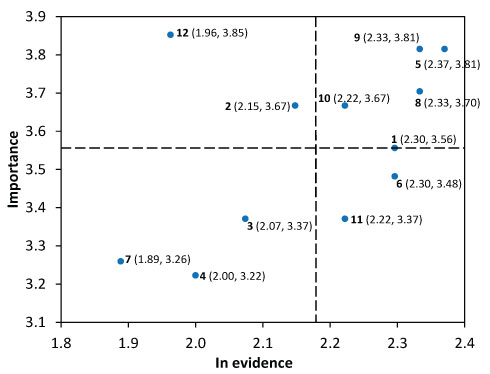

Using the survey data from the 27 respondents (58.7 %) who completed this section, and assuming an ordinal rating scale of 1–4 for the ratings of importance and ‘in evidence’, the mean ratings of importance and ‘in evidence’ (out of 4) for each of the 12 characteristics of distributed leadership are plotted in Figure 3 in a form analogous to the importance-satisfaction grid. The grand means for importance and ‘in evidence’ are also plotted as dashed lines to provide an indication of the relative ranking of the characteristic mean ratings.

Figure 3. Importance-‘in evidence’ grid for mean ratings of distributed leadership characteristics

1. Enabled individual and collective agency; 2. Co-created and shared vision; 3. Inclusive of all those who lead; 4. Broadest recognition of leadership; 5. Communicative and engaging; 6. Appropriate responsibilities; 7. Meaningful rewards; 8. Trusting and respectful; 9. Collaborative in development; 10. Nurturing of valued professional expertise; 11. Valuing professional forums and communities; 12. Continuity and sustainability

Differences in mean ratings between institutional groupings were considered. Using the same procedure as described above, an appropriate ANOVA test was able to be performed for 17 of the 24 mean ratings, and for all 17 no significant differences in mean ratings of importance and ‘in evidence’ were observed between institutional groupings (0.074 < p < 0.846). This result suggests a high degree of commonality in the ratings across the sector. We observe, relatively speaking, that:

- characteristics rated as important and most in evidence included ‘Communicative and engaging’, ‘Collaborative in development’ and ‘Trusting and respectful’;

- characteristics rated as least important and least in evidence included ‘Meaningful rewards’ and ‘Broadest recognition of leadership’; and

- perhaps most significantly for this section, while most characteristics appeared on a generally diagonal line in the grid (those rating relatively highly on importance were also rated as relatively highly in evidence), one characteristic was decidedly ‘off-diagonal’ — ‘Continuity and sustainability’ received the highest mean rating for importance combined with almost the lowest mean rating for ‘in evidence’.

The final observation suggests a concern for the long-term sustainability of distributed leadership in the sector. Special projects for the development of distributed leadership in universities often experience difficulty once seed funding or other support is removed (Bianchini, Maxwell and Dovey, 2013). More systematic adoption of an institutional commitment to distributed leadership is likely to be an initiative of the senior university leadership. However, these levels of leadership are prone to regular change, and the arrival of new senior university leadership often heralds the arrival of new ‘pet’ initiatives which can replace previous commitments (Kezar, 2009).

Developing and Sustaining Distributed Leadership

Distributed leadership approaches are highly relevant to the quality management of OLEs in higher education. The leadership of quality OLEs is becoming more complex and demanding due to:

- growing size and reach of universities;

- growing number of ICTs which constitute OLEs;

- loosening of institutional control over certain technologies which can be used for effective learning and teaching;

- greater size and more diverse composition of universities’ workforces and student populations;

- ever-present multiplicity of curricular and pedagogical models which underlie an ever-expanding range of occupations and professions requiring higher-level education; and

- intensifying of national and global competition in the e-Learning marketplace.

No single formal leader at the top, no matter how ambitious and knowledgeable, could possibly contend with the complexity of issues related to the quality management of online learning environments. Leaders must be mobilised down, across and throughout the organisation to realise the full benefits of massive institutional investments in online learning systems. The rapid changes in information and educational technologies mean that the OLE is a dynamic, fast-moving space, and educators need to work in ways that are new and sometimes very different from those to which they have been accustomed. Further, they cannot necessarily rely on repeating strategies and solutions of the past without understanding what modern technologies can offer and analysing what is required for specific cohorts and learning needs at a particular point in time. Linking those from the centre of the organisation with those from the faculties, working locally – but within an institutional context and with sector-wide links and global understanding – mandates the involvement of many people across an institution. Building distributed leadership capacity at all levels seems an important part of gaining the best learning and teaching experiences and outcomes in the OLE space.

Even within the distributed leadership construct there remains a hierarchy of leadership, and for change management within the OLE space to be effective, leaders need to act in ways that accord with their position. While making their own contribution to the university’s mission and vision in this regard, it is the responsibility of senior leaders to set an appropriate organisational framework to help shape the effective change management of the OLE. They need to create and/or allow opportunities for various approaches and strategies to be pursued, so that distributed leadership can flourish within such a framework and within well-understood and accepted boundaries. Leaders at all levels need to be encouraged and supported to see how their own leadership skills can be enhanced and how they can build leadership capacity in others. As major technology and pedagogical decisions are implemented, well-led interconnected networks, or teams, allow better outcomes for all concerned: stakeholders work towards common goals in an environment of mutual respect and support, and engagement with the OLE agenda becomes both broader and deeper. A distributed leadership approach that is systematically embedded in an organisation’s culture and practice is likely to be more robust, and have the sustainability required to survive the inevitable changes of key personnel in the leadership structure.

Conclusions

OLEs need to be governed well, most importantly for the goal of quality student learning. Distributed models of leadership have been proposed as appropriate for the good governance of both large IT systems and higher education. A group of Australian universities undertook to develop and disseminate an overall framework for the quality management of OLEs in higher education. As part of the validation of the framework, all 46 identifiable institutional representatives of the Australasian Council on Open, Distance and E-Learning were exposed to the framework via a survey. The survey sought perceptions of the value and practice related to both the framework and distributed leadership in Australasian universities.

The survey response rate was approximately 60 per cent and representative of the Australasian university sector. In summary, OLE Technology was considered to be under control, while respondents would like Resourcing for OLEs to be improved. Considering distributed leadership in their institution, respondents reported that the organisational alignments represented by ‘official’ reporting and peer relationships were significantly more important and more effective than the organisational alignments linking the formal and informal leaders. Distributed leadership requires sponsorship and support from the formal institutional leadership structure. Respondent ratings of a range of desirable characteristics of distributed leadership highlighted a key issue – the characteristic of ‘continuity and sustainability’ received the highest rating of importance and a low rating of ‘in evidence’. There are concerns about the sustainability of distributed leadership.

The research presented here provides important insights into the quality management of OLEs in Australasian universities. Data were collected by using a survey to prompt those with institutional leadership roles for OLE governance to reflect on and characterise practices at their respective institutions. The research highlights the relative importance of, and satisfaction with, OLE governance elements in practice, and characterises the application of distributed leadership in universities. No significant differences were observed in the ratings reported between recognised university groupings, suggesting a high degree of consistency across the Australasian university sector, regardless of OLE type and particular phase of system life-cycle. While the research project was undertaken in an Australian context, all of the key findings resonate with the international IT systems and educational technology literature, and hence should have wide general relevance. Based on this, we offer advice on supporting and developing distributed leadership for the quality management of online learning environments.

References

- Aigbedo, H. and Parameswaran, R. (2004). Importance-performance analysis for improving quality of campus food service. In International Journal of Quality & Reliability Management, 21(8), (pp. 876–896).

- Australasian Council on Open, Distance and E-Learning. (2010). ACODE Benchmarks. Canberra: ACODE.

- Bianchini, S.; Maxwell, T.; Dovey, K. (2013). Rethinking leadership in the academy: an Australian case. In Innovations in Education and Teaching International, (pp. 1–12).

- Bolden, R.; Petrov, G. and Gosling, J. (2009). Distributed Leadership in Higher Education: Rhetoric and Reality. In Educational Management Administration & Leadership, 37(2), (pp. 257–277).

- Charles Sturt University. (2010). CSU Educational Technology Framework. Wagga Wagga: Charles Sturt University.

- EDUCAUSE Learning Initiative. (2011). 7 Things You Should Know About LMS Evaluation. Boulder, Co.: EDUCAUSE.

- Harris, A. (2009). Distributed leadership: different perspectives. Dordrecht; London: Springer.

- Heckman, R.; Crowston, K.; Misiolek, N. (2007). A Structurational Perspective on Leadership in Virtual Teams. In K. Crowston, S. Sieber & E. Wynn (eds.), Virtuality and Virtualization, (pp. 151–168). Boston: Springer.

- Hilgarth, B. (2011). E-Learning Success in Action! From Case Study Research to the creation of the Cybernetic e-Learning Management Model. In International Journal of Computer Information Systems and Industrial Management Applications, 3, (pp. 415–426).

- Holt, D.; Palmer, S.; Dracup, M. (2011, 4–7 December). Leading an evidence-based, multi-stakeholder approach to evaluating the implementation of a new online learning environment: an Australian institutional case study. Paper presented at the 28th Annual Conference of the Australasian Society for Computers in Learning in Tertiary Education, Hobart.

- Holt, D.; Palmer, S.; Munro, J.; Solomonides, I.; Gosper, M.; Hicks, M.; et al. (2013). Leading the quality management of online learning environments in Australian higher education. In Australasian Journal of Educational Technology, 29(3), (pp. 387–402).

- Hussain, Z.I. and Cornelius, N. (2009). The use of domination and legitimation in information systems implementation. In Information Systems Journal, 19(2), (pp. 197–224).

- Instructional Technology Council. (2011). 2010 Distance Education Survey Results. Washington, D.C.: Instructional Technology Council.

- IT Governance Institute. (2012). COBIT 5 — Introduction. Rolling Meadows, IL: IT Governance Institute.

- Jaccard, J. and Wan, C.K. (1996). LISREL approaches to interaction effects in multiple regression. Thousand Oaks, Calif.; London: SAGE.

- Jameson, J.; Ferrell, G.; Kelly, J.; Walker, S.; Ryan, M. (2006). Building trust and shared knowledge in communities of e-learning practice: collaborative leadership in the JISC eLISA and CAMEL lifelong learning projects. In British Journal of Educational Technology, 37(6), (pp. 949–967).

- Jones, S.; Lefoe, G.; Harvey, M.; Ryland, K. (2012). Distributed leadership: a collaborative framework for academics, executives and professionals in higher education. In Journal of Higher Education Policy and Management, 34(1), (pp. 67–78).

- Kenny, J. (2004). A study of educational technology project management in Australian universities. In Australasian Journal of Educational Technology, 20(3), (pp. 388–404).

- Keppell, M.; O’Dwyer, C.; Lyon, B.; Childs, M. (2010). Transforming distance education curricula through distributive leadership. In ALT-J, Research in Learning Technology, 18(3), (pp. 165–178).

- Kezar, A. (2009). Change in Higher Education: Not Enough, or Too Much? In Change: The Magazine of Higher Learning, 41(6), (pp. 18–23).

- Lefoe, G. (2010). Creating the Future: Changing Culture through Leadership Capacity Development. In U.-D. Ehlers & D. Schneckenberg (eds.), Changing Cultures in Higher Education, (pp. 189–204). Springer Berlin Heidelberg.

- Lonn, S. and Teasley, S.D. (2009). Saving time or innovating practice: Investigating perceptions and uses of Learning Management Systems. In Computers & Education, 53(3), (pp. 686–694).

- Marshall, S. (2007). E-Learning Maturity Model — Process Descriptions. Wellington: Victoria University of Wellington / New Zealand Ministry of Education.

- Means, B.; Toyama, Y.; Murphy, R.; Bakia, M.; Jones, K. (2009). Evaluation of Evidence-Based Practices in Online Learning: A Meta-Analysis and Review of Online Learning Studies. Washington, D.C.: U.S. Department of Education.

- Mehra, A.; Smith, B.R.; Dixon, A.L.; Robertson, B. (2006). Distributed leadership in teams: The network of leadership perceptions and team performance. In The Leadership Quarterly, 17(3), (pp. 232–245).

- Mott, J. and Wiley, D. (2009). Open for Learning: The CMS and the Open Learning Network. In in education, 15(2), (pp. 1–10).

- Norman, G. (2010). Likert scales, levels of measurement and the “laws” of statistics. In Advances in Health Sciences Education, 15(5), (pp. 625–632).

- Salinas, M.F. (2008). From Dewey to Gates: A model to integrate psychoeducational principles in the selection and use of instructional technology. In Computers & Education, 50(3), (pp. 652–660).

- Schneckenberg, D. (2009). Understanding the real barriers to technology-enhanced innovation in higher education. In Educational Research, 51(4), (pp. 411–424).

- Sharpe, R.; Benfield, G.; Roberts, G.; Francis, R. (2006). The undergraduate experience of blended e-learning: a review of UK literature and practice. York: The Higher Education Academy.

- Söderström, T.; From, J.; Lövqvist, J.; Törnquist, A. (2012). The Transition from Distance to Online Education: Perspectives from the Educational Management Horizon. In European Journal of Open, Distance and E-Learning, 2012(I).

- White, B. and Larusson, J.A. (2010). Strategic Directives for Learning Management System Planning. Boulder: EDUCAUSE Centre for Applied Research.

- Zhang, J. and Faerman, S.R. (2007). Distributed leadership in the development of a knowledge sharing system. In European Journal of Information Systems, 16(4), (pp. 479–493).

Acknowledgements

The authors wish to thank the partner institutions (Deakin University, Macquarie University, RMIT University, University of South Australia, and University of Southern Queensland) for their generous contribution to this project, all of the members of the project team, and all those participants who contributed to the data collection processes. Support for this project has been provided by the Australian Government Office for Learning and Teaching. The views in this project do not necessarily reflect the views of the Australian Government Office for Learning and Teaching.